This post is internal material of the 🔗365-Day Deep Learning Training Camp (365天深度学习训练营)

Original author: K同学啊

I. Data Preparation

1. Import the Data

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.preprocessing import MinMaxScaler
from keras.layers import Dense,LSTM,Bidirectional
from keras import Input
from keras.optimizers import Adam
from keras.models import Sequential
from sklearn.metrics import mean_squared_error,r2_score
import warnings

warnings.filterwarnings('ignore')

df = pd.read_csv('woodpine2.csv')
print(df)

2. Data Visualization

'''
Visualization
'''
fig,ax = plt.subplots(1,3,constrained_layout=True,figsize=(14,3))
sns.lineplot(data=df['Tem1'],ax=ax[0])
sns.lineplot(data=df['CO 1'],ax=ax[1])
sns.lineplot(data=df['Soot 1'],ax=ax[2])
plt.show()

# Drop the first column (the time column) so that only Tem1, CO 1 and Soot 1 remain
df = df.iloc[:,1:]

II. Feature Engineering

1. Build the Feature and Target Variables

'''
Extract time-series windows from a Pandas DataFrame and build input (x) and
output (y) arrays suitable for training a machine-learning model
'''
# Use Tem1, CO 1 and Soot 1 from the previous 8 time steps as X, and Tem1 at the 9th step as y
width_x = 8
width_y = 1

x = []
y = []
in_start = 0
for _, _ in df.iterrows():
    in_end = in_start + width_x
    out_end = in_end + width_y
    if out_end < len(df):
        X_ = np.array(df.iloc[in_start:in_end,])
        # Flatten X_ (8 rows x 3 columns) into a 1-D array of length len(X_) * 3,
        # i.e. unroll the multi-column window into one long vector
        X_ = X_.reshape(len(X_)*3)
        # Column 0 (Tem1) is the only target
        y_ = np.array(df.iloc[in_end:out_end,0])
        x.append(X_)
        y.append(y_)
    in_start += 1

x = np.array(x)
y = np.array(y)

print(x.shape,y.shape)
(5939, 24) (5939, 1)
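The loop above walks the DataFrame row by row. As a hedged sketch (not the original author's code), the same windows can be built in one shot with NumPy's `sliding_window_view` (available since NumPy 1.20). `make_windows` and `make_windows_loop` are hypothetical helper names, and the toy array stands in for the three feature columns. The sketch keeps the loop's strict `out_end < len(df)` check, so it yields `len(df) - width_x - width_y` samples; the 5939 windows above therefore imply that `df` has 5948 rows.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def make_windows(data, width_x=8, width_y=1, target_col=0):
    """Hypothetical helper: width_x past rows (all columns) -> next width_y values of target_col.

    Mirrors the loop's strict `out_end < len(df)` condition, so it returns
    len(data) - width_x - width_y samples.
    """
    data = np.asarray(data)
    n_samples = len(data) - width_x - width_y
    if n_samples <= 0:
        raise ValueError("not enough rows for a single window")
    # shape (n_rows - width_x + 1, n_features, width_x): the window axis is appended last
    windows = sliding_window_view(data, window_shape=width_x, axis=0)[:n_samples]
    # reorder to (samples, time steps, features), then flatten each window row by row,
    # which matches X_.reshape(len(X_)*3) in the loop
    X = windows.transpose(0, 2, 1).reshape(n_samples, -1)
    # targets: the width_y values of target_col that follow each input window
    y = sliding_window_view(data[width_x:, target_col], window_shape=width_y)[:n_samples]
    return X, y


def make_windows_loop(data, width_x=8, width_y=1, target_col=0):
    """Reference version that follows the article's loop, on a plain array."""
    data = np.asarray(data)
    X, y = [], []
    for in_start in range(len(data)):
        in_end = in_start + width_x
        out_end = in_end + width_y
        if out_end < len(data):
            X.append(data[in_start:in_end].reshape(-1))
            y.append(data[in_end:out_end, target_col])
    return np.array(X), np.array(y)


# Toy stand-in for the three feature columns (20 rows x 3 columns)
toy = np.arange(60, dtype=float).reshape(20, 3)
X_fast, y_fast = make_windows(toy)
X_ref, y_ref = make_windows_loop(toy)
print(X_fast.shape, y_fast.shape)  # (11, 24) (11, 1)
print(np.array_equal(X_fast, X_ref) and np.array_equal(y_fast, y_ref))  # True
```

The vectorized version avoids `iterrows()`, which is slow on large frames, while producing exactly the same arrays as the loop.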

2. Normalization

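'''
Normalization
'''
sc = MinMaxScaler(feature_range=(0,1))
x_scaled = sc.fit_transform(x)

# Many models, RNNs and CNNs in particular, expect input data of a specific shape.
# For an RNN the input is typically 3-D, with shape (samples, time steps, features)
x_scaled = x_scaled.reshape(len(x_scaled),width_x,3)

3. Split into Training and Test Sets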

'''
Split into training and test sets
'''
# Chronological split (no shuffling): the first 5000 windows for training, the remaining 939 for testing
X_train = np.array(x_scaled[:5000]).astype('float64')
y_train = np.array(y[:5000]).astype('float64')
X_test = np.array(x_scaled[5000:]).astype('float64')
y_test = np.array(y[5000:]).astype('float64')

print(X_train.shape,X_test.shape)
(5000, 8, 3) (939, 8, 3)

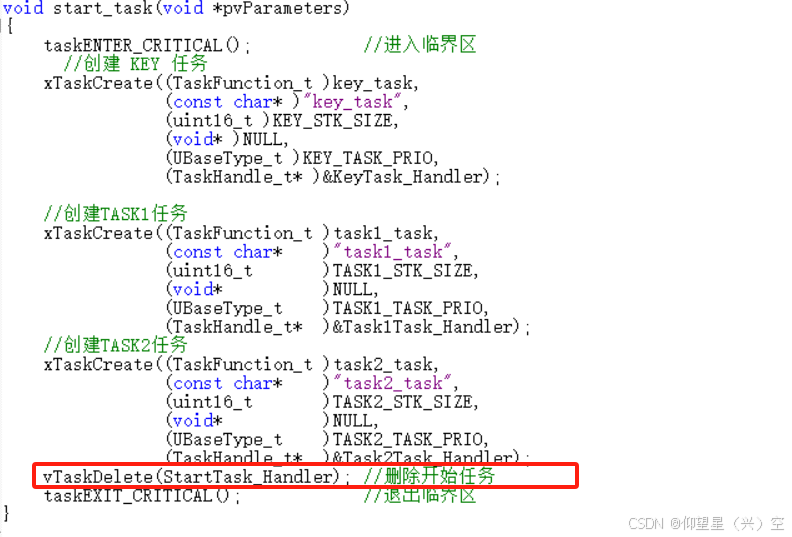
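In the normalization step, `MinMaxScaler` is fitted on all 5939 windows before the split, so the minimum and maximum of the test period also shape the training inputs. A common alternative, sketched below under the assumption that the same chronological split is kept, is to fit the scaler on the training rows only and reuse it for the test rows. Random data (`x_demo`, a hypothetical stand-in for `x`) replaces the real features, and the manual check shows what `MinMaxScaler(feature_range=(0, 1))` computes per column: (x - min) / (max - min).

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(0)
x_demo = rng.normal(loc=50.0, scale=10.0, size=(120, 24))  # synthetic stand-in for x (samples x 24)
n_train = 100  # plays the role of the 5000-sample cut-off

sc = MinMaxScaler(feature_range=(0, 1))
x_train_scaled = sc.fit_transform(x_demo[:n_train])  # min/max learned from training rows only
x_test_scaled = sc.transform(x_demo[n_train:])       # test rows reuse the training min/max

# The same transform written out by hand, column by column
col_min = x_demo[:n_train].min(axis=0)
col_max = x_demo[:n_train].max(axis=0)
manual = (x_demo[n_train:] - col_min) / (col_max - col_min)
print(np.allclose(manual, x_test_scaled))  # True

# Reshape to (samples, time steps, features) as in the article
X_train_demo = x_train_scaled.reshape(len(x_train_scaled), 8, 3)
X_test_demo = x_test_scaled.reshape(len(x_test_scaled), 8, 3)
print(X_train_demo.shape, X_test_demo.shape)  # (100, 8, 3) (20, 8, 3)
```

With this approach the scaled test values can fall slightly outside [0, 1]; that is expected and reflects what the model would see on genuinely new data.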

III. Build the Model

'''
Build the model
'''
# Stacked (multi-layer) LSTM
model_lstm = Sequential()

# units=64: number of LSTM units (the size of the hidden state), which sets the layer's capacity.
# activation='relu': ReLU replaces the LSTM's default tanh activation. This is the author's choice;
#   note that with a non-default activation TensorFlow cannot use its fast cuDNN kernel on GPU.
# return_sequences=True: return the output of every time step, not only the last one.
#   The next LSTM layer needs this full sequence as its input.
# input_shape=(X_train.shape[1], 3): X_train.shape[1] is the number of time steps (width_x), 3 is the number of features.
model_lstm.add(LSTM(units=64,activation='relu',return_sequences=True,input_shape=(X_train.shape[1],3)))

# return_sequences is left at its default (False), so this layer returns only the output of the
# last time step, which is what the Dense output layer expects
model_lstm.add(LSTM(units=64,activation='relu'))

# Dense: fully connected output layer.
# width_y: number of output units, matching the number of target values (here 1)
model_lstm.add(Dense(width_y))

model_lstm.summary()

Model: "sequential_1"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 lstm_2 (LSTM)               (None, 8, 64)             17408
 lstm_3 (LSTM)               (None, 64)                33024
 dense_1 (Dense)             (None, 1)                 65
=================================================================
Total params: 50,497
Trainable params: 50,497
Non-trainable params: 0
_________________________________________________________________
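The `Param #` column can be checked by hand. An LSTM layer has four gates, each with an input kernel (input_dim x units), a recurrent kernel (units x units) and a bias (units), so it holds 4 * (input_dim * units + units * units + units) weights; a Dense layer holds inputs * outputs + outputs. A small sketch reproducing the summary (the helper names `lstm_params` and `dense_params` are only for illustration):

```python
def lstm_params(units, input_dim):
    # 4 gates x (input kernel + recurrent kernel + bias)
    return 4 * (input_dim * units + units * units + units)


def dense_params(inputs, outputs):
    # weight matrix + bias
    return inputs * outputs + outputs


layer1 = lstm_params(units=64, input_dim=3)   # first LSTM sees 3 features per time step
layer2 = lstm_params(units=64, input_dim=64)  # second LSTM sees the 64-dim outputs of the first
head = dense_params(inputs=64, outputs=1)
print(layer1, layer2, head, layer1 + layer2 + head)  # 17408 33024 65 50497
```

This also shows why the second LSTM is almost twice as large as the first: its input is the 64-dimensional sequence from the first layer rather than the 3 raw features.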

IV. Train the Model

'''
Training
'''
# Only the loss is monitored (accuracy is not meaningful for this regression task), so no metrics are passed
model_lstm.compile(optimizer=Adam(1e-3),loss='mean_squared_error')

history_lstm = model_lstm.fit(X_train,y_train,
                              batch_size=64,
                              epochs=40,
                              validation_data=(X_test,y_test),
                              validation_freq=1)

Epoch 1/40
79/79 [==============================] - 2s 10ms/step - loss: 11291.5254 - val_loss: 4030.8381
Epoch 2/40
79/79 [==============================] - 1s 7ms/step - loss: 105.1108 - val_loss: 621.8700
Epoch 3/40
79/79 [==============================] - 1s 7ms/step - loss: 11.1059 - val_loss: 243.1555
Epoch 4/40
79/79 [==============================] - 1s 7ms/step - loss: 7.8829 - val_loss: 226.2837
Epoch 5/40
79/79 [==============================] - 1s 7ms/step - loss: 7.6028 - val_loss: 244.8176
Epoch 6/40
79/79 [==============================] - 1s 7ms/step - loss: 7.7717 - val_loss: 233.2066
Epoch 7/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5230 - val_loss: 223.5901
Epoch 8/40
79/79 [==============================] - 1s 8ms/step - loss: 7.6082 - val_loss: 186.9205
Epoch 9/40
79/79 [==============================] - 1s 8ms/step - loss: 7.6405 - val_loss: 228.2400
Epoch 10/40
79/79 [==============================] - 1s 7ms/step - loss: 7.8450 - val_loss: 189.5714
Epoch 11/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5957 - val_loss: 225.9814
Epoch 12/40
79/79 [==============================] - 1s 8ms/step - loss: 7.8151 - val_loss: 190.3151
Epoch 13/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5514 - val_loss: 171.3298
Epoch 14/40
79/79 [==============================] - 1s 7ms/step - loss: 7.3661 - val_loss: 213.4320
Epoch 15/40
79/79 [==============================] - 1s 8ms/step - loss: 7.5439 - val_loss: 184.0517
Epoch 16/40
79/79 [==============================] - 1s 8ms/step - loss: 7.5511 - val_loss: 171.2384
Epoch 17/40
79/79 [==============================] - 1s 7ms/step - loss: 8.8873 - val_loss: 234.2241
Epoch 18/40
79/79 [==============================] - 1s 7ms/step - loss: 7.4158 - val_loss: 150.4856
Epoch 19/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5648 - val_loss: 181.1954
Epoch 20/40
79/79 [==============================] - 1s 7ms/step - loss: 7.4036 - val_loss: 142.2856
Epoch 21/40
79/79 [==============================] - 1s 7ms/step - loss: 9.1357 - val_loss: 234.9497
Epoch 22/40
79/79 [==============================] - 1s 7ms/step - loss: 8.1056 - val_loss: 161.9010
Epoch 23/40
79/79 [==============================] - 1s 8ms/step - loss: 8.3753 - val_loss: 194.4306
Epoch 24/40
79/79 [==============================] - 1s 8ms/step - loss: 7.6563 - val_loss: 130.8293
Epoch 25/40
79/79 [==============================] - 1s 8ms/step - loss: 7.2591 - val_loss: 132.0350
Epoch 26/40
79/79 [==============================] - 1s 7ms/step - loss: 7.7230 - val_loss: 160.6877
Epoch 27/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5739 - val_loss: 174.5122
Epoch 28/40
79/79 [==============================] - 1s 7ms/step - loss: 6.6420 - val_loss: 134.3670
Epoch 29/40
79/79 [==============================] - 1s 7ms/step - loss: 8.0128 - val_loss: 186.9790
Epoch 30/40
79/79 [==============================] - 1s 7ms/step - loss: 7.7388 - val_loss: 175.0219
Epoch 31/40
79/79 [==============================] - 1s 8ms/step - loss: 7.2826 - val_loss: 162.1556
Epoch 32/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5271 - val_loss: 179.4215
Epoch 33/40
79/79 [==============================] - 1s 7ms/step - loss: 6.5252 - val_loss: 104.9447
Epoch 34/40
79/79 [==============================] - 1s 7ms/step - loss: 6.6822 - val_loss: 250.2031
Epoch 35/40
79/79 [==============================] - 1s 7ms/step - loss: 7.0211 - val_loss: 95.4579
Epoch 36/40
79/79 [==============================] - 1s 7ms/step - loss: 8.2577 - val_loss: 116.2146
Epoch 37/40
79/79 [==============================] - 1s 7ms/step - loss: 7.5878 - val_loss: 243.1991
Epoch 38/40
79/79 [==============================] - 1s 7ms/step - loss: 11.2559 - val_loss: 390.6861
Epoch 39/40
79/79 [==============================] - 1s 7ms/step - loss: 7.0651 - val_loss: 168.2283
Epoch 40/40
79/79 [==============================] - 1s 7ms/step - loss: 7.6015 - val_loss: 223.1200
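The log shows the validation loss swinging widely between epochs (95.4579 at epoch 35, 390.6861 at epoch 38) while the training loss stays between roughly 6.5 and 11.3 after epoch 3, so the weights left after the final epoch are not necessarily the best ones. The sketch below simply scans the logged `val_loss` values (copied by hand from the log above) for the best epoch; in a real run the same list is available as `history_lstm.history['val_loss']`. Keras also offers callbacks for this, such as `keras.callbacks.EarlyStopping(monitor='val_loss', restore_best_weights=True)` or `keras.callbacks.ModelCheckpoint(..., save_best_only=True)`, which this sketch does not use. Because `validation_data` is the test set here, choosing the epoch by `val_loss` would also make the reported test metrics optimistic; a separate validation slice taken from the training period avoids that.

```python
# val_loss per epoch, copied from the training log above (epochs 1..40)
val_loss = [
    4030.8381, 621.8700, 243.1555, 226.2837, 244.8176, 233.2066, 223.5901, 186.9205,
    228.2400, 189.5714, 225.9814, 190.3151, 171.3298, 213.4320, 184.0517, 171.2384,
    234.2241, 150.4856, 181.1954, 142.2856, 234.9497, 161.9010, 194.4306, 130.8293,
    132.0350, 160.6877, 174.5122, 134.3670, 186.9790, 175.0219, 162.1556, 179.4215,
    104.9447, 250.2031, 95.4579, 116.2146, 243.1991, 390.6861, 168.2283, 223.1200,
]

# index of the smallest validation loss
best_idx = min(range(len(val_loss)), key=val_loss.__getitem__)
best_epoch = best_idx + 1  # the log numbers epochs from 1
print(best_epoch, val_loss[best_idx])  # 35 95.4579
```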

V. Evaluate the Model

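'''
Evaluation
'''
# Loss curves
# SimHei lets Matplotlib render Chinese characters in labels; setting axes.unicode_minus to False
# keeps minus signs displaying correctly with that font (only needed for Chinese labels)
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

plt.figure(figsize=(5,3))
plt.plot(history_lstm.history['loss'],label='LSTM Training Loss')
plt.plot(history_lstm.history['val_loss'],label='LSTM Testing Loss')
plt.title('Training and Testing Loss')
plt.legend()
plt.show()

# Predict with the model
pred_y = model_lstm.predict(X_test)

y_test_one = [i for i in y_test]
pred_y_one = [i[0] for i in pred_y]

plt.figure(figsize=(8,6))
plt.plot(y_test_one[:1000],color='red',label='Actual')
plt.plot(pred_y_one[:1000],color='blue',label='Predicted')
plt.legend()
plt.show()

RMSE_lstm = mean_squared_error(y_test,pred_y) ** 0.5
# r2_score expects (y_true, y_pred), and unlike MSE its result depends on the argument order.
# The R2 value printed below was produced with the arguments swapped, i.e. r2_score(pred_y, y_test),
# so rerunning with the corrected order below will give a different number.
R2_lstm = r2_score(y_test,pred_y)

print('RMSE:',RMSE_lstm)
print('R2:',R2_lstm)
RMSE: 14.93720339824608
R2: 0.5779344291884085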
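For reference, RMSE = sqrt(mean((y - ŷ)²)) and R² = 1 - Σ(y - ŷ)² / Σ(y - ȳ)², where ȳ is the mean of the true values. Because ȳ is taken from the first argument, R² is not symmetric in its two arguments, whereas MSE and RMSE are. The NumPy sketch below uses toy numbers (not the article's data) to show this. It also checks that the printed RMSE is simply the square root of the last epoch's `val_loss`: the test set served as validation data and the loss is MSE, so sqrt(223.1200) ≈ 14.9372.

```python
import numpy as np


def rmse(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def r2(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    ss_res = np.sum((y_true - y_pred) ** 2)        # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # spread of the true values around their mean
    return float(1.0 - ss_res / ss_tot)


y_true = np.array([3.0, 5.0, 7.0, 9.0])  # toy values
y_pred = np.array([2.5, 5.5, 6.0, 9.5])

print(rmse(y_true, y_pred) == rmse(y_pred, y_true))  # True: RMSE is symmetric
print(r2(y_true, y_pred), r2(y_pred, y_true))        # about 0.9125 vs 0.9291: R^2 is not

# The final-epoch val_loss from the training log is the test-set MSE, so its square root is the RMSE
print(round(float(np.sqrt(223.1200)), 4))            # 14.9372
```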

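Summary: LSTMs suit time-series forecasting tasks like this one because they can:

Capture long-term dependencies in a time series

Cope with noisy data (a standard LSTM assumes evenly spaced time steps, so irregular intervals usually still need resampling or an explicit time-gap feature)

Model complex nonlinear relationships

Dynamically decide what to remember and what to forget, through the input, forget and output gates

Adapt well to a wide range of sequence-modeling tasks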
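The "remember and forget" behaviour comes from the gates inside each LSTM cell. Below is a minimal NumPy sketch of one time step, assuming the standard formulation with input gate i, forget gate f, candidate g and output gate o. The weight names `W`, `U`, `b` and the i, f, g, o block order are choices made for this sketch. The cell update is c_t = f * c_(t-1) + i * g and h_t = o * tanh(c_t). In the article's model, `activation='relu'` replaces the two tanh calls, while the gates keep their sigmoid.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell (sketch).

    W: (input_dim, 4*units) input kernel, U: (units, 4*units) recurrent kernel,
    b: (4*units,) bias; the four blocks are assumed to be ordered i, f, g, o.
    """
    z = x_t @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4, axis=-1)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # gates take values in (0, 1)
    g = np.tanh(g)                                 # candidate values
    c = f * c_prev + i * g                         # forget part of the old memory, write new content
    h = o * np.tanh(c)                             # expose a gated view of the memory
    return h, c


# Run 8 time steps of 3 features through a 64-unit cell, like the model's first layer
rng = np.random.default_rng(42)
input_dim, units, steps = 3, 64, 8
W = rng.normal(scale=0.1, size=(input_dim, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

h = np.zeros(units)
c = np.zeros(units)
sequence = rng.normal(size=(steps, input_dim))
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (64,) (64,)
```

When the forget gate f is close to 1 and the input gate i close to 0, the cell state passes through a step almost unchanged, which is what lets information survive over many time steps.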
