InternLM Large Model Practical Camp, Season 4: Deploying and Fine-tuning the InternVL Multimodal Model

- Tutorial: https://github.com/InternLM/Tutorial/tree/camp4/docs/L2/InternVL

- Video: https://www.bilibili.com/video/BV1nESCYWEnN/

- Task: https://github.com/InternLM/Tutorial/blob/camp4/docs/L2/InternVL/task.md

- Submission form: https://aicarrier.feishu.cn/share/base/form/shrcnUqshYPt7MdtYRTRpkiOFJd

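Task description

Basic tasks (completing these clears the level)

- Understand the common design patterns of multimodal large models and be able to roughly explain how they work.

- Understand the design of InternVL2 and be able to roughly describe its model architecture and training pipeline.

- Understand the core code LMDeploy uses to deploy a multimodal large model, run the provided gradio code, and chat with InternVL2 in the UI.

- Learn about XTuner, fine-tune InternVL2-2B on the given dataset, then relaunch the UI and see how the model's food-appreciation ability changes.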

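Basic 1: Common design patterns and working principles of multimodal large models

Video content

Example:

Brief summary

A multimodal large language model (MLLM) is a large AI model that can process and fuse several types of data (text, images, audio, video, and so on). These models are usually built on deep learning and can both understand and generate data in multiple modalities, which makes them capable across many complex application scenarios.

The main research focus for multimodal large models is aligning the feature spaces of different modalities. The common design patterns are:

- The Q-Former route, represented by BLIP-2 and MiniGPT-4

- The Linear Projector/MLP route, represented by LLaVA and LLaVA-NeXT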

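Q-Former aims to compress and extract visual features through a set of learnable query tokens, so that a large language model can interpret and use them. It is a lightweight transformer with a set of learnable query vectors that pull visual features out of a frozen vision model. It consists of two transformer submodules: a query encoder (driven by the learnable queries) and a text encoder & decoder. The queries read the frozen image features through cross attention, and in BLIP-2 the two submodules share their self-attention layers, which is how visual and text information are aligned and fused. Compared with a simple projector, Q-Former has more parameters, converges more slowly, and loses some visual information when it squeezes the image into a fixed number of queries, so it can be limiting on some tasks. Its training procedure is also relatively complex and needs more compute.

LLaVA's architecture has three core components: a vision encoder, a language model, and a linear projection. The vision encoder extracts features from the input image, the language model understands and generates the text response, and the linear projection bridges the visual features and the language model's embedding space. LLaVA's alignment is comparatively simple: just a linear layer. A Linear Projector keeps every visual token instead of compressing them, so it avoids that source of information loss, converges quickly in training, and performs well.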
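To make the Linear Projector route concrete, here is a minimal pure-Python sketch of the idea (not LLaVA's actual code): one shared linear layer maps every visual token into the language model's embedding size, so the number of tokens is unchanged. The function name `linear_project` and the tiny dimensions are illustrative assumptions.

```python
def linear_project(visual_tokens, weight, bias):
    """Map each visual token (length d_v) to the LLM embedding size d_t.

    weight has d_t rows of length d_v; bias has length d_t.
    The same weight and bias are applied to every token.
    """
    projected = []
    for token in visual_tokens:
        out = [
            sum(w * x for w, x in zip(row, token)) + b
            for row, b in zip(weight, bias)
        ]
        projected.append(out)
    return projected


# Toy example: 3 visual tokens of size 2, projected to size 3.
tokens = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, 0.5]

projected = linear_project(tokens, weight, bias)
print(len(projected), len(projected[0]))  # token count is kept, width changes
```

A Q-Former, by contrast, would return a fixed number of query outputs regardless of how many visual tokens go in, which is where the compression comes from.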

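Basic 2: InternVL2 design pattern, model architecture and training pipeline

The InternVL2 design is basically all in this figure: InternViT, Pixel Shuffle, dynamic handling of high-resolution images, and a tokenizer based on InternLM2.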
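The effect of Pixel Shuffle and dynamic tiling on sequence length can be sketched with a bit of arithmetic. The numbers below (448x448 tiles, patch size 14, a 0.5 downsample ratio, and an extra thumbnail tile for multi-tile images) are assumptions taken from the public InternVL descriptions, not from this post, and the function names are made up for illustration.

```python
def visual_tokens_per_tile(tile_size=448, patch_size=14, downsample_ratio=0.5):
    """Return (tokens before pixel shuffle, tokens after) for one tile."""
    patches_per_side = tile_size // patch_size
    tokens_before = patches_per_side ** 2
    # Pixel shuffle trades spatial resolution for channel depth, so the
    # token count shrinks by downsample_ratio squared.
    tokens_after = int(tokens_before * downsample_ratio ** 2)
    return tokens_before, tokens_after


def visual_tokens_for_image(num_tiles, use_thumbnail=True, **tile_kwargs):
    """Total visual tokens for an image split into num_tiles tiles."""
    _, per_tile = visual_tokens_per_tile(**tile_kwargs)
    # Assumed behaviour: a thumbnail tile is added only for multi-tile images.
    extra = 1 if use_thumbnail and num_tiles > 1 else 0
    return (num_tiles + extra) * per_tile


print(visual_tokens_per_tile())
print(visual_tokens_for_image(6))
```

Under these assumptions a high-resolution image costs a few thousand visual tokens at most, which is part of why `max_length` in the fine-tuning config is set generously.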

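Details of each component are below:

Training is staged: the first stage trains the MLP Projector, and the second stage is joint training, mainly on vision-text instruction data.

Those are roughly the key points. Let's get hands-on first and read the details later~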

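Deploying a multimodal large model with LMDeploy

A quick recap of LMDeploy's strengths

The LMDeploy environment was already set up in the Basics Island levels (look back there if needed), so here we deploy directly:

Just change the model path in the demo.py provided by the tutorial.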
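For reference, the core of an LMDeploy vision-language call is small. The sketch below follows LMDeploy's documented `pipeline` interface; the wrapper name `describe_image`, the `session_len=8192` value, and the example paths are my own assumptions, and it needs a GPU plus a local copy of the model to actually run.

```python
def describe_image(model_path, image_path, question):
    """Ask a vision-language model one question about one image."""
    # Imported lazily so this file can be read without lmdeploy installed.
    from lmdeploy import pipeline, TurbomindEngineConfig
    from lmdeploy.vl import load_image

    # session_len is an assumed value; size it to your prompts and GPU memory.
    pipe = pipeline(
        model_path,
        backend_config=TurbomindEngineConfig(session_len=8192),
    )
    image = load_image(image_path)
    # A (text, image) tuple is treated as a single multimodal prompt.
    response = pipe((question, image))
    return response.text


# Example call (hypothetical image path):
# print(describe_image("../InternVL2-2B", "demo/food.jpg",
#                      "What cuisine does this dish belong to?"))
```

Creating the pipeline loads the model, so a real application would build it once and reuse it across requests rather than rebuilding it per question as this sketch does.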

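PS: I also ran into the problem mentioned in the tutorial:

Following the tutorial's fix is enough. There may also be bugs under async concurrency; I'll deal with that if it comes up~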

import os
import random

import numpy as np
import torch
import torch.backends.cudnn as cudnn
import gradio as gr

from utils import load_json, init_logger
from demo import ConversationalAgent, CustomTheme

FOOD_EXAMPLES = "demo/food_for_demo.json"
# MODEL_PATH = "/root/share/new_models/OpenGVLab/InternVL2-2B"
MODEL_PATH = '../InternVL2-2B'
# MODEL_PATH = "/root/xtuner/work_dirs/internvl_v2_internlm2_2b_lora_finetune_food/lr35_ep10"
OUTPUT_PATH = "./outputs"


def setup_seeds():
    # Fix all random seeds so that runs are reproducible.
    seed = 42
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    cudnn.benchmark = False
    cudnn.deterministic = True


def main():
    setup_seeds()
    # logging
    init_logger(OUTPUT_PATH)
    # food examples
    food_examples = load_json(FOOD_EXAMPLES)

    agent = ConversationalAgent(model_path=MODEL_PATH,
                                outputs_dir=OUTPUT_PATH)
    theme = CustomTheme()

    # The two adjacent string literals are concatenated into a single title.
    titles = [
        """<center><B><font face="Comic Sans MS" size=10>书生大模型实战营</font></B></center>"""  ## Kalam:wght@700
        """<center><B><font face="Courier" size=5>「进阶岛」InternVL 多模态模型部署微调实践</font></B></center>"""
    ]
    language = """Language: 中文 and English"""

    with gr.Blocks(theme) as demo_chatbot:
        for title in titles:
            gr.Markdown(title)
        # gr.Markdown(article)
        gr.Markdown(language)

        with gr.Row():
            # Left column: controls, image upload and generation settings.
            with gr.Column(scale=3):
                start_btn = gr.Button("Start Chat", variant="primary", interactive=True)
                clear_btn = gr.Button("Clear Context", interactive=False)
                image = gr.Image(type="pil", interactive=False)
                upload_btn = gr.Button("🖼️ Upload Image", interactive=False)

                with gr.Accordion("Generation Settings"):
                    top_p = gr.Slider(minimum=0, maximum=1, step=0.1,
                                      value=0.8,
                                      interactive=True,
                                      label='top-p value',
                                      visible=True)
                    temperature = gr.Slider(minimum=0, maximum=1.5, step=0.1,
                                            value=0.8,
                                            interactive=True,
                                            label='temperature',
                                            visible=True)

            # Right column: chat window, text input and example questions.
            with gr.Column(scale=7):
                chat_state = gr.State()
                chatbot = gr.Chatbot(label='InternVL2', height=800,
                                     avatar_images=((os.path.join(os.path.dirname(__file__), 'demo/user.png')),
                                                    (os.path.join(os.path.dirname(__file__), "demo/bot.png"))))
                text_input = gr.Textbox(label='User',
                                        placeholder="Please click the <Start Chat> button to start chat!",
                                        interactive=False)
                gr.Markdown("### 输入示例")

                def on_text_change(text):
                    return gr.update(interactive=True)

                text_input.change(fn=on_text_change, inputs=text_input, outputs=text_input)
                gr.Examples(
                    examples=[["图片中的食物通常属于哪个菜系?"],
                              ["如果让你简单形容一下品尝图片中的食物的滋味,你会描述它"],
                              ["去哪个地方游玩时应该品尝当地的特色美食图片中的食物?"],
                              ["食用图片中的食物时,一般它上菜或摆盘时的特点是?"]],
                    inputs=[text_input]
                )

        # Quick-pick food images, grouped by cuisine.
        with gr.Row():
            gr.Markdown("### 食物快捷栏")
        with gr.Row():
            example_xinjiang_food = gr.Examples(examples=food_examples["新疆菜"], inputs=image, label="新疆菜")
            example_sichuan_food = gr.Examples(examples=food_examples["川菜(四川,重庆)"], inputs=image, label="川菜(四川,重庆)")
            example_xibei_food = gr.Examples(examples=food_examples["西北菜 (陕西,甘肃等地)"], inputs=image, label="西北菜 (陕西,甘肃等地)")
        with gr.Row():
            example_guizhou_food = gr.Examples(examples=food_examples["黔菜 (贵州)"], inputs=image, label="黔菜 (贵州)")
            example_jiangsu_food = gr.Examples(examples=food_examples["苏菜(江苏)"], inputs=image, label="苏菜(江苏)")
            example_guangdong_food = gr.Examples(examples=food_examples["粤菜(广东等地)"], inputs=image, label="粤菜(广东等地)")
        with gr.Row():
            example_hunan_food = gr.Examples(examples=food_examples["湘菜(湖南)"], inputs=image, label="湘菜(湖南)")
            example_fujian_food = gr.Examples(examples=food_examples["闽菜(福建)"], inputs=image, label="闽菜(福建)")
            example_zhejiang_food = gr.Examples(examples=food_examples["浙菜(浙江)"], inputs=image, label="浙菜(浙江)")
        with gr.Row():
            example_dongbei_food = gr.Examples(examples=food_examples["东北菜 (黑龙江等地)"], inputs=image, label="东北菜 (黑龙江等地)")

        # Wire the buttons and the text box to the agent's callbacks.
        start_btn.click(agent.start_chat, [chat_state], [text_input, start_btn, clear_btn, image, upload_btn, chat_state])
        clear_btn.click(agent.restart_chat, [chat_state], [chatbot, text_input, start_btn, clear_btn, image, upload_btn, chat_state], queue=False)
        upload_btn.click(agent.upload_image, [image, chatbot, chat_state], [image, chatbot, chat_state])
        text_input.submit(
            agent.respond,
            inputs=[text_input, image, chatbot, top_p, temperature, chat_state],
            outputs=[text_input, image, chatbot, chat_state]
        )

    # Enable the request queue before launching: launch() blocks in a script,
    # so a queue() call placed after it would not take effect.
    demo_chatbot.queue()
    demo_chatbot.launch(share=True, server_name="127.0.0.1", server_port=1096, allowed_paths=['./'])


if __name__ == "__main__":
    main()

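I pasted in the camp's promotional photo and asked about it, haha, and the result looks pretty good:

On domain-specific questions it doesn't do so well:

That's what the fine-tuning later is for~

Fine-tuning InternVL2-2B with XTuner

A quick recap of XTuner's strengths:

Environment

Just follow the tutorial here. I recommend installing torch first, otherwise you may hit odd version conflicts: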

conda create --name xtuner python=3.10 -y

conda activate xtuner

pip install torch==2.4.1 torchvision==0.19.1 torchaudio==2.4.1 --index-url https://download.pytorch.org/whl/cu121

pip install -U 'xtuner[deepspeed]' timm==1.0.9

pip install transformers==4.39.0

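Data

The raw data has to be downloaded from Hugging Face. Request access to the dataset on the website, create a token with read permission, then download with the commands below. Remember to change --local-dir to your own save path.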

huggingface-cli login

huggingface-cli download --repo-type dataset --resume-download lyan62/FoodieQA --local-dir ./huggingface/FoodieQA --local-dir-use-symlinks False

Download example:

The raw data looks like this:

{"question": "图片中的食物通常属于哪个菜系?","choices": ["京菜","徽菜","新疆菜","桂菜"],"answer": "2","question_type": "cuisine_type","food_name": "烤羊肉串","question_id": "vqa-0","food_meta": {"main_ingredient": ["肉","羊"],"id": 217,"food_name": "烤羊肉串","food_type": "新疆菜","food_location": "餐馆","food_file": "images/14456664_217_IMG_3854.jpeg"},"question_en": "The food in the picture usually belongs to which cuisine?","choices_en": ["Beijing cuisine","Anhui cuisine","Xinjiang cuisine","Guizhou cuisine"]}

Following the tutorial, the data has to be converted into the format XTuner expects. The script:

import json

input_path = "./huggingface/FoodieQA/FoodieQA/sivqa_tidy.json"  # location of sivqa_tidy.json
output_path = "./huggingface/FoodieQA/FoodieQA/sivqa_llava.json"  # output file location

with open(input_path, 'r', encoding='utf-8') as f:
    foodqa = json.load(f)

llava_format = []
for data in foodqa:
    llava_format.append({
        "image": data['food_meta']['food_file'],
        "conversations": [
            {
                "from": "human",
                "value": data['question'] + "\n<image>"
            },
            {
                "from": "gpt",
                # 'answer' is the index of the correct entry in 'choices'.
                "value": data['choices'][int(data['answer'])] + ",图中的菜是" + data['food_meta']['food_name']
            }
        ]
    })

with open(output_path, 'w', encoding='utf-8') as f:
    json.dump(llava_format, f, indent=4, ensure_ascii=False)

After processing, a record looks roughly like this:

{"image": "images/14456664_217_IMG_3854.jpeg","conversations": [{"from": "human","value": "图片中的食物通常属于哪个菜系?\n<image>"},{"from": "gpt","value": "新疆菜,图中的菜是烤羊肉串"}]}
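Before training it can be worth sanity-checking the converted file. The following is a small sketch of such a check, assuming the record layout shown above; `validate_llava_records` is a hypothetical helper, not part of XTuner.

```python
import os


def validate_llava_records(records, image_root=None):
    """Return a list of human-readable problems found in LLaVA-style records."""
    problems = []
    for i, rec in enumerate(records):
        if "image" not in rec or "conversations" not in rec:
            problems.append(f"record {i}: missing 'image' or 'conversations'")
            continue
        turns = rec["conversations"]
        if (len(turns) < 2 or turns[0].get("from") != "human"
                or turns[1].get("from") != "gpt"):
            problems.append(f"record {i}: expected a human turn then a gpt turn")
            continue
        if "<image>" not in turns[0].get("value", ""):
            problems.append(f"record {i}: human turn has no <image> placeholder")
        # Only check files on disk when an image root is given.
        if image_root is not None:
            image_path = os.path.join(image_root, rec["image"])
            if not os.path.isfile(image_path):
                problems.append(f"record {i}: image not found: {image_path}")
    return problems


sample = {
    "image": "images/14456664_217_IMG_3854.jpeg",
    "conversations": [
        {"from": "human", "value": "图片中的食物通常属于哪个菜系?\n<image>"},
        {"from": "gpt", "value": "新疆菜,图中的菜是烤羊肉串"},
    ],
}
print(validate_llava_records([sample]))
```

Passing `image_root` (for example the same directory used as `data_root` in the config) additionally checks that every referenced image exists.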

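Configuration file

With the data ready, fine-tuning can start. It's mostly a matter of editing the config file; XTuner handles the rest:

Main configuration options

- `path`: path of the model to fine-tune; no change needed in the InternStudio environment.

- `data_root`: directory containing the dataset.

- `data_path`: path of the training data file.

- `image_folder`: root directory of the training images.

- `prompt_template`: the chat template, system prompt, etc. used during training. Use the one that matches the model; no change needed here.

- `max_length`: maximum number of tokens per training sample.

- `batch_size`: training batch size per device; adjust it to your GPU memory.

- `accumulative_counts`: number of gradient accumulation steps, used to simulate a larger batch_size and improve training stability when GPU memory is limited.

- `dataloader_num_workers`: number of worker subprocesses used for data loading.

- `max_epochs`: number of training epochs.

- `optim_type`: optimizer type.

- `lr`: learning rate.

- `betas`: beta1 and beta2 of the Adam optimizer.

- `weight_decay`: weight decay, used to reduce overfitting.

- `max_norm`: maximum gradient norm for gradient clipping.

- `warmup_ratio`: warmup ratio; the learning rate ramps up gradually over this fraction of training at the start.

- `save_steps`: save a checkpoint every this many steps.

- `save_total_limit`: maximum number of checkpoints to keep; -1 means unlimited.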
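How these options interact is easiest to see with numbers. The sketch below is back-of-the-envelope arithmetic, not XTuner code. `num_samples=256` is a hypothetical dataset size chosen because it is consistent with the `iter_640.pth` checkpoint that appears later after 10 epochs at batch size 4, and the warmup rounding is an assumption about how the epoch-based schedule is converted to iterations.

```python
import math


def training_schedule(num_samples, batch_size, accumulative_counts,
                      max_epochs, warmup_ratio, num_gpus=1):
    """Derive iteration counts from the main config options."""
    iters_per_epoch = math.ceil(num_samples / (batch_size * num_gpus))
    total_iters = iters_per_epoch * max_epochs
    return {
        # Samples contributing to each optimizer update.
        "effective_batch_size": batch_size * accumulative_counts * num_gpus,
        "iters_per_epoch": iters_per_epoch,
        "total_iters": total_iters,
        # Gradients are accumulated, so weights update less often.
        "optimizer_updates": math.ceil(total_iters / accumulative_counts),
        # Assumed rounding: truncate the fractional iteration.
        "warmup_iters": int(warmup_ratio * max_epochs * iters_per_epoch),
    }


schedule = training_schedule(num_samples=256, batch_size=4,
                             accumulative_counts=2, max_epochs=10,
                             warmup_ratio=0.03)
for key, value in schedule.items():
    print(f"{key}: {value}")
```

With `save_steps = 64`, these assumed numbers would give one checkpoint per epoch. Halving `batch_size` to fit a smaller GPU while doubling `accumulative_counts` keeps the effective batch size the same.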

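LoRA-related parameters:

- `r`: rank of the low-rank matrices; it sets their inner dimension.

- `lora_alpha`: scaling factor applied to the low-rank update.

- `lora_dropout`: dropout probability, to prevent overfitting.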
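A quick calculation shows what `r` and `lora_alpha` mean in practice. In standard LoRA the update is scaled by `lora_alpha / r`, and a weight of shape `d_out x d_in` gets two trainable matrices of shapes `d_out x r` and `r x d_in`. The sketch uses the config's `r=128` and `lora_alpha=256`; the 2048 hidden size is only an illustrative assumption.

```python
def lora_stats(d_in, d_out, r, lora_alpha):
    """Scaling factor and trainable-parameter count for one LoRA-adapted layer."""
    full_params = d_in * d_out
    lora_params = r * (d_in + d_out)  # A is r x d_in, B is d_out x r
    return {
        "scaling": lora_alpha / r,
        "lora_params": lora_params,
        "full_params": full_params,
        "trainable_fraction": lora_params / full_params,
    }


stats = lora_stats(d_in=2048, d_out=2048, r=128, lora_alpha=256)
print(stats)
```

A larger `r` gives the adapter more capacity at the cost of more trainable parameters, while keeping `lora_alpha / r` fixed keeps the strength of the update comparable across ranks.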

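Run xtuner list-cfg to see the configs currently available for fine-tuning and find the reference config:

Make a copy:

xtuner copy-cfg internvl_v2_internlm2_2b_lora_finetune ./

Mainly change the model and data paths, and adjust batch_size and the learning rate to your GPU. On the dev machine almost nothing needs changing: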

# Copyright (c) OpenMMLab. All rights reserved.
from mmengine.hooks import (CheckpointHook, DistSamplerSeedHook, IterTimerHook,
                            LoggerHook, ParamSchedulerHook)
from mmengine.optim import AmpOptimWrapper, CosineAnnealingLR, LinearLR
from peft import LoraConfig
from torch.optim import AdamW
from transformers import AutoTokenizer

from xtuner.dataset import InternVL_V1_5_Dataset
from xtuner.dataset.collate_fns import default_collate_fn
from xtuner.dataset.samplers import LengthGroupedSampler
from xtuner.engine.hooks import DatasetInfoHook
from xtuner.engine.runner import TrainLoop
from xtuner.model import InternVL_V1_5
from xtuner.utils import PROMPT_TEMPLATE

#######################################################################
#                          PART 1  Settings                           #
#######################################################################
# Model
path = '/root/share/new_models/OpenGVLab/InternVL2-2B'

# Data
data_root = '/root/share/datasets/FoodieQA/'  # your data path
data_path = data_root + 'sivqa_llava.json'
image_folder = data_root  # your image folder path
prompt_template = PROMPT_TEMPLATE.internlm2_chat
max_length = 8192

# Scheduler & Optimizer
batch_size = 4  # per_device
accumulative_counts = 2
dataloader_num_workers = 4
max_epochs = 10
optim_type = AdamW
# official 1024 -> 4e-5
# lr = 1e-6
lr = 3e-5
betas = (0.9, 0.999)
weight_decay = 0.05
max_norm = 1  # grad clip
warmup_ratio = 0.03

# Save
save_steps = 64
save_total_limit = -1  # Maximum checkpoints to keep (-1 means unlimited)

#######################################################################
#            PART 2  Model & Tokenizer & Image Processor              #
#######################################################################
model = dict(
    type=InternVL_V1_5,
    model_path=path,
    freeze_llm=True,
    freeze_visual_encoder=True,
    # comment the following lines if you don't want to use Lora in llm
    llm_lora=dict(
        type=LoraConfig,
        r=128,
        lora_alpha=256,
        lora_dropout=0.05,
        target_modules=None,
        task_type='CAUSAL_LM'),
    # uncomment the following lines if you want to use Lora in visual encoder # noqa
    # visual_encoder_lora=dict(
    #     type=LoraConfig, r=64, lora_alpha=16, lora_dropout=0.05,
    #     target_modules=['attn.qkv', 'attn.proj', 'mlp.fc1', 'mlp.fc2'])
)

#######################################################################
#                      PART 3  Dataset & Dataloader                   #
#######################################################################
llava_dataset = dict(
    type=InternVL_V1_5_Dataset,
    model_path=path,
    data_paths=data_path,
    image_folders=image_folder,
    template=prompt_template,
    max_length=max_length)

train_dataloader = dict(
    batch_size=batch_size,
    num_workers=dataloader_num_workers,
    dataset=llava_dataset,
    sampler=dict(
        type=LengthGroupedSampler,
        length_property='modality_length',
        per_device_batch_size=batch_size * accumulative_counts),
    collate_fn=dict(type=default_collate_fn))

#######################################################################
#                    PART 4  Scheduler & Optimizer                    #
#######################################################################
# optimizer
optim_wrapper = dict(
    type=AmpOptimWrapper,
    optimizer=dict(
        type=optim_type, lr=lr, betas=betas, weight_decay=weight_decay),
    clip_grad=dict(max_norm=max_norm, error_if_nonfinite=False),
    accumulative_counts=accumulative_counts,
    loss_scale='dynamic',
    dtype='float16')

# learning policy
# More information: https://github.com/open-mmlab/mmengine/blob/main/docs/en/tutorials/param_scheduler.md  # noqa: E501
param_scheduler = [
    dict(
        type=LinearLR,
        start_factor=1e-5,
        by_epoch=True,
        begin=0,
        end=warmup_ratio * max_epochs,
        convert_to_iter_based=True),
    dict(
        type=CosineAnnealingLR,
        eta_min=0.0,
        by_epoch=True,
        begin=warmup_ratio * max_epochs,
        end=max_epochs,
        convert_to_iter_based=True)
]

# train, val, test setting
train_cfg = dict(type=TrainLoop, max_epochs=max_epochs)

#######################################################################
#                           PART 5  Runtime                           #
#######################################################################
# Log the dialogue periodically during the training process, optional
tokenizer = dict(
    type=AutoTokenizer.from_pretrained,
    pretrained_model_name_or_path=path,
    trust_remote_code=True)

custom_hooks = [
    dict(type=DatasetInfoHook, tokenizer=tokenizer),
]

# configure default hooks
default_hooks = dict(
    # record the time of every iteration.
    timer=dict(type=IterTimerHook),
    # print log every 10 iterations.
    logger=dict(type=LoggerHook, log_metric_by_epoch=False, interval=10),
    # enable the parameter scheduler.
    param_scheduler=dict(type=ParamSchedulerHook),
    # save checkpoint per `save_steps`.
    checkpoint=dict(
        type=CheckpointHook,
        save_optimizer=False,
        by_epoch=False,
        interval=save_steps,
        max_keep_ckpts=save_total_limit),
    # set sampler seed in distributed environment.
    sampler_seed=dict(type=DistSamplerSeedHook),
)

# configure environment
env_cfg = dict(
    # whether to enable cudnn benchmark
    cudnn_benchmark=False,
    # set multi process parameters
    mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),
    # set distributed parameters
    dist_cfg=dict(backend='nccl'),
)

# set visualizer
visualizer = None

# set log level
log_level = 'INFO'

# load from which checkpoint
load_from = None

# whether to resume training from the loaded checkpoint
resume = False

# Defaults to use random seed and disable `deterministic`
randomness = dict(seed=None, deterministic=False)

# set log processor
log_processor = dict(by_epoch=False)

Training & debugging

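At first I planned to do this on my own machine, but I hit a bug I just couldn't fix:

RuntimeError: "_amp_foreach_non_finite_check_and_unscale_cuda" not implemented for 'BFloat16'

There are some suggestions online, for example:

- https://github.com/pytorch/pytorch/issues/127176, but it didn't work for me

There's always a way, so I tried the following two options:

- Build a clean container on the server with docker, to see whether the messy environment setup was the cause

- Use the dev machine provided by the InternLM camp; having finished Basics Island, I've unlocked the 50% A100 quota, hehe

Sure enough, both options work, haha. The first ran on an A30, where I could only set batch_size=2; the second ran on 50% of an A100, where batch_size=4 is fine, so I went with the second~

Training command (this works on the dev machine with one GPU):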

xtuner train /root/homework/L2_internVL/InternVL2-Tutorial/xtuner_config/internvl_v2_internlm2_2b_lora_finetune_food.py --deepspeed deepspeed_zero2

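With multiple GPUs you can specify them like this (in my local environment the single-GPU command reported a problem while two GPUs worked fine, oddly enough):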

CUDA_VISIBLE_DEVICES=0,1 NPROC_PER_NODE=2 xtuner train /root/homework/L2_internVL/InternVL2-Tutorial/xtuner_config/internvl_v2_internlm2_2b_lora_finetune_food.py --deepspeed deepspeed_zero2

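By the way, I also hit this error earlier when running locally:

RuntimeError: The server socket has failed to listen on any local network address. The server socket has failed to bind to [::]:29500 (errno: 98 - Address already in use). The server socket has failed to bind to 0.0.0.0:29500 (errno: 98 - Address already in use).

I checked the GitHub issues and found similar reports. Surprisingly, it has to be fixed by editing the source code rather than by setting the master port on the command line. That may change later; for the temporary workaround see: https://github.com/InternLM/xtuner/issues/467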
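Whichever workaround you apply, it helps to confirm first that port 29500 really is taken and to pick a replacement that is free. This is a generic standard-library sketch, unrelated to XTuner's own code; the helper names are made up.

```python
import socket


def is_port_free(port, host="127.0.0.1"):
    """Return True if we can bind to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            return False
        return True


def find_free_port(host="127.0.0.1"):
    """Ask the OS for a currently unused port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind((host, 0))  # port 0 lets the OS choose
        return s.getsockname()[1]


print("29500 free:", is_port_free(29500))
print("suggested port:", find_free_port())
```

The suggested port is only free at the moment of the check, so another process could still grab it before training starts; a leftover training process holding 29500 is also worth ruling out.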

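With that sorted out, training starts:

Remember to start a screen session beforehand. Judging by the ETA it will take quite a while; here is the dev machine's current resource usage:

Model conversion and merging

Main command: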

python xtuner/configs/internvl/v1_5/convert_to_official.py xtuner/configs/internvl/v2/internvl_v2_internlm2_2b_lora_finetune_food.py ./work_dirs/internvl_v2_internlm2_2b_lora_finetune_food/iter_640.pth ./work_dirs/internvl_v2_internlm2_2b_lora_finetune_food/lr35_ep10/
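The command above hard-codes `iter_640.pth`. If you are not sure which checkpoint is the latest, a small helper can pick it out of the work directory. This is a sketch that assumes checkpoints follow the `iter_<N>.pth` naming seen above; `latest_checkpoint` is a hypothetical helper.

```python
import re
from pathlib import Path


def latest_checkpoint(work_dir):
    """Return the iter_<N>.pth entry with the largest N, or None if absent."""
    pattern = re.compile(r"iter_(\d+)\.pth$")
    best = None
    for path in Path(work_dir).iterdir():
        match = pattern.match(path.name)
        if match is None:
            continue
        iteration = int(match.group(1))
        # Compare numerically: as strings, "iter_64" would sort after "iter_128".
        if best is None or iteration > best[0]:
            best = (iteration, path)
    return best[1] if best else None


# Example (path taken from the command above):
# print(latest_checkpoint("./work_dirs/internvl_v2_internlm2_2b_lora_finetune_food"))
```

The helper matches both files and directories, since a checkpoint saved with DeepSpeed may be a directory rather than a single file.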

These are the converted model files:

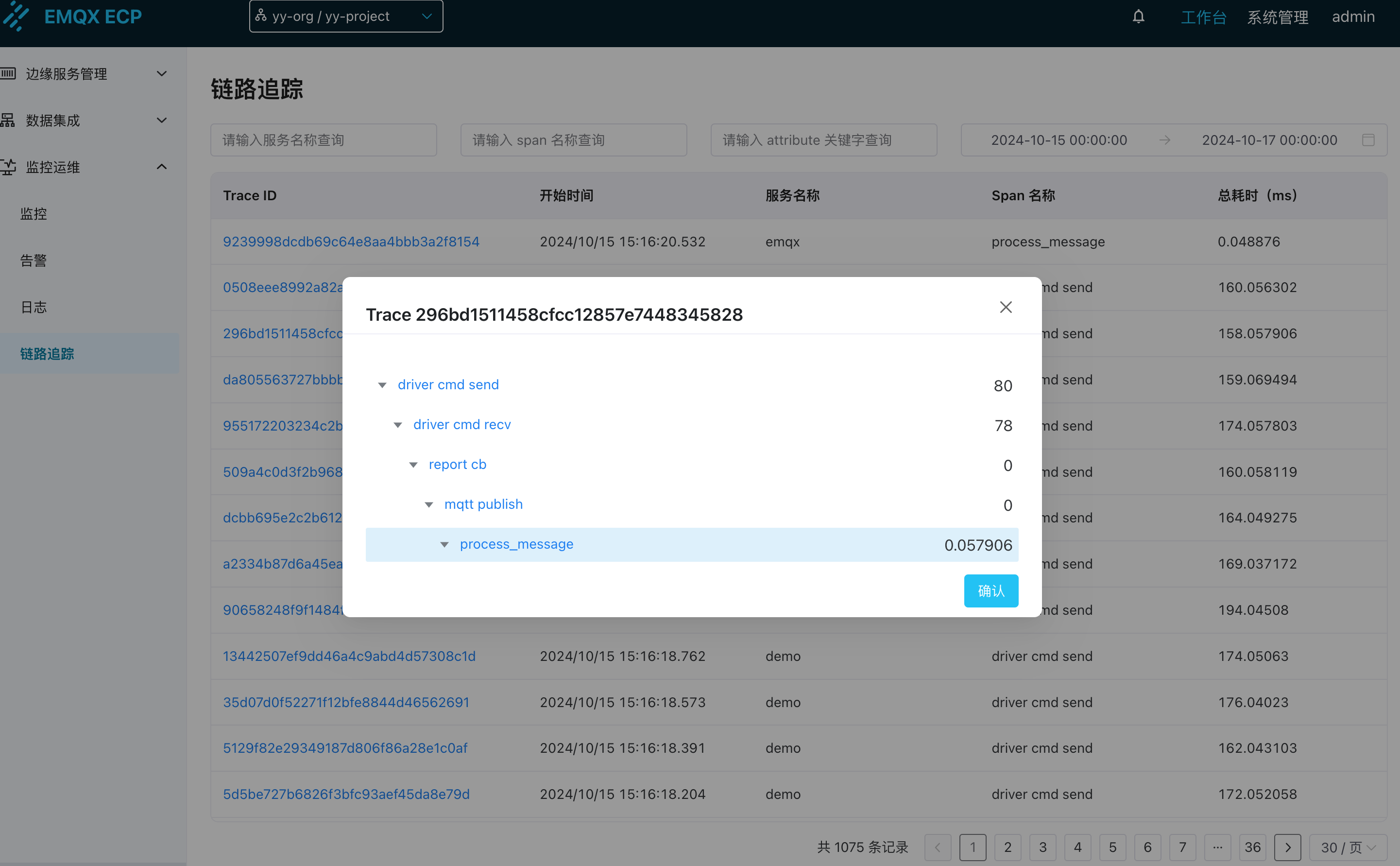

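Model testing

Back to the LMDeploy part: compare the model before and after fine-tuning, which only requires changing the model path in demo.py. The comparison follows. Before fine-tuning:

It's just making things up. This is Kung Pao chicken, hehe~

After fine-tuning:

The result is quite good. Next you can build a dataset on a topic you care about and test other vertical-domain cases~

Congratulations to me on completing this course!

References

The references from the tutorial are kept here for later use~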