- 🍨 This post is a study log written for the 🔗365-Day Deep Learning Training Camp

- 🍖 Original author: K同学啊

Import the basic packages:

from tensorflow import keras

from tensorflow.keras import layers,models

import os, PIL, pathlib

import matplotlib.pyplot as plt

import tensorflow as tf

import numpy as np

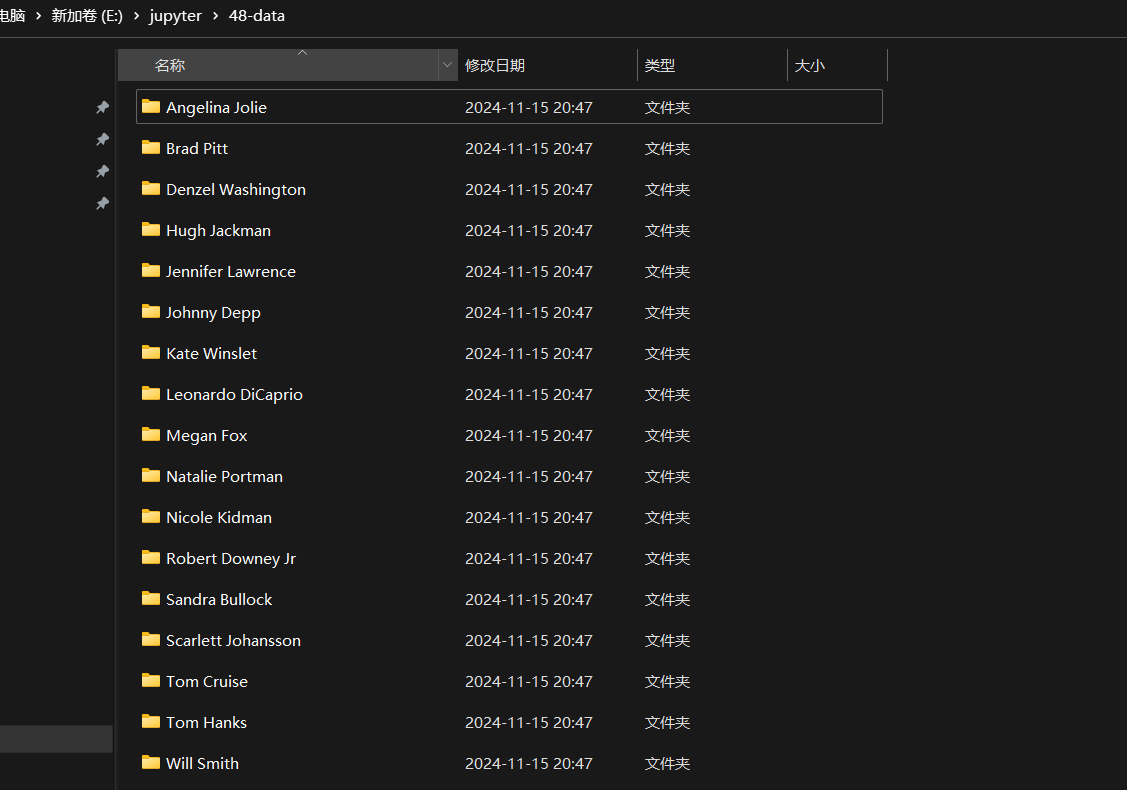

Read the local folders of Hollywood-star images to build the dataset.

data_dir = "./48-data/"

data_dir = pathlib.Path(data_dir)

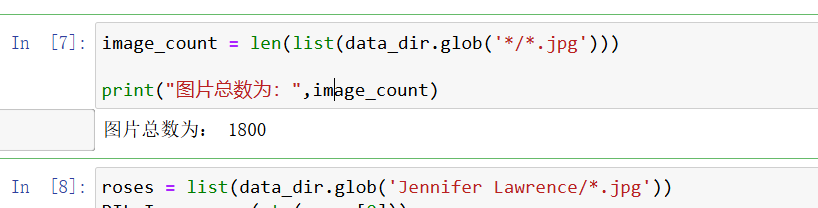

Print the number of image files. There are 1,800 images in total.

image_count = len(list(data_dir.glob('*/*.jpg')))

print("Total number of images:", image_count)
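The '*/*.jpg' pattern only matches files that sit exactly one folder deep, which is the <class folder>/<image>.jpg layout the dataset depends on. Here is a minimal sketch of a per-class count. The helper count_images_per_class is hypothetical, and it runs on a throwaway directory with made-up folder names rather than the real dataset:

```python
import pathlib
import tempfile
from collections import Counter


def count_images_per_class(data_dir):
    """Count .jpg files one folder level below data_dir, grouped by folder name."""
    data_dir = pathlib.Path(data_dir)
    # '*/*.jpg' matches '<class folder>/<file>.jpg' but not files at the top level
    return Counter(path.parent.name for path in data_dir.glob('*/*.jpg'))


# Build a tiny throwaway tree with hypothetical class folders
with tempfile.TemporaryDirectory() as tmp:
    root = pathlib.Path(tmp)
    for class_name, n_files in [("Star A", 3), ("Star B", 2)]:
        (root / class_name).mkdir()
        for i in range(n_files):
            (root / class_name / f"{i:03d}.jpg").touch()
    (root / "stray.jpg").touch()  # top-level file, ignored by the pattern
    counts = count_images_per_class(root)

print(counts)
print("Total:", sum(counts.values()))
```

pathlib's pattern matching is case-sensitive for POSIX paths. On Linux, for example, files ending in .JPG or .jpeg would be missed both by this helper and by the count above.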

Build the training set. 10% of the images are held out for validation.

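batch_size = 32   # assumed value: the post never shows batch_size, img_height or img_width

img_height = 224  # assumed value

img_width = 224   # assumed value

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.1,
    subset="training",
    label_mode="categorical",  # one-hot labels, matching CategoricalCrossentropy below
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

class_names = train_ds.class_names  # subfolder names, used as the class labels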
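With label_mode="categorical", each label comes back as a one-hot vector. Each star's class index is assigned from the subfolder names in sorted (alphanumeric) order. The numpy sketch below imitates that mapping. Apart from Jennifer Lawrence, which appears in the dataset path used later, the folder names are hypothetical:

```python
import numpy as np

# Hypothetical subfolder names; the real ones are the star folders under ./48-data/
folders = ["Star B", "Jennifer Lawrence", "Star A"]

# Class indices follow the sorted order of the folder names
class_names = sorted(folders)
label_index = {name: i for i, name in enumerate(class_names)}

# label_mode="categorical" turns each integer index into a one-hot float vector
int_labels = np.array([label_index["Star A"], label_index["Jennifer Lawrence"]])
one_hot = np.eye(len(class_names), dtype=np.float32)[int_labels]

print(class_names)
print(one_hot)
```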

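Build the validation set. It uses the same seed and split as the training set, so the two subsets do not overlap.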

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.1,
    subset="validation",
    label_mode="categorical",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)
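Both calls use the same seed and validation_split, so the two subsets are complementary. The sketch below is a quick check of their sizes. It assumes the validation count is rounded down to a whole number of images, and it assumes a batch size of 32 because the post does not show that value:

```python
import math

total_images = 1800      # image count reported above
validation_split = 0.1
batch_size = 32          # assumed value; not shown in the post

# Held-out validation images, rounded down to an integer
num_val = int(validation_split * total_images)
num_train = total_images - num_val

# One training step processes one batch; the last batch of an epoch may be smaller
steps_per_epoch = math.ceil(num_train / batch_size)

print(num_train, num_val, steps_per_epoch)
```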

Build the network model:

model = models.Sequential([
    layers.experimental.preprocessing.Rescaling(1./255, input_shape=(img_height, img_width, 3)),  # scale pixels to [0, 1]
    layers.Conv2D(16, (3, 3), activation='relu'),   # convolutional layer 1, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                # pooling layer 1, 2x2 downsampling
    layers.Conv2D(32, (3, 3), activation='relu'),   # convolutional layer 2, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                # pooling layer 2, 2x2 downsampling
    layers.Dropout(0.5),
    layers.Conv2D(64, (3, 3), activation='relu'),   # convolutional layer 3, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                # pooling layer 3, 2x2 downsampling
    layers.Dropout(0.5),
    layers.Conv2D(128, (3, 3), activation='relu'),  # convolutional layer 4, 3x3 kernel
    layers.Dropout(0.5),
    layers.Flatten(),                               # Flatten layer, connects the conv layers to the dense layers
    layers.Dense(128, activation='relu'),           # fully connected layer, further feature extraction
    layers.Dense(len(class_names))                  # output layer, one raw logit per class (no softmax)
])

model.summary()  # print the network structure
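You can check the output shapes and parameter counts that model.summary() reports by hand. With the default 'valid' padding, a 3x3 convolution shrinks each side by 2, and 2x2 average pooling halves it (rounding down). Below is a pure-Python sketch that assumes 224x224 RGB inputs and 17 classes. Both numbers are assumptions, so substitute your own img_height and len(class_names). The helper trace_model is hypothetical:

```python
def conv_out(size, kernel=3):
    # Conv2D default padding='valid': each side shrinks by kernel - 1
    return size - kernel + 1


def pool_out(size, pool=2):
    # AveragePooling2D default: strides equal to pool_size, padding='valid'
    return (size - pool) // pool + 1


def trace_model(img_size, num_classes, channels=3):
    """Return (layer, output shape, parameter count) rows for the CNN above.

    Rescaling and Dropout layers are skipped: they change neither shape nor parameter count.
    """
    size, in_ch, rows = img_size, channels, []
    for i, out_ch in enumerate([16, 32, 64, 128]):
        size = conv_out(size)
        # 3x3 kernel weights per (input channel, filter) pair, plus one bias per filter
        rows.append((f"conv{i + 1}", (size, size, out_ch), 3 * 3 * in_ch * out_ch + out_ch))
        in_ch = out_ch
        if i < 3:  # the fourth conv layer is not followed by pooling
            size = pool_out(size)
            rows.append((f"pool{i + 1}", (size, size, out_ch), 0))
    flat = size * size * in_ch
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense", (128,), flat * 128 + 128))  # weights + biases
    rows.append(("output", (num_classes,), 128 * num_classes + num_classes))
    return rows


rows = trace_model(img_size=224, num_classes=17)  # 224 and 17 are assumptions
for name, shape, params in rows:
    print(f"{name:8s} {str(shape):16s} {params:>10,}")
print("total params:", sum(p for _, _, p in rows))
```

Under these assumptions, almost all of the roughly 9.5 million parameters sit in the first Dense layer. Flattening the final 24x24x128 feature map produces 73,728 inputs to that layer.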

Set up the learning-rate schedule and compile the network model:

initial_learning_rate = 1e-4

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate,
    decay_steps=60,   # Pay attention!!! This counts steps (batches), not epochs
    decay_rate=0.96,  # after each decay, lr becomes decay_rate * lr
    staircase=True)

optimizer = tf.keras.optimizers.Adam(learning_rate=lr_schedule)  # feed the exponentially decaying learning rate into the optimizer

model.compile(optimizer=optimizer,
              loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])
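ExponentialDecay computes initial_learning_rate * decay_rate ** (step / decay_steps). With staircase=True the exponent is rounded down to an integer, so the rate drops in discrete jumps. The step counter advances once per batch, which is why the comment stresses steps rather than epochs. Below is a plain-Python re-implementation of that formula. It assumes 51 steps per epoch, which corresponds to 1,620 training images at an assumed batch size of 32:

```python
import math


def exponential_decay(step, initial_lr=1e-4, decay_steps=60, decay_rate=0.96, staircase=True):
    """Plain-Python version of the documented ExponentialDecay formula."""
    exponent = step / decay_steps
    if staircase:
        exponent = math.floor(exponent)  # drop the rate in discrete jumps every decay_steps steps
    return initial_lr * decay_rate ** exponent


steps_per_epoch = 51  # assumed: ceil(1620 training images / batch size 32)
for epoch in [0, 1, 2, 10, 50]:
    step = epoch * steps_per_epoch  # optimizer steps completed before this epoch starts
    print(f"epoch {epoch:3d}  step {step:5d}  lr = {exponential_decay(step):.3e}")
```

With these numbers the first decay happens partway through the second epoch (step 60). If the schedule counted epochs instead, the rate would barely move over 100 epochs.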

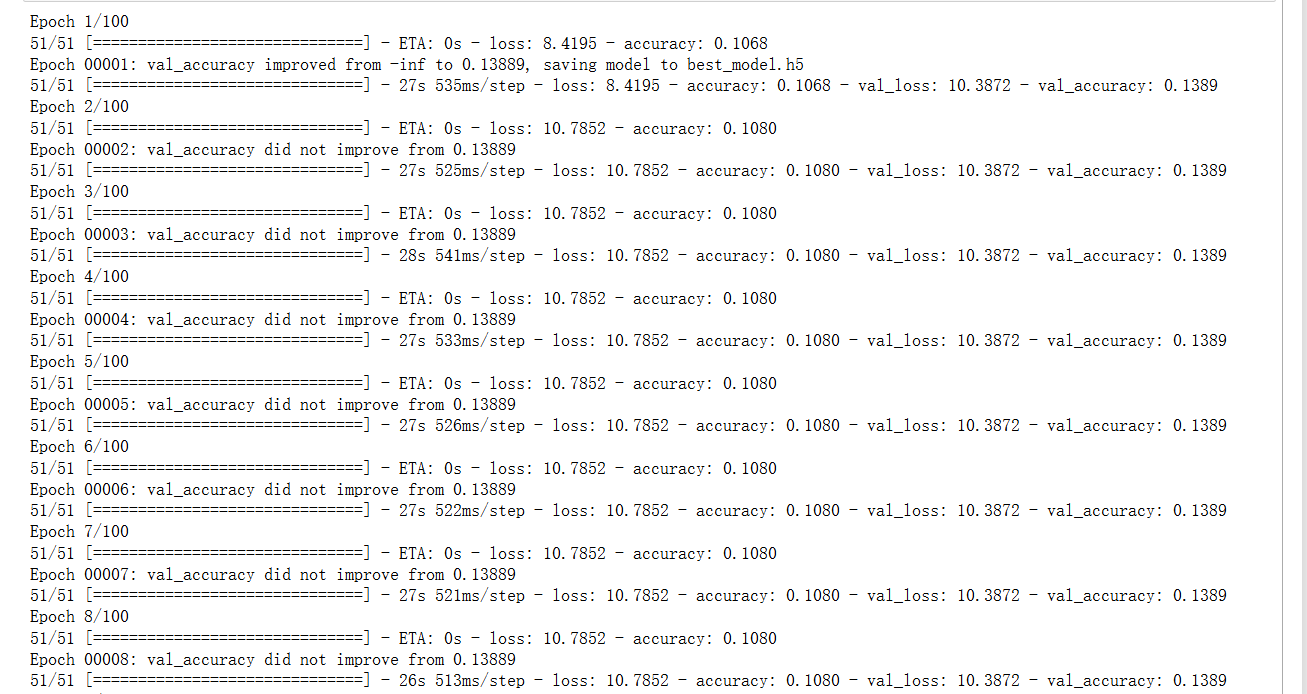

Start training

Train for 100 epochs and save the best model weights.

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

epochs = 100

checkpointer = ModelCheckpoint('best_model.h5',  # save the best model weights
                               monitor='val_accuracy',
                               verbose=1,
                               save_best_only=True,
                               save_weights_only=True)

earlystopper = EarlyStopping(monitor='val_accuracy',  # early stopping: stop if val_accuracy fails to
                             min_delta=0.001,         # improve by more than 0.001 ...
                             patience=20,             # ... for 20 consecutive epochs
                             verbose=1)
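EarlyStopping counts an epoch as an improvement only when val_accuracy beats the best value so far by more than min_delta. After patience epochs without an improvement, it stops training. The function below is a simplified, hypothetical model of that rule, not Keras's actual source. It runs on made-up accuracy values with patience=3 so the effect is easy to see:

```python
import math


def simulate_early_stopping(val_acc, min_delta=0.001, patience=20):
    """Simplified model of EarlyStopping(monitor='val_accuracy') in max mode.

    Returns (stop_epoch, best_value); stop_epoch is None if training never stops early.
    """
    best, wait = -math.inf, 0
    for epoch, current in enumerate(val_acc):
        # Only counts as an improvement if it beats the best by more than min_delta
        if current - min_delta > best:
            best, wait = current, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best
    return None, best


# Made-up val_accuracy values, purely to exercise the rule
history_values = [0.50, 0.60, 0.6005, 0.61, 0.6105, 0.6095, 0.61, 0.62]
print(simulate_early_stopping(history_values, patience=3))
```

EarlyStopping does not restore the best weights by default (restore_best_weights=False). After training stops, the model holds the last epoch's weights. The best weights are the ones ModelCheckpoint wrote to best_model.h5.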

Launch the training run:

history = model.fit(train_ds,validation_data=val_ds,epochs=epochs,callbacks=[checkpointer, earlystopper])

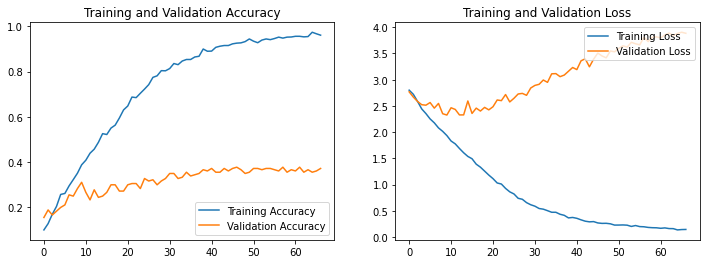

Plot the accuracy and loss of the training and validation sets.
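The plotting code itself is not included in the post. Below is a minimal matplotlib sketch. It assumes the history object returned by model.fit above, whose history dict holds accuracy, val_accuracy, loss and val_loss lists when metrics=['accuracy'] is used. plot_history is a hypothetical helper, and the demo numbers are dummy inputs, not training results:

```python
import matplotlib.pyplot as plt


def plot_history(history_dict):
    """Plot training/validation accuracy and loss from a Keras History.history dict."""
    epochs_range = range(1, len(history_dict["loss"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(12, 4))

    ax_acc.plot(epochs_range, history_dict["accuracy"], label="Training Accuracy")
    ax_acc.plot(epochs_range, history_dict["val_accuracy"], label="Validation Accuracy")
    ax_acc.set_title("Training and Validation Accuracy")
    ax_acc.set_xlabel("Epoch")
    ax_acc.legend(loc="lower right")

    ax_loss.plot(epochs_range, history_dict["loss"], label="Training Loss")
    ax_loss.plot(epochs_range, history_dict["val_loss"], label="Validation Loss")
    ax_loss.set_title("Training and Validation Loss")
    ax_loss.set_xlabel("Epoch")
    ax_loss.legend(loc="upper right")
    return fig


# Dummy values only to exercise the function; pass history.history in practice
demo = {"accuracy": [0.2, 0.4, 0.5], "val_accuracy": [0.15, 0.3, 0.35],
        "loss": [2.5, 1.8, 1.4], "val_loss": [2.7, 2.2, 2.0]}
fig = plot_history(demo)
```

In the notebook, call plot_history(history.history) and then plt.show(). If early stopping fires, the curves cover fewer than 100 epochs. That is why the x-axis is derived from the length of the loss list.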

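Then load the best saved weights and predict the class of a single image:

from PIL import Image

import numpy as np

model.load_weights('best_model.h5')  # restore the best checkpoint written by ModelCheckpoint

img = Image.open("./48-data/Jennifer Lawrence/003_963a3627.jpg").convert("RGB")  # ensure 3 channels

image = tf.image.resize(np.array(img), [img_height, img_width])  # resize to the training input size

img_array = tf.expand_dims(image, 0)  # add a batch dimension: (1, height, width, 3)

predictions = model.predict(img_array)

print("Prediction:", class_names[np.argmax(predictions)])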
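The output layer has no softmax because the loss uses from_logits=True, so model.predict returns raw logits. np.argmax picks the same class either way, but the scores need a softmax before you can read them as probabilities. Below is a numpy sketch with a hypothetical top_k helper and made-up logits standing in for predictions. Inside TensorFlow, tf.nn.softmax would produce the same probabilities:

```python
import numpy as np


def softmax(logits):
    # Subtract the row max before exponentiating for numerical stability
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


def top_k(logits, class_names, k=3):
    """Return the k most likely (class name, probability) pairs for a single image."""
    probs = softmax(np.asarray(logits, dtype=np.float64))[0]
    order = np.argsort(probs)[::-1][:k]  # indices sorted by descending probability
    return [(class_names[i], float(probs[i])) for i in order]


names = ["Star A", "Star B", "Star C"]      # hypothetical class names
fake_logits = np.array([[2.0, 0.5, -1.0]])  # stand-in for model.predict(img_array)
result = top_k(fake_logits, names, k=2)
print(result)
```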