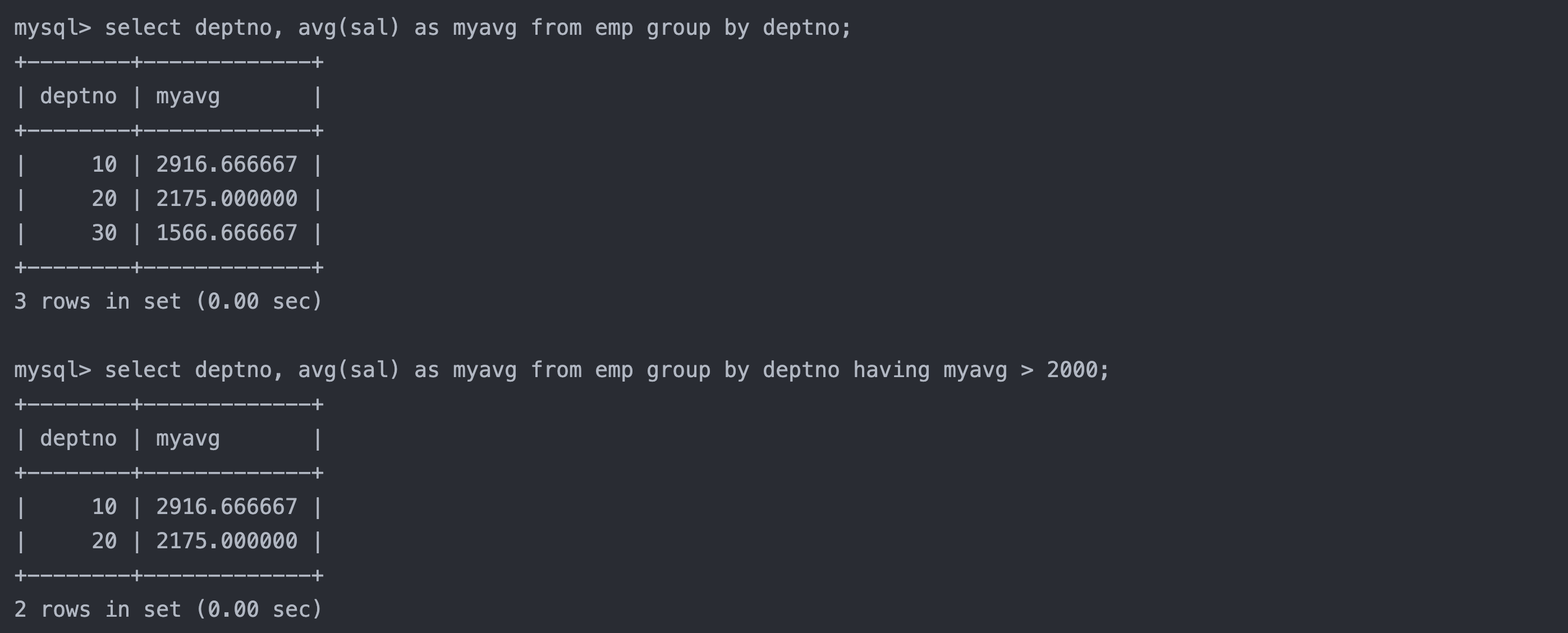

The Sqoop script:

    sqoop import -D mapred.job.queue.name=highway \
    -D mapreduce.map.memory.mb=4096 \
    -D mapreduce.map.java.opts=-Xmx3072m \
    --connect "jdbc:oracle:thin:@//localhost:61521/LZY2" \
    --username LZSHARE \
    --password '123456' \
    --query "SELECT TO_CHAR(GCRQ, 'YYYY') AS gcrq_year,TO_CHAR(GCRQ, 'MM') AS gcrq_month,TO_CHAR(GCRQ, 'DD') AS gcrq_day,YEAR,TO_CHAR(GCRQ, 'YYYY-MM-DD HH24:MI:SS') AS GCRQ,........TO_CHAR(DELETE_TIME, 'YYYY-MM-DD HH24:MI:SS') AS DELETE_TIME,CREATE_BY,TO_CHAR(CREATE_TIME, 'YYYY-MM-DD HH24:MI:SS') AS CREATE_TIME,UPDATE_BY,TO_CHAR(UPDATE_TIME, 'YYYY-MM-DD HH24:MI:SS') AS UPDATE_TIME,TO_CHAR(INSERT_TIME, 'YYYY-MM-DD HH24:MI:SS') AS INSERT_TIME FROM LZJHGX.dat_dcsj_time
    WHERE TO_CHAR(GCRQ , 'YYYY-MM-DD') < TO_CHAR(SYSDATE, 'YYYY-MM-DD') AND \$CONDITIONS" \
    --split-by sjxh \
    --hcatalog-database dw \
    --hcatalog-table ods_pre_dat_dcsj_time \
    --hcatalog-storage-stanza 'stored as orc' \
    --num-mappers 20

When the query is run directly in Oracle, the date and time values come back in full.

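After the import finishes, however, the date/time columns in Hive all turn out to be NULL.

Dropping the `TO_CHAR` conversion is not an option either, because the job then fails with: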

Error: java.lang.ClassCastException: java.sql.Timestamp cannot be cast to org.apache.hadoop.hive.common.type.Timestamp

So I approached it from the other side: change the timestamp columns in the Hive table to string type. With that change, the problem was resolved.
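As a rough sketch of that change, the helper below only prints the Hive DDL that would switch a list of columns to `STRING`; it does not run anything against Hive. The function name `gen_alter_to_string` is hypothetical, and the column names are assumed to match the aliases in the query above, so check them against the real table definition first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one ALTER TABLE statement per column,
# changing that column's type to STRING while keeping its name.
# Usage: gen_alter_to_string <database> <table> <column>...
gen_alter_to_string() {
  local db="$1" table="$2"
  shift 2
  local col
  for col in "$@"; do
    printf 'ALTER TABLE %s.%s CHANGE COLUMN %s %s STRING;\n' \
      "$db" "$table" "$col" "$col"
  done
}

# Assumed column names, taken from the aliases in the Sqoop query.
gen_alter_to_string dw ods_pre_dat_dcsj_time \
  gcrq delete_time create_time update_time insert_time
```

The printed statements can be reviewed and then passed to Hive, for example with `hive -e` or through beeline. Whether an in-place type change is accepted on an existing ORC table depends on the Hive version and its settings; recreating the table with string columns before re-running the import is the alternative.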