Descheduler rules

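`karmada-descheduler` periodically checks all Deployments, typically every 2 minutes, and determines how many replicas cannot be scheduled in their target clusters. It does this by calling `karmada-scheduler-estimator`. If it finds unschedulable replicas, it evicts them by reducing the replica counts in `spec.clusters`. That change triggers `karmada-scheduler` to perform a "Scale Schedule" based on the current situation.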

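Note that this eviction and rescheduling only takes effect when the replica scheduling strategy is set to dynamic division. That strategy distributes replicas across clusters according to the resources each cluster has available. By periodically checking cluster state and resource availability, Karmada can adjust where replicas are placed, which keeps resource utilization efficient and the system stable.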
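The eviction step described above comes down to simple arithmetic per cluster. The snippet below is a toy illustration only, not Karmada source code; the function name `shrink_replicas` is hypothetical. It assumes the descheduler subtracts the number of unschedulable replicas from the count assigned to a cluster and never goes below zero.

```shell
# Toy illustration only: not taken from Karmada.
# shrink_replicas ASSIGNED UNSCHEDULABLE
# Prints the replica count left for one cluster after the
# unschedulable replicas are evicted (floored at zero).
shrink_replicas() {
  remaining=$(( $1 - $2 ))
  if [ "$remaining" -lt 0 ]; then
    remaining=0
  fi
  echo "$remaining"
}

# Example: a cluster was assigned 3 replicas and none of them can be scheduled.
shrink_replicas 3 3
```

The replicas removed this way are what the scheduler then has to place again on clusters that still have capacity.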

Prerequisites

Make sure that all Kubernetes member clusters have joined Karmada and that `karmada-descheduler` is deployed in karmada-host, as shown in the figure.
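One way to confirm both prerequisites from the command line is sketched below. The helper names are hypothetical, and the `karmada-system` namespace is an assumption based on a default Karmada installation; adjust it if your components live elsewhere.

```shell
# Sketch only: helper names are hypothetical and kubeconfig paths are passed in by the caller.
KUBECTL="${KUBECTL:-kubectl}"

# List the member clusters registered with the Karmada control plane.
# Arg 1: kubeconfig of the Karmada API server.
list_member_clusters() {
  "$KUBECTL" --kubeconfig "$1" get clusters
}

# Check the descheduler Deployment in the host cluster.
# Arg 1: kubeconfig of the host cluster (assumes the karmada-system namespace).
check_descheduler() {
  "$KUBECTL" --kubeconfig "$1" -n karmada-system get deployment karmada-descheduler
}
```

For example, `list_member_clusters ~/kube-karmada` uses the Karmada kubeconfig that appears later in this walkthrough, while `check_descheduler` needs the kubeconfig of your host cluster.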

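Test

To simulate a scheduling failure caused by insufficient resources, create an nginx Deployment with three replicas and a policy that divides them dynamically between the two member clusters.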

```
root@karmada-cluster01:/home/karmada-teaching/descheduler# cat pp-deploy-nginx-descheduler.yaml
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: nginx-descheduler-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: nginx-descheduler
  placement:
    clusterAffinity:
      clusterNames:
        - cluster1
        - cluster2
    replicaScheduling:
      replicaDivisionPreference: Weighted
      replicaSchedulingType: Divided
      weightPreference:
        dynamicWeight: AvailableReplicas
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-descheduler
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - image: 10.250.2.118:18000/library/nginx:latest
          name: nginx
```

Apply the manifest:

```
kubectl apply -f pp-deploy-nginx-descheduler.yaml -n karmada --kubeconfig ~/kube-karmada
propagationpolicy.policy.karmada.io/nginx-descheduler-propagation configured
deployment.apps/nginx-descheduler configured
```

At this point all replicas are running on cluster1. Now cordon every node in cluster1 so that the cluster stops accepting new pods:

```
root@karmada-cluster01:/home/karmada-teaching/descheduler# kubectl --kubeconfig ~/.kube/21config cordon k8s-cluster1-node2
node/k8s-cluster1-node2 cordoned
root@karmada-cluster01:/home/karmada-teaching/descheduler# kubectl --kubeconfig ~/.kube/21config cordon k8s-cluster1-node1
node/k8s-cluster1-node1 cordoned
root@karmada-cluster01:/home/karmada-teaching/descheduler# kubectl --kubeconfig ~/.kube/21config cordon k8s-cluster01
node/k8s-cluster01 cordoned
```

Then delete the nginx pods:

```
root@karmada-cluster01:/home/karmada-teaching/descheduler# kubectl --kubeconfig ~/.kube/21config delete pod -l app=nginx -n karmada
pod "nginx-descheduler-7864466765-fgqsc" deleted
pod "nginx-descheduler-7864466765-lhvnr" deleted
pod "nginx-descheduler-7864466765-mbh4n" deleted
```

```

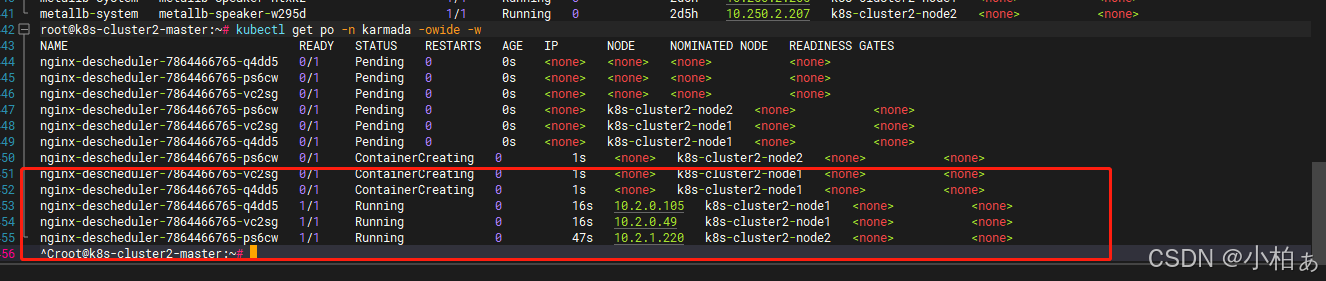

此时集群1的po状态

```

root@k8s-cluster1-master:/home/k8s# kubectl get po -n karmada -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-descheduler-7864466765-b4h4l 0/1 Pending 0 18s <none> <none> <none> <none>

nginx-descheduler-7864466765-cvx4k 0/1 Pending 0 18s <none> <none> <none> <none>

nginx-descheduler-7864466765-xr22g 0/1 Pending 0 18s <none> <none> <none> <none>

```大概等5-7分钟就会从cluster1到cluster2当当中

已经调度到另外集群当中
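To confirm the move without relying on a screenshot, you can inspect the replica assignment that Karmada records for the workload. The sketch below wraps that query in a hypothetical helper, `show_binding_clusters`. It assumes the ResourceBinding is named after the workload and its kind (here `nginx-descheduler-deployment`), so check the actual name with `kubectl get resourcebinding` if the lookup fails.

```shell
# Sketch only: the helper name is hypothetical.
KUBECTL="${KUBECTL:-kubectl}"

# Print the per-cluster replica assignment stored in a ResourceBinding.
# Args: Karmada kubeconfig, namespace, ResourceBinding name.
show_binding_clusters() {
  "$KUBECTL" --kubeconfig "$1" -n "$2" get resourcebinding "$3" \
    -o jsonpath='{.spec.clusters}'
}
```

For this walkthrough the call would be `show_binding_clusters ~/kube-karmada karmada nginx-descheduler-deployment`. Once the test is finished, `kubectl uncordon <node>` on each cordoned node makes cluster1 schedulable again.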