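Contents

1. 📂 Preface

2. 💠 Task breakdown

2.1 Product requirements breakdown

2.2 Development work breakdown

3. 🔱 Implementation

3.1 Code directory screenshots

3.2 app module

3.3 middleware module

3.4 portal module

4. ⚛️ Taking photos and recording video

4.1 Unified handling of forward and backward swipes

4.2 Initializing the View and Camera

4.3 Handling forward and backward swipes on the page

4.4 String resources

4.5 Storing photos and videos

4.6 Taking a photo

4.7 Recording video

5. ⚛️ Viewing photos and videos

5.1 Data structure definitions

5.2 Utility classes for fetching photos and videos

5.3 Decoupling the viewmodel and model layers

5.4 Initializing the View

5.5 Loading and using the BannerViewPager component

5.6 Fetching data and loading it into the View

5.7 Handling forward and backward swipes on the page

5.8 gradle dependencies

6. ✅ Summary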

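1. 📂 Preface

Background: to let users try out camera features on AR glasses, the R&D team decided internally to build a camera app tailored to AR glasses. No product, design, QA, or project management colleagues were involved.

Developers involved: one OS/app developer (the author).

Customers and users: users are the people who end up using the product and care most about whether the features are practical. At this stage the users are OS/app developers; later they will be product managers, designers, and eventually anyone who uses this OS. The customer is my direct manager, who cares most about how complete the features are.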

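2. 💠 Task breakdown

2.1 Product requirements breakdown

Because this is an internal R&D requirement with no product manager involved, the requirements had to be derived by surveying existing products and drawing on past experience building camera apps. They fall roughly into three parts: taking photos, recording video, and viewing photos and videos.

2.2 Development work breakdown

Based on these requirements and the characteristics of AR glasses, the development work breaks down as follows: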

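- Set up the project: 0.5 person-days (the first version of the camera app was built on the scaffold from the "Android application development framework" article).

- Develop and self-test the default photo mode shown when the app opens: 0.5 person-days (using the CameraX API, with the open-source KotlinCameraXDemo as a reference).

- Develop and self-test TP tap to take a photo and save it to the gallery: 0.5 person-days.

- Develop and self-test TP swipe forward to switch to video mode, TP tap to start recording, TP tap again to stop recording and save to the gallery, and TP swipe forward again to switch back to photo mode: 1 person-day.

- Develop and self-test TP swipe backward to open the gallery viewer: 0.5 person-days.

- Develop and self-test browsing photos and videos in the gallery by swiping forward and backward, with tap to play and pause for videos: 1 person-day (I had built this before and could reuse existing code; normally it would likely take at least about 3 days of development and tuning).

- Have product and development colleagues try the features, then refine them: 0.5 person-days.

- Clean up the code, create a repository and push the code, and bundle the APK into the system OS: 0.5 person-days.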

3. 🔱 Implementation

3.1 Code directory screenshots

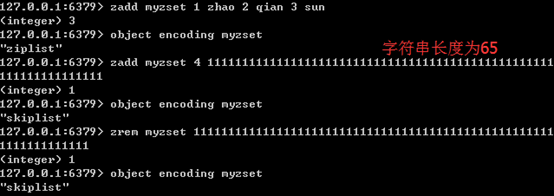

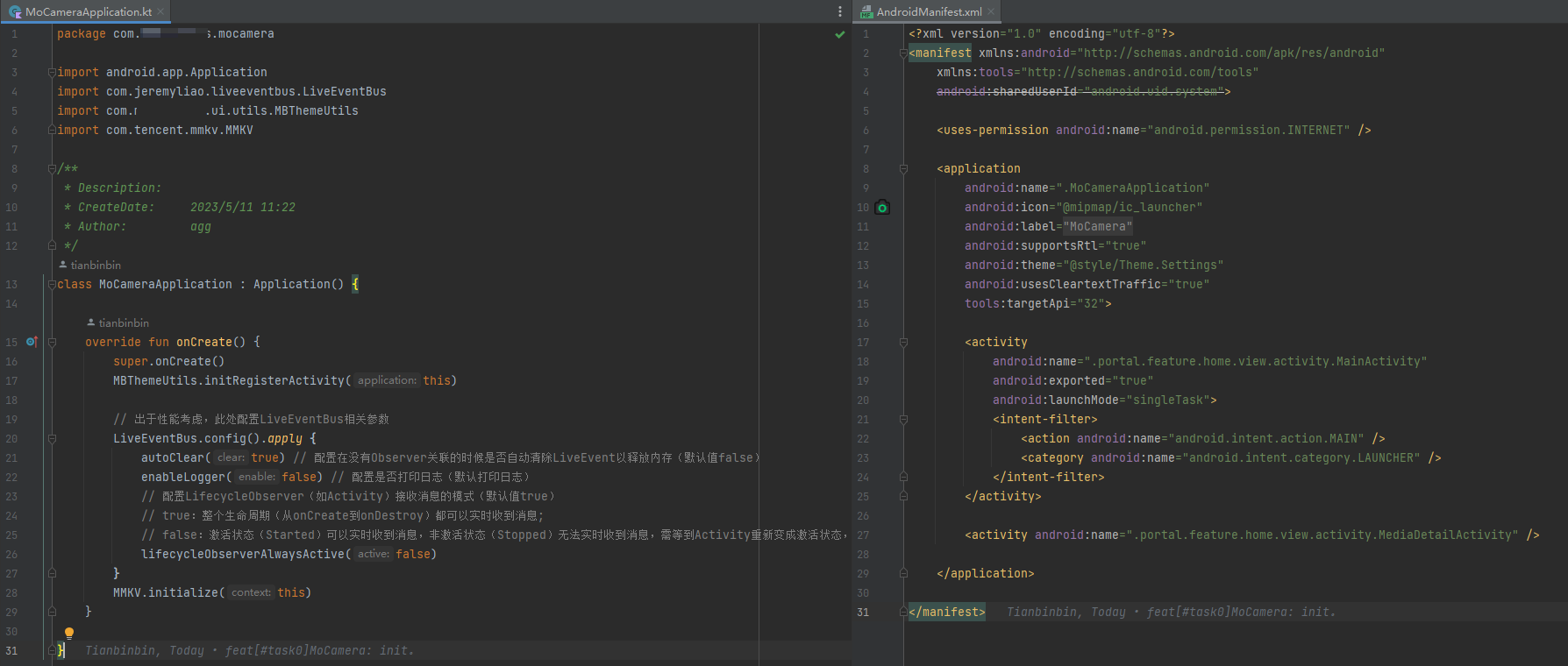

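3.2 app module

Mainly customizes the Application and the manifest.

3.3 middleware module

The middleware layer holds elements shared across modules, such as Chinese and English string translations, the app logo, and some base classes. The development framework article already covers this, so it is not repeated here.

3.4 portal module

Following the MVVM architecture, the MainActivity class implements taking photos and recording video, and the MediaDetailActivity class implements viewing photos and videos. The concrete implementation is the focus of the following chapters.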

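4. ⚛️ Taking photos and recording video

4.1 Unified handling of forward and backward swipes

BaseActivity overrides dispatchGenericMotionEvent and exposes the abstract methods scrollForward and scrollBackward, so that subclasses handle forward and backward swipe events in one consistent way.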

```kotlin
abstract class BaseActivity<VB : ViewBinding, VM : BaseViewModel> : AppCompatActivity(), IView {

    protected lateinit var binding: VB

    protected val viewModel: VM by lazy {
        ViewModelProvider(this)[(this.javaClass.genericSuperclass as ParameterizedType).actualTypeArguments[1] as Class<VM>]
    }

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        binding = initBinding(layoutInflater)
        setContentView(binding.root)
        initData()
        initViewModel()
        initView()
    }

    override fun dispatchGenericMotionEvent(event: MotionEvent): Boolean {
        if (event.action == MotionEvent.ACTION_SCROLL && FastScrollUtils.isNotFastScroll(binding.root)) {
            if (event.getAxisValue(MotionEvent.AXIS_VSCROLL) < 0) scrollForward() else scrollBackward()
        }
        return super.dispatchGenericMotionEvent(event)
    }

    abstract fun initBinding(inflater: LayoutInflater): VB
    abstract fun scrollForward()
    abstract fun scrollBackward()
}
```

A guard against rapid repeated swipes is also added, to avoid unnecessary bugs.
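The guard can be understood independently of Android views. The following is a minimal plain-Kotlin sketch of the same idea: an event is accepted only if enough time has passed since the last accepted one. `ScrollDebouncer` and its injectable `clock` parameter are hypothetical names introduced here for illustration; the project itself stores the timestamp in a view tag instead.

```kotlin
/**
 * Debounce sketch: accepts an event only if at least [minIntervalMs] have
 * elapsed since the last accepted event. Rejected events do not reset the timer.
 * ScrollDebouncer is a hypothetical name, not a class from the project.
 */
class ScrollDebouncer(
    private val minIntervalMs: Long = 500L,
    // The clock is injectable so the behaviour can be checked without sleeping.
    private val clock: () -> Long = { System.currentTimeMillis() },
) {
    private var lastAcceptedAt: Long? = null

    fun tryAccept(): Boolean {
        val now = clock()
        val last = lastAcceptedAt
        if (last != null && now - last < minIntervalMs) return false
        lastAcceptedAt = now
        return true
    }
}
```

Injecting the clock is a design choice for testability only; with the default argument the class behaves like a plain 500 ms throttle.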

```kotlin
/**
 * Description: Prevents fast scrolling from triggering the event multiple times.
 * CreateDate: 2022/11/29 17:59
 * Author: agg
 */
object FastScrollUtils {

    private const val MIN_CLICK_DELAY_TIME = 500

    fun isNotFastScroll(view: View, time: Int = MIN_CLICK_DELAY_TIME): Boolean {
        var flag = true
        val curClickTime = System.currentTimeMillis()
        // The timestamp of the last accepted event is stored as a tag on the view.
        view.getTag(view.id)?.let {
            if (curClickTime - (it as Long) < time) {
                flag = false
            }
        }
        if (flag) view.setTag(view.id, curClickTime)
        return flag
    }
}
```

4.2 Initializing the View and Camera

First, during Activity initialization, the Window is set to 0dof when the window is initialized, so that the visible window follows the user's field of view.

```kotlin
private fun initWindow() {
    Log.i(TAG, "initWindow: ")
    val lp = window.attributes
    lp.dofIndex = 0
    lp.subType = WindowManager.LayoutParams.MB_WINDOW_IMMERSIVE_0DOF
    window.attributes = lp
}
```

Then, initialize the CameraX configuration. This covers the ImageCapture (photo) and VideoCapture (video) APIs, and binding them to the lifecycle with bindToLifecycle.

```kotlin
private val CAMERA_MAX_RESOLUTION = Size(3264, 2448)
private lateinit var mCameraExecutor: ExecutorService
private var mImageCapture: ImageCapture? = null
private var mVideoCapture: VideoCapture? = null
private var mIsVideoModel = false
private var mVideoRecordTime = 0L

private fun initCameraView() {
    Log.i(TAG, "initCameraView: ")
    mCameraExecutor = Executors.newSingleThreadExecutor()
    val cameraProviderFuture = ProcessCameraProvider.getInstance(this)
    cameraProviderFuture.addListener({
        try {
            mImageCapture = ImageCapture.Builder()
                .setCaptureMode(ImageCapture.CAPTURE_MODE_MAXIMIZE_QUALITY)
                .setTargetResolution(CAMERA_MAX_RESOLUTION)
                .build()
            mVideoCapture = VideoCapture.Builder().build()
            val cameraSelector = CameraSelector.DEFAULT_BACK_CAMERA
            val cameraProvider = cameraProviderFuture.get()
            cameraProvider.unbindAll()
            cameraProvider.bindToLifecycle(this, cameraSelector, mImageCapture, mVideoCapture)
        } catch (e: java.lang.Exception) {
            Log.e(TAG, "bindCamera Failed!: $e")
        }
    }, ContextCompat.getMainExecutor(this))
}
```

Next, if taking photos and recording video need a preview, two changes are required: add a PreviewView component to the layout, and add a Preview to the arguments of bindToLifecycle, as shown in the following code:

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:id="@+id/parent"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:keepScreenOn="true">

    <androidx.camera.view.PreviewView
        android:id="@+id/previewView"
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

```kotlin
cameraProviderFuture.addListener({
    try {
        // ...
        // With preview
        val preview = Preview.Builder().build()
        binding.previewView.apply {
            implementationMode = PreviewView.ImplementationMode.COMPATIBLE
            preview.setSurfaceProvider(surfaceProvider)
        }
        cameraProvider.bindToLifecycle(this, cameraSelector, preview, mImageCapture, mVideoCapture)
        // cameraProvider.bindToLifecycle(this, cameraSelector, mImageCapture, mVideoCapture)
    } catch (e: java.lang.Exception) {
        Log.e(TAG, "bindCamera Failed!: $e")
    }
}, ContextCompat.getMainExecutor(this))
```

Finally, listen for taps on the window and handle the corresponding event.

```kotlin
private val mVideoIsRecording = AtomicBoolean(false)

private fun isRecording(): Boolean = mVideoIsRecording.get()

override fun initView() {
    initWindow()
    initCameraView()
    binding.parent.setOnClickListener {
        Log.i(TAG, "click: model=$mIsVideoModel,isRecordingVideo=${isRecording()}")
        try {
            if (mIsVideoModel) {
                // Video mode: a tap toggles recording.
                if (isRecording()) stopRecord() else startRecord()
            } else {
                // Photo mode: a tap takes a picture.
                takePicture()
            }
        } catch (e: Exception) {
            e.printStackTrace()
        }
    }
}
```

4.3 Handling forward and backward swipes on the page
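Taken together, the tap handler and the two swipe handlers in this section form a small state machine over two flags: the current mode and whether a recording is in progress. The sketch below models that logic in plain Kotlin so it can be read and tested without a device. `CaptureStateMachine`, `CaptureMode`, and `Action` are hypothetical names introduced here. It also simplifies one detail: the recording flag is cleared as soon as a stop is requested, whereas the article's code clears it in the video-saved or error callback.

```kotlin
enum class CaptureMode { PHOTO, VIDEO }

/** What the UI layer should do in response to an input (hypothetical names). */
enum class Action {
    TAKE_PICTURE, START_RECORDING, STOP_RECORDING,
    SWITCH_TO_PHOTO, SWITCH_TO_VIDEO, OPEN_GALLERY, SHOW_RECORDING_NOTICE
}

class CaptureStateMachine {
    var mode = CaptureMode.PHOTO
        private set
    var isRecording = false
        private set

    /** Tap: take a picture in photo mode, toggle recording in video mode. */
    fun onTap(): Action = when {
        mode == CaptureMode.PHOTO -> Action.TAKE_PICTURE
        isRecording -> { isRecording = false; Action.STOP_RECORDING }
        else -> { isRecording = true; Action.START_RECORDING }
    }

    /** Swipe forward: toggle the mode, unless a recording is in progress. */
    fun onScrollForward(): Action = when {
        isRecording -> Action.SHOW_RECORDING_NOTICE
        mode == CaptureMode.VIDEO -> { mode = CaptureMode.PHOTO; Action.SWITCH_TO_PHOTO }
        else -> { mode = CaptureMode.VIDEO; Action.SWITCH_TO_VIDEO }
    }

    /** Swipe backward: open the gallery, unless a recording is in progress. */
    fun onScrollBackward(): Action =
        if (isRecording) Action.SHOW_RECORDING_NOTICE else Action.OPEN_GALLERY
}
```

Returning an `Action` instead of calling the camera directly keeps the decision logic separate from its side effects, which is one possible way to make it unit-testable.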

```kotlin
/**
 * Swipe forward: toggle between video mode and photo mode.
 */
override fun scrollForward() {
    Log.i(TAG, "scrollForward: model=$mIsVideoModel,isRecordingVideo=${isRecording()}")
    if (mIsVideoModel) {
        if (isRecording()) {
            MBToast(this, Toast.LENGTH_SHORT, getString(R.string.record_video_in_progress)).show()
        } else {
            mIsVideoModel = false
            MBToast(this, Toast.LENGTH_SHORT, getString(R.string.photo_model)).show()
        }
    } else {
        mIsVideoModel = true
        MBToast(this, Toast.LENGTH_SHORT, getString(R.string.video_model)).show()
    }
}

/**
 * Swipe backward: open the gallery viewer.
 */
override fun scrollBackward() {
    if (isRecording()) {
        MBToast(this, Toast.LENGTH_SHORT, getString(R.string.record_video_in_progress)).show()
    } else {
        Log.i(TAG, "scrollBackward: open the gallery viewer")
        MediaDetailActivity.launch(this)
    }
}
```

4.4 String resources

Chinese:

```xml
<resources>
    <string name="app_name">Mo相机</string>
    <string name="loading">加载中…</string>
    <string name="tap_to_snap">单击拍照</string>
    <string name="tap_to_record_video">单击录像</string>
    <string name="tap_to_stop_record_video">单击暂停录像</string>
    <string name="scroll_backward_to_gallery">后滑打开图库</string>
    <string name="scroll_forward_to_snap_model">前滑切换拍照模式</string>
    <string name="scroll_forward_to_video_model">前滑切换录像模式</string>
    <string name="record_video_start">开始录像</string>
    <string name="record_video_in_progress">正在录像</string>
    <string name="record_video_complete">录像完成</string>
    <string name="take_picture_complete">拍照完成</string>
    <string name="photo_model">拍照模式</string>
    <string name="video_model">录像模式</string>
</resources>
```

English:

```xml
<resources>
    <string name="app_name">MoCamera</string>
    <string name="loading">Loading…</string>
    <string name="tap_to_snap">Tap To Snap</string>
    <string name="tap_to_record_video">Tap To Record Video</string>
    <string name="tap_to_stop_record_video">Tap To Stop Record Video</string>
    <string name="scroll_backward_to_gallery">Scroll Backward To Gallery</string>
    <string name="scroll_forward_to_snap_model">Scroll Forward To Snap Mode</string>
    <string name="scroll_forward_to_video_model">Scroll Forward To Video Mode</string>
    <string name="record_video_start">Record Video Start</string>
    <string name="record_video_in_progress">Video Recording In Progress</string>
    <string name="record_video_complete">Record Video Complete</string>
    <string name="take_picture_complete">Take Picture Complete</string>
    <string name="photo_model">Photo Mode</string>
    <string name="video_model">Video Mode</string>
</resources>
```

4.5 Storing photos and videos

initOutputDirectory initializes the storage directory, createPhotoFile and createVideoFile create the file paths for photos and videos, and updateMediaFile updates the system gallery.
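The directory and naming scheme can be illustrated without Android. The sketch below assumes timestamp-based file names under a per-app directory. `ensureOutputDirectory` and `createMediaFile` are hypothetical helpers, and the extension values are assumptions, since the article does not show `Constants.PHOTO_EXTENSION` or `Constants.VIDEO_EXTENSION`.

```kotlin
import java.io.File
import java.io.IOException

// Assumed values: the article does not show the project's extension constants.
const val PHOTO_EXTENSION = ".jpg"
const val VIDEO_EXTENSION = ".mp4"

/**
 * Returns the directory [appName] under [root], creating it if needed.
 * mkdirs() also creates missing parent directories.
 */
fun ensureOutputDirectory(root: File, appName: String): File {
    val dir = File(root, appName)
    if (!dir.isDirectory && !dir.mkdirs()) {
        throw IOException("Cannot create output directory: $dir")
    }
    return dir
}

/** Builds a file path named after the capture time in milliseconds. */
fun createMediaFile(
    dir: File,
    extension: String,
    nowMs: Long = System.currentTimeMillis(),
): File = File(dir, "$nowMs$extension")
```

Millisecond timestamps are unique enough for a single user tapping a touchpad; two captures within the same millisecond would map to the same name.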

```kotlin
class MainViewModel : BaseViewModel() {

    private lateinit var outputDirectory: File

    fun init(context: ContextWrapper) {
        viewModelScope.launch { SoundPoolTools.init(context) }
        initOutputDirectory(context)
    }

    /**
     * Updates the system gallery.
     */
    fun updateMediaFile(context: Context, file: File) {
        // Note: ACTION_MEDIA_SCANNER_SCAN_FILE is deprecated as of API level 29.
        val intent = Intent(Intent.ACTION_MEDIA_SCANNER_SCAN_FILE)
        intent.data = Uri.fromFile(file)
        context.sendBroadcast(intent)
    }

    fun createPhotoFile(): File {
        return File(outputDirectory, System.currentTimeMillis().toString() + Constants.PHOTO_EXTENSION)
    }

    fun createVideoFile(): File {
        return File(outputDirectory, System.currentTimeMillis().toString() + Constants.VIDEO_EXTENSION)
    }

    private fun initOutputDirectory(context: ContextWrapper) {
        outputDirectory = File(context.externalMediaDirs[0], context.getString(R.string.app_name))
        if (!outputDirectory.exists()) outputDirectory.mkdir()
    }
}
```

4.6 Taking a photo

```kotlin
/**
 * Takes a photo.
 */
private fun takePicture() {
    Log.i(TAG, "takePicture: ")
    SoundPoolTools.playCameraPhoto(this)
    val photoFile = viewModel.createPhotoFile()
    mImageCapture?.takePicture(
        ImageCapture.OutputFileOptions.Builder(photoFile).build(),
        mCameraExecutor,
        object : ImageCapture.OnImageSavedCallback {
            override fun onError(exc: ImageCaptureException) {
                Log.e(TAG, "Photo capture failed: ${exc.message}", exc)
            }

            override fun onImageSaved(output: ImageCapture.OutputFileResults) {
                val savedUri = output.savedUri ?: Uri.fromFile(photoFile)
                Log.i(TAG, "Photo capture succeeded: $savedUri")
                // The callback runs on mCameraExecutor, so switch to the UI thread for the toast.
                runOnUiThread {
                    MBToast(
                        this@MainActivity,
                        Toast.LENGTH_SHORT,
                        getString(R.string.take_picture_complete)
                    ).show()
                }
                viewModel.updateMediaFile(this@MainActivity, photoFile)
            }
        })
}
```

4.7 Recording video

```kotlin
private fun startRecord() {
    if (isRecording()) {
        MBToast(this, Toast.LENGTH_SHORT, getString(R.string.record_video_in_progress)).show()
        return
    }
    Log.i(TAG, "startRecord: ")
    val videoFile = viewModel.createVideoFile()
    mVideoCapture?.startRecording(
        VideoCapture.OutputFileOptions.Builder(videoFile).build(),
        mCameraExecutor,
        object : VideoCapture.OnVideoSavedCallback {
            override fun onVideoSaved(output: VideoCapture.OutputFileResults) {
                mVideoIsRecording.set(false)
                val savedUri = output.savedUri ?: Uri.fromFile(videoFile)
                Log.i(TAG, "onVideoSaved:${savedUri.path}")
                runOnUiThread {
                    MBToast(
                        this@MainActivity,
                        Toast.LENGTH_SHORT,
                        getString(R.string.record_video_complete)
                    ).show()
                }
                viewModel.updateMediaFile(this@MainActivity, videoFile)
            }

            override fun onError(videoCaptureError: Int, message: String, cause: Throwable?) {
                mVideoIsRecording.set(false)
                Log.e(TAG, "onError:${message}")
            }
        })
    mVideoIsRecording.set(true)
    if (isRecording()) {
        MBToast(this, Toast.LENGTH_SHORT, getString(R.string.record_video_start)).show()
    }
}

private fun stopRecord() {
    Log.i(TAG, "stopRecord: ")
    if (mVideoIsRecording.get()) mVideoCapture?.stopRecording()
}
```

5. ⚛️ Viewing photos and videos

5.1 Data structure definitions

First, define the data structures for photos, videos, and their parent class.

```kotlin
open class MediaInfo(
    @Expose
    var size: Long = 0L, // size, in bytes
    @Expose
    var width: Float = 0f, // width
    @Expose
    var height: Float = 0f, // height
    @Expose
    var localPath: String = "", // absolute path on the system
    @Expose
    var localPathUri: String = "", // media file Uri
    @Expose
    var fileName: String = "", // file name
    @Expose
    var mimeType: String = "", // media type
    @Expose
    var mediaId: String = "", // media ID
    @Expose
    var lastModified: Long = 0L, // last modified time
) {
    companion object {
        const val PHOTO_MIMETYPE_DEFAULT = "image/jpeg"
        const val VIDEO_MIMETYPE_DEFAULT = "video/mp4"
        const val PHOTO_MIMETYPE_DEFAULT_CONTAIN = "image"
        const val VIDEO_MIMETYPE_DEFAULT_CONTAIN = "video"
    }

    enum class MediaType(val type: Int) {
        NOT_DEFINE(0),
        PHOTO(1),
        VIDEO(2),
    }
}

data class PhotoInfo(
    var photoId: String = "",
    @SerializedName("photoCoverFull")
    var photoCoverFull: String = "", // online image URL, or system image media path
    @SerializedName("photoCover")
    var photoCover: String = "", // online image thumbnail, or system image media path
) : MediaInfo() {

    override fun equals(other: Any?): Boolean {
        return if (other is PhotoInfo) {
            other.photoId == this.photoId
        } else {
            false
        }
    }

    override fun hashCode(): Int {
        return photoId.hashCode()
    }
}

/**
 * Description:
 * CreateDate: 2022/11/16 18:09
 * Author: agg
 *
 * Bitrate, in bps. The higher the bitrate, the faster the data is transferred.
 * Specifying a bitrate when compressing a video determines the size of the compressed video.
 * Video size (byte) = (duration(ms) / 1000) * (biteRate(bit/s) / 8)
 */
data class VideoInfo(
    var firstFrame: Bitmap? = null, // first frame of the video, used by the business layer; may be null
    @Expose
    var duration: Long = 0L, // video length, in ms
    @Expose
    var biteRate: Long = 0L, // video bitrate, in bps
    /* --------not necessary, maybe not value---- */
    @Expose
    var addTime: Long = 0L, // time the video was added
    @Expose
    var videoRotation: Int = 0, // video orientation
    /* --------not necessary, maybe not value---- */
) : MediaInfo()
```

Next, a data structure that mixes photos and videos is needed. It is used when photos and videos are displayed together.
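The core of such a mixed structure is a merge of the two lists ordered by modification time, newest first. A minimal plain-Kotlin illustration follows. `MediaEntry` is a simplified, hypothetical stand-in for `MediaInfo` that carries only the fields the merge needs, and `mergeNewestFirst` is likewise a name introduced here.

```kotlin
// Simplified, hypothetical stand-in for MediaInfo: only the fields the merge needs.
data class MediaEntry(val fileName: String, val lastModified: Long)

/** Concatenates both lists and sorts the result by lastModified, newest first. */
fun mergeNewestFirst(videos: List<MediaEntry>, photos: List<MediaEntry>): List<MediaEntry> =
    (videos + photos).sortedByDescending { it.lastModified }
```

This version returns a new list on every call. Caching the merged result, as a mutable holder class might do, trades that simplicity for fewer repeated sorts.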

```kotlin
data class MixMediaInfoList(
    var isLoadVideoListFinish: Boolean = false,
    var isLoadPhotoListFinish: Boolean = false,
    var videoList: MutableList<MediaInfo> = mutableListOf(),
    var photoList: MutableList<MediaInfo> = mutableListOf(),
    var maxMediaList: MutableList<MediaInfo> = mutableListOf(),
) {
    // Builds the merged list once, newest first, and reuses it on later calls.
    suspend fun getMaxMediaList(): MutableList<MediaInfo> = withContext(Dispatchers.IO) {
        if (maxMediaList.isEmpty()) {
            maxMediaList.addAll(videoList)
            maxMediaList.addAll(photoList)
            maxMediaList.sortByDescending { it.lastModified }
        }
        maxMediaList
    }
}
```

5.2 Utility classes for fetching photos and videos

First, provide a utility class that fetches photos and converts them into the custom PhotoInfo data structure.

```kotlin
object PhotoInfoUtils {

    /**
     * Fetches all image files on the system.
     */
    suspend fun getSysPhotos(contentResolver: ContentResolver): MutableList<MediaInfo> =
        withContext(Dispatchers.IO) {
            val photoList: MutableList<MediaInfo> = mutableListOf()
            var cursor: Cursor? = null
            try {
                cursor = contentResolver.query(
                    Media.EXTERNAL_CONTENT_URI,
                    arrayOf(
                        Media._ID,
                        Media.DATA,
                        Media.DISPLAY_NAME,
                        Media.MIME_TYPE, // media type
                        Media.SIZE,
                        Media.WIDTH, // image width
                        Media.HEIGHT, // image height
                        Media.DATE_MODIFIED,
                    ),
                    null,
                    null,
                    // The original used ContactsContract.Contacts._ID, which is the same "_id" column name.
                    Media._ID + " DESC",
                    null
                )
                cursor?.moveToFirst()
                if (cursor == null || cursor.isAfterLast) return@withContext photoList
                cursor.let {
                    while (!it.isAfterLast) {
                        val photoLibraryInfo = getPhoto(it)
                        // The cursor cannot guarantee width and height for every image; only size is relied on here.
                        photoList.add(photoLibraryInfo)
                        it.moveToNext()
                    }
                }
            } catch (e: Exception) {
                LogUtils.e(e)
            } finally {
                cursor?.close()
            }
            photoList
        }

    @SuppressLint("Range")
    private fun getPhoto(cursor: Cursor): PhotoInfo {
        val phoneLibraryInfo = PhotoInfo()
        phoneLibraryInfo.photoId = cursor.getString(cursor.getColumnIndex(Media._ID))
        phoneLibraryInfo.localPath = cursor.getString(cursor.getColumnIndex(Media.DATA))
        phoneLibraryInfo.mimeType = cursor.getString(cursor.getColumnIndex(Media.MIME_TYPE))
        phoneLibraryInfo.size = cursor.getLong(cursor.getColumnIndex(Media.SIZE))
        phoneLibraryInfo.width = cursor.getFloat(cursor.getColumnIndex(Media.WIDTH))
        phoneLibraryInfo.height = cursor.getFloat(cursor.getColumnIndex(Media.HEIGHT))
        phoneLibraryInfo.lastModified = cursor.getLong(cursor.getColumnIndex(Media.DATE_MODIFIED))
        val thumbnailUri: Uri = ContentUris.withAppendedId(
            Media.EXTERNAL_CONTENT_URI,
            phoneLibraryInfo.photoId.toLong()
        )
        phoneLibraryInfo.photoCoverFull = thumbnailUri.toString()
        phoneLibraryInfo.photoCover = thumbnailUri.toString()
        phoneLibraryInfo.localPathUri = thumbnailUri.toString()
        return phoneLibraryInfo
    }
}
```

Then, provide a utility class that fetches videos and converts them into the custom VideoInfo data structure.
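One field this conversion derives rather than reads is the bitrate. Rearranging the size formula from the VideoInfo comment, size(byte) = (duration(ms) / 1000) * (bitrate / 8), gives bitrate = 8 * size * 1000 / duration. The sketch below is a worked plain-Kotlin version of that rearrangement; `estimateBitRateBps` is a hypothetical name, and it assumes the size is in bytes and the duration in milliseconds.

```kotlin
/**
 * Estimates the average bitrate in bits per second from a file size in bytes
 * and a duration in milliseconds. Returns 0 when either input is not positive,
 * which also avoids a division by zero for videos with an unknown duration.
 */
fun estimateBitRateBps(sizeBytes: Long, durationMs: Long): Long {
    if (sizeBytes <= 0L || durationMs <= 0L) return 0L
    // bits = bytes * 8; seconds = ms / 1000  =>  bps = bytes * 8 * 1000 / ms
    return sizeBytes * 8L * 1000L / durationMs
}
```

For example, a 10,000,000-byte file lasting 20,000 ms works out to 4,000,000 bps. Because this is an average over the whole container, it includes the audio track and container overhead as well as the video stream.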

```kotlin
/**
 * Description: Video info utility class.
 * CreateDate: 2022/11/16 18:46
 * Author: agg
 */
@SuppressLint("Range", "Recycle")
object VideoInfoUtils {

    private const val VIDEO_FIRST_FRAME_TIME_US = 1000L
    private const val URI_VIDEO_PRE = "content://media/external/video/media"

    /**
     * Fetches all video files on the system.
     */
    suspend fun getSysVideos(contentResolver: ContentResolver): MutableList<MediaInfo> =
        withContext(Dispatchers.IO) {
            val videoList: MutableList<MediaInfo> = mutableListOf()
            var cursor: Cursor? = null
            try {
                val queryArray = arrayOf(
                    Media._ID,
                    Media.SIZE, // video size
                    Media.WIDTH, // video width
                    Media.HEIGHT, // video height
                    Media.DATA, // absolute path of the video
                    Media.DISPLAY_NAME, // video file name
                    Media.MIME_TYPE, // media type
                    Media.DURATION, // video length
                    Media.DATE_ADDED, // time the video was added
                    Media.DATE_MODIFIED, // time the video was last modified
                ).toMutableList()
                // if (SDK_INT >= Build.VERSION_CODES.R) queryArray.add(Media.BITRATE) // video bitrate
                cursor = contentResolver.query(
                    Media.EXTERNAL_CONTENT_URI,
                    queryArray.toTypedArray(),
                    null,
                    null,
                    Media.DATE_ADDED + " DESC",
                    null
                )
                cursor?.moveToFirst()
                if (cursor == null || cursor.isAfterLast) return@withContext videoList
                while (!cursor.isAfterLast) {
                    getVideoInfo(cursor).run { if (duration > 0 && size > 0) videoList.add(this) }
                    cursor.moveToNext()
                }
            } catch (e: Exception) {
                LogUtils.e(e)
            } finally {
                cursor?.close()
            }
            videoList
        }

    /**
     * Reads video file info from a cursor.
     * Notes: (1) videoRotation is not included yet; (2) biteRate is computed from file size and video duration.
     */
    private suspend fun getVideoInfo(cursor: Cursor): VideoInfo = withContext(Dispatchers.IO) {
        val videoInfo = VideoInfo()
        videoInfo.mediaId = cursor.getString(cursor.getColumnIndex(Media._ID))
        videoInfo.size = cursor.getLong(cursor.getColumnIndex(Media.SIZE))
        videoInfo.width = cursor.getFloat(cursor.getColumnIndex(Media.WIDTH))
        videoInfo.height = cursor.getFloat(cursor.getColumnIndex(Media.HEIGHT))
        videoInfo.localPath = cursor.getString(cursor.getColumnIndex(Media.DATA))
        videoInfo.localPathUri = getVideoPathUri(videoInfo.mediaId).toString()
        videoInfo.fileName = cursor.getString(cursor.getColumnIndex(Media.DISPLAY_NAME))
        videoInfo.mimeType = cursor.getString(cursor.getColumnIndex(Media.MIME_TYPE))
        // Do not fetch the first frame here; it is too slow. Fetch it where it is used instead.
        // videoInfo.firstFrame = getVideoThumbnail(cursor.getString(cursor.getColumnIndex(Media.DATA)))
        videoInfo.duration = cursor.getLong(cursor.getColumnIndex(Media.DURATION))
        // size is in bytes and duration in ms, so bps = 8 * size * 1000 / duration.
        // The original multiplied by an extra 1024, which contradicts the formula in the
        // VideoInfo comment, and divided without guarding against a zero duration.
        videoInfo.biteRate =
            if (videoInfo.duration > 0) 8 * videoInfo.size * 1000 / videoInfo.duration else 0L
        // if (SDK_INT >= Build.VERSION_CODES.R) cursor.getLong(cursor.getColumnIndex(Media.BITRATE))
        // else 8 * videoInfo.size * 1000 / videoInfo.duration
        videoInfo.addTime = cursor.getLong(cursor.getColumnIndex(Media.DATE_ADDED))
        videoInfo.lastModified = cursor.getLong(cursor.getColumnIndex(Media.DATE_MODIFIED))
        videoInfo
    }

    /**
     * Reads video file info from a path.
     * Notes: (1) lastModified and addTime are not included yet; (2) filePath is used in place of mediaId.
     *
     * @param path path of the video file
     * @return VideoInfo video file info
     */
    suspend fun getVideoInfo(path: String?): VideoInfo = withContext(Dispatchers.IO) {
        val videoInfo = VideoInfo()
        if (!path.isNullOrEmpty()) {
            val media = MediaMetadataRetriever()
            try {
                media.setDataSource(path)
                videoInfo.size =
                    File(path).let { if (FileUtils.isFileExists(it)) it.length() else 0 }
                videoInfo.width = media.extractMetadata(METADATA_KEY_VIDEO_WIDTH)?.toFloat() ?: 0f
                videoInfo.height = media.extractMetadata(METADATA_KEY_VIDEO_HEIGHT)?.toFloat() ?: 0f
                videoInfo.localPath = path
                videoInfo.localPathUri = getVideoPathUri(Utils.getApp(), path).toString()
                videoInfo.fileName = path.split(File.separator).let {
                    if (it.isNotEmpty()) it[it.size - 1] else ""
                }
                videoInfo.mimeType = media.extractMetadata(METADATA_KEY_MIMETYPE) ?: ""
                // videoInfo.firstFrame = media.getFrameAtTime(VIDEO_FIRST_FRAME_TIME_US)?.let { compressVideoThumbnail(it) }
                videoInfo.duration = media.extractMetadata(METADATA_KEY_DURATION)?.toLong() ?: 0
                videoInfo.biteRate = media.extractMetadata(METADATA_KEY_BITRATE)?.toLong() ?: 0
                videoInfo.videoRotation =
                    media.extractMetadata(METADATA_KEY_VIDEO_ROTATION)?.toInt() ?: 0
                videoInfo.mediaId = path
            } catch (e: Exception) {
            } finally {
                media.release()
            }
        }
        videoInfo
    }

    /**
     * Gets the video thumbnail by grabbing the first frame through the Uri.
     * @param videoUriString Uri of the video in the media library, e.g. content://media/external/video/media/11378
     */
    suspend fun getVideoThumbnail(context: Context, videoUriString: String): Bitmap? =
        withContext(Dispatchers.IO) {
            var bitmap: Bitmap? = null
            val retriever = MediaMetadataRetriever()
            try {
                retriever.setDataSource(context, Uri.parse(videoUriString))
                // OPTION_CLOSEST_SYNC: retrieves the sync (key) frame closest to the given time.
                // OPTION_CLOSEST: retrieves the frame closest to the timestamp (I-frame or P-frame).
                bitmap = if (SDK_INT >= Build.VERSION_CODES.O_MR1) {
                    retriever.getScaledFrameAtTime(
                        VIDEO_FIRST_FRAME_TIME_US,
                        OPTION_CLOSEST_SYNC,
                        THUMBNAIL_DEFAULT_COMPRESS_VALUE.toInt(),
                        THUMBNAIL_DEFAULT_COMPRESS_VALUE.toInt()
                    )
                } else {
                    retriever.getFrameAtTime(VIDEO_FIRST_FRAME_TIME_US)
                        ?.let { compressVideoThumbnail(it) }
                }
            } catch (e: Exception) {
            } finally {
                try {
                    retriever.release()
                } catch (e: Exception) {
                }
            }
            bitmap
        }

    /**
     * Gets the video Uri directly from the video resource ID.
     * @param mediaId video resource ID
     */
    fun getVideoPathUri(mediaId: String): Uri =
        Uri.withAppendedPath(Uri.parse(URI_VIDEO_PRE), mediaId)

    /**
     * Gets the video Uri from the local path of the video resource.
     * @param path local path of the video resource
     */
    fun getVideoPathUri(context: Context, path: String): Uri? {
        var uri: Uri? = null
        var cursor: Cursor? = null
        try {
            cursor = context.contentResolver.query(
                Media.EXTERNAL_CONTENT_URI,
                arrayOf(Media._ID),
                Media.DATA + "=? ",
                arrayOf(path),
                null
            )
            uri = if (cursor != null && cursor.moveToFirst()) {
                Uri.withAppendedPath(
                    Uri.parse(URI_VIDEO_PRE),
                    "" + cursor.getInt(cursor.getColumnIndex(MediaStore.MediaColumns._ID))
                )
            } else {
                // Not indexed yet: insert the file into the media library if it exists.
                if (File(path).exists()) {
                    val values = ContentValues()
                    values.put(Media.DATA, path)
                    context.contentResolver.insert(Media.EXTERNAL_CONTENT_URI, values)
                } else {
                    null
                }
            }
        } catch (e: Exception) {
        } finally {
            cursor?.close()
        }
        return uri
    }
}
```

In addition, provide a video thumbnail utility class for use by business code.

```kotlin
/**
 * Description: Video thumbnail utility class.
 * Width/height compression and scale compression operate on a Bitmap, while sample-rate
 * compression and quality compression operate on a File or Resource.
 * CreateDate: 2022/11/24 18:48
 * Author: agg
 */
object VideoThumbnailUtils {

    /**
     * Default compressed size for video thumbnails.
     */
    const val THUMBNAIL_DEFAULT_COMPRESS_VALUE = 1024f

    /**
     * Default scale factor for video thumbnails.
     */
    private const val THUMBNAIL_DEFAULT_SCALE_VALUE = 0.5f
    private const val MAX_IMAGE_SIZE = 500 * 1024 // image compression threshold

    /**
     * Compresses a video thumbnail.
     * @param bitmap video thumbnail
     */
    fun compressVideoThumbnail(bitmap: Bitmap): Bitmap? {
        val width: Int = bitmap.width
        val height: Int = bitmap.height
        val max: Int = Math.max(width, height)
        if (max > THUMBNAIL_DEFAULT_COMPRESS_VALUE) {
            val scale: Float = THUMBNAIL_DEFAULT_COMPRESS_VALUE / max
            val w = (scale * width).roundToInt()
            val h = (scale * height).roundToInt()
            return compressVideoThumbnail(bitmap, w, h)
        }
        return bitmap
    }

    /**
     * Compresses a video thumbnail: width/height compression.
     * Note: if the requested width and height differ too much from the original, the image becomes very blurry.
     * @param bitmap video thumbnail
     */
    fun compressVideoThumbnail(bitmap: Bitmap, width: Int, height: Int): Bitmap? {
        return Bitmap.createScaledBitmap(bitmap, width, height, true)
    }

    /**
     * Compresses a video thumbnail: scale compression.
     * Note: the displayed dimensions are unchanged, but memory use drops to a quarter
     * (pixel width and height are each halved).
     */
    fun compressVideoThumbnailMatrix(bitmap: Bitmap): Bitmap? {
        val matrix = Matrix()
        matrix.setScale(THUMBNAIL_DEFAULT_SCALE_VALUE, THUMBNAIL_DEFAULT_SCALE_VALUE)
        return Bitmap.createBitmap(bitmap, 0, 0, bitmap.width, bitmap.height, matrix, true)
    }

    /**
     * Compresses a video thumbnail: sample-rate compression.
     * @param filePath path of the video thumbnail
     */
    fun compressVideoThumbnailSample(filePath: String, width: Int, height: Int): Bitmap? {
        return BitmapFactory.Options().run {
            // With inJustDecodeBounds set to true, BitmapFactory.decodeFile does not create a
            // Bitmap; it only reads the image dimensions and type.
            inJustDecodeBounds = true
            BitmapFactory.decodeFile(filePath, this)
            inSampleSize = calculateInSampleSize(this, width, height)
            inJustDecodeBounds = false
            BitmapFactory.decodeFile(filePath, this)
        }
    }

    /**
     * Compresses an image to the specified size.
     * @param localPath local path of the image
     * @param size storage size of the original image
     */
    fun compressLocalImage(localPath: String, size: Int): Bitmap? {
        return if (size > MAX_IMAGE_SIZE) {
            val scale = size.toDouble() / MAX_IMAGE_SIZE
            val simpleSize = ceil(sqrt(scale)).toInt() // square root, rounded up
            var bitmap = BitmapFactory.Options().run {
                inPreferredConfig = Bitmap.Config.RGB_565
                inSampleSize = simpleSize
                inJustDecodeBounds = false
                val ins = Utils.getApp().contentResolver.openInputStream(Uri.parse(localPath))
                BitmapFactory.decodeStream(ins, null, this)
            }
            val angle = readImageRotation(localPath)
            if (angle != 0 && bitmap != null) {
                bitmap = rotatingImageView(angle, bitmap)
            }
            if (bitmap == null) {
                return null
            }
            val result = tryCompressAgain(bitmap)
            result
        } else { // no compression
            null
        }
    }

    /**
     * Tentatively compresses the image further.
     */
    private fun tryCompressAgain(original: Bitmap): Bitmap {
        val out = ByteArrayOutputStream()
        original.compress(Bitmap.CompressFormat.JPEG, 100, out)
        val matrix = Matrix()
        var resultBp = original
        try {
            var scale = 1.0f
            while (out.toByteArray().size > MAX_IMAGE_SIZE) {
                matrix.setScale(scale, scale) // shrink by 1/10 on each pass
                resultBp =
                    Bitmap.createBitmap(
                        original,
                        0,
                        0,
                        original.width,
                        original.height,
                        matrix,
                        true
                    )
                out.reset()
                resultBp.compress(Bitmap.CompressFormat.JPEG, 100, out)
                scale *= 0.9f
            }
        } catch (e: Exception) {
            e.printStackTrace()
        } finally {
            out.close()
        }
        return resultBp
    }

    // Reads the rotation angle of the image.
    private fun readImageRotation(path: String): Int {
        return kotlin.runCatching {
            val ins = Utils.getApp().contentResolver.openInputStream(Uri.parse(path)) ?: return 0
            val exifInterface = ExifInterface(ins)
            val orientation: Int = exifInterface.getAttributeInt(
                ExifInterface.TAG_ORIENTATION,
                ExifInterface.ORIENTATION_NORMAL
            )
            ins.close()
            return when (orientation) {
                ExifInterface.ORIENTATION_ROTATE_90 -> 90
                ExifInterface.ORIENTATION_ROTATE_180 -> 180
                ExifInterface.ORIENTATION_ROTATE_270 -> 270
                else -> 0
            }
        }.getOrNull() ?: 0
    }

    /**
     * Rotates the image.
     */
    private fun rotatingImageView(angle: Int, bitmap: Bitmap): Bitmap? {
        // Apply the rotation
        val matrix = Matrix()
        matrix.postRotate(angle.toFloat())
        // Create the new image
        return Bitmap.createBitmap(bitmap, 0, 0, bitmap.width, bitmap.height, matrix, true)
    }

    /**
     * Calculates the sample size as a power of 2. For example, for an image with a resolution
     * of 2048x1536, setting inSampleSize to 4 produces a Bitmap of roughly 512x384.
     * @param options
     * @param reqWidth target width
     * @param reqHeight target height
     * @return
     */
    fun calculateInSampleSize(options: BitmapFactory.Options, reqWidth: Int, reqHeight: Int): Int {
        var inSampleSize = 1
        if (options.outHeight > reqHeight || options.outWidth > reqWidth) {
            val halfHeight: Int = options.outHeight / 2
            val halfWidth: Int = options.outWidth / 2
            while (halfHeight / inSampleSize >= reqHeight && halfWidth / inSampleSize >= reqWidth) {
                inSampleSize *= 2
            }
        }
        return inSampleSize
    }

    /**
     * Calculates the sample size as an arbitrary integer.
     */
    fun calculateInSampleSizeFixed(options: BitmapFactory.Options, width: Int, height: Int): Int {
        val hRatio = ceil(options.outHeight.div(height.toDouble())) // greater than 1: image height > screen height
        val wRatio = ceil(options.outWidth.div(width.toDouble())) // greater than 1: image width > screen width
        return if (hRatio > wRatio) hRatio.toInt() else wRatio.toInt()
    }
}
```

5.3 Decoupling the viewmodel and model layers

```kotlin
class MediaDetailViewModel : BaseViewModel() {

    private val model by lazy { MediaDetailModel() }

    val getSysPhotosLiveData: MutableLiveData<List<MediaInfo>> by lazy { MutableLiveData<List<MediaInfo>>() }
    val getSysVideosLiveData: MutableLiveData<List<MediaInfo>> by lazy { MutableLiveData<List<MediaInfo>>() }

    fun getSysVideos(contentResolver: ContentResolver) {
        viewModelScope.launch(Dispatchers.IO) {
            getSysVideosLiveData.postValue(model.getSysVideos(contentResolver))
        }
    }

    fun getSysPhotos(contentResolver: ContentResolver) {
        viewModelScope.launch(Dispatchers.IO) {
            getSysPhotosLiveData.postValue(model.getSysPhotos(contentResolver))
        }
    }
}

open class BaseViewModel : ViewModel()

class MediaDetailModel {

    suspend fun getSysVideos(contentResolver: ContentResolver) =
        VideoInfoUtils.getSysVideos(contentResolver)

    suspend fun getSysPhotos(contentResolver: ContentResolver) =
        PhotoInfoUtils.getSysPhotos(contentResolver)
}
```

5.4 Initializing the View

First, during Activity initialization, the Window is set to 0dof when the window is initialized, so that the visible window follows the user's field of view.

```kotlin
private fun initWindow() {
    Log.i(TAG, "initWindow: ")
    val lp = window.attributes
    lp.dofIndex = 0
    lp.subType = WindowManager.LayoutParams.MB_WINDOW_IMMERSIVE_0DOF
    window.attributes = lp
}
```

Next, add the BannerViewPager component for photos and videos to the layout.

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:id="@+id/parent"
    android:layout_width="match_parent"
    android:layout_height="match_parent">

    <com.zhpan.bannerview.BannerViewPager
        android:id="@+id/bvFeedPhotoContent"
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

Finally, initialize the BannerViewPager component.

```kotlin
private fun initBannerViewPager() {
    (binding.bvFeedPhotoContent as BannerViewPager<MediaInfo>).apply {
        bigDetailAdapter = MediaDetailItemAdapter()
        adapter = bigDetailAdapter
        setLifecycleRegistry(lifecycle)
        setCanLoop(false)
        setAutoPlay(false)
        setIndicatorVisibility(GONE)
        disallowParentInterceptDownEvent(true) // disallow internal interception so the activity can receive the swipe
        registerOnPageChangeCallback(object : ViewPager2.OnPageChangeCallback() {
            /**
             * Called when a page is selected (counting from 0); only called after a successful page turn.
             * @param position position in the collection of the view shown after the page turn
             */
            @SuppressLint("SetTextI18n")
            override fun onPageSelected(position: Int) {
                super.onPageSelected(position)
                // Refresh the previous page (reset image zoom to its initial state, stop video playback)
                Log.i(TAG, "onPageSelected: position = $position")
            }
        })
    }.create()
}

private fun updateViewPager(allMediaInfoList: MutableList<MediaInfo>) {
    Log.i(TAG, "updateViewPager: size = ${allMediaInfoList.size}")
    binding.bvFeedPhotoContent.refreshData(allMediaInfoList)
    binding.bvFeedPhotoContent.setCurrentItem(0, false)
}
```

5.5 Loading and using the BannerViewPager component

First, define the custom adapter MediaDetailItemAdapter.

```kotlin
class MediaDetailItemAdapter : BaseBannerAdapter<MediaInfo>() {

    private var context: Context? = null

    override fun getLayoutId(viewType: Int): Int = R.layout.media_detail_item_adapter

    override fun createViewHolder(
        parent: ViewGroup, itemView: View?, viewType: Int
    ): BaseViewHolder<MediaInfo> {
        context = parent.context
        return super.createViewHolder(parent, itemView, viewType)
    }

    override fun bindData(
        holder: BaseViewHolder<MediaInfo>, mediaInfo: MediaInfo, position: Int, pageSize: Int
    ) {
        val vpViewGroup = holder.findViewById<VideoPlayerViewGroup>(R.id.vpViewGroup)
        val ivAvatarDetail = holder.findViewById<PhotoView>(R.id.ivAvatarDetail)
        val ivAvatarDetailLarge =
            holder.findViewById<SubsamplingScaleImageView>(R.id.ivAvatarDetailLarge)
        if (mediaInfo is PhotoInfo) {
            vpViewGroup.visibility = GONE
            initPhotoInfo(mediaInfo, ivAvatarDetail, ivAvatarDetailLarge)
        } else if (mediaInfo is VideoInfo) {
            vpViewGroup.visibility = VISIBLE
            ivAvatarDetail.visibility = GONE
            ivAvatarDetailLarge.visibility = GONE
            vpViewGroup.initVideoInfo(mediaInfo)
        }
    }

    fun releaseAllVideos() {
        GSYVideoManager.releaseAllVideos()
    }

    private fun initPhotoInfo(
        photoInfo: PhotoInfo,
        ivAvatarDetail: PhotoView,
        ivAvatarDetailLarge: SubsamplingScaleImageView
    ) {
        ivAvatarDetail.visibility = VISIBLE
        ivAvatarDetailLarge.visibility = VISIBLE
        ivAvatarDetail.maximumScale = MAX_SCALE
        ivAvatarDetail.minimumScale = MIN_SCALE
        ivAvatarDetail.scale = MIN_SCALE
        val imageUrl = photoInfo.photoCoverFull
        val thumbUrl = photoInfo.photoCover
        if (imageUrl.isNotEmpty()) {
            ivAvatarDetail.visibility = VISIBLE
            context?.let {
                val isLarge = photoInfo.size > 5000 * 1024 // images larger than about 5 MB are treated as large
                if (!isLarge) {
                    ivAvatarDetail.visibility = VISIBLE
                    Glide.with(it).load(imageUrl)
                        .thumbnail(Glide.with(it).load(thumbUrl))
                        .override(SIZE_ORIGINAL, SIZE_ORIGINAL)
                        .into(ivAvatarDetail)
                    ivAvatarDetailLarge.visibility = INVISIBLE
                } else {
                    ivAvatarDetailLarge.visibility = VISIBLE
                    Glide.with(it).load(imageUrl).downloadOnly(object : SimpleTarget<File>() {
                        override fun onResourceReady(resource: File, transition: Transition<in File>?) {
                            // Images whose width and height both exceed the screen are force-rotated
                            // after being downloaded to media; this has to be prevented here.
                            ivAvatarDetailLarge.orientation =
                                SubsamplingScaleImageView.ORIENTATION_USE_EXIF
                            ivAvatarDetailLarge.setImage(ImageSource.uri(Uri.fromFile(resource)))
                            ivAvatarDetail.visibility = INVISIBLE
                        }
                    })
                }
            }
        } else {
            ivAvatarDetail.visibility = GONE
            ivAvatarDetailLarge.visibility = GONE
        }
    }

    companion object {
        const val MAX_SCALE = 5F
        const val MIN_SCALE = 1F
    }
}
```

The video component needs a custom View and a custom ViewGroup.

```kotlin
class VideoPlayerView : StandardGSYVideoPlayer {

    constructor(context: Context?) : super(context)
    constructor(context: Context?, attrs: AttributeSet?) : super(context, attrs)
    constructor(context: Context?, fullFlag: Boolean?) : super(context, fullFlag)

    override fun getLayoutId(): Int = R.layout.video_player_view

    override fun touchSurfaceMoveFullLogic(absDeltaX: Float, absDeltaY: Float) {
        super.touchSurfaceMoveFullLogic(absDeltaX, absDeltaY)
        // Disable touch seeking; comment out the line below if you need it
        mChangePosition = false
        // Disable touch volume control; comment out the line below if you need it
        mChangeVolume = false
        // Disable touch brightness control; comment out the line below if you need it
        mBrightness = false
    }

    override fun touchDoubleUp(e: MotionEvent?) {
        // super.touchDoubleUp();
        // Double-tap to pause is not needed
    }
}
```

Correspondingly, the core video playback logic lives in VideoPlayerViewGroup.
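Two small calculations recur in that playback logic: turning a SeekBar percentage into a playback position, and formatting a time for the position and duration labels. The plain-Kotlin sketch below illustrates both under stated assumptions. `progressToPositionMs` and `formatVideoTime` are hypothetical helpers; the project's own `convertToVideoTimeFromSecond` is not shown in the article, so the mm:ss format here is a guess, and the clamping is an addition that the article's percentage formula does not include.

```kotlin
import java.util.Locale

/**
 * Maps a SeekBar progress in 0..100 to a playback position in milliseconds,
 * clamped to the range 0..durationMs.
 */
fun progressToPositionMs(progressPercent: Int, durationMs: Long): Long {
    if (durationMs <= 0L) return 0L
    val clamped = progressPercent.coerceIn(0, 100)
    return clamped * durationMs / 100
}

/** Formats whole seconds as mm:ss, or h:mm:ss for an hour or more. */
fun formatVideoTime(totalSeconds: Long): String {
    val s = totalSeconds.coerceAtLeast(0L)
    val hours = s / 3600
    val minutes = (s % 3600) / 60
    val seconds = s % 60
    // Locale.ROOT keeps the digits ASCII regardless of the device locale.
    return if (hours > 0) {
        "%d:%02d:%02d".format(Locale.ROOT, hours, minutes, seconds)
    } else {
        "%02d:%02d".format(Locale.ROOT, minutes, seconds)
    }
}
```

Keeping these as pure functions makes them easy to check in isolation, separate from the player and view state that the ViewGroup manages.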

    class VideoPlayerViewGroup(context: Context, attrs: AttributeSet?) :
        ConstraintLayout(context, attrs), GSYVideoProgressListener, SeekBar.OnSeekBarChangeListener {

        companion object {
            private const val DELAY_DISMISS_3_SECOND = 3000L
            private const val VIDEO_RESOLUTION_BEYOND_2K = 3500f
        }

        private val binding by lazy {
            VideoPlayerViewGroupBinding.inflate(LayoutInflater.from(context), this, true)
        }

        // Hides the play button and the seekbar row.
        private val runnable: Runnable = Runnable {
            binding.playBtn.isVisible = false
            binding.currentPosition.isVisible = false
            binding.seekbar.isVisible = false
            binding.duration.isVisible = false
        }

        private var videoInfo: VideoInfo? = null

        /** Position selected by the last seekbar drag, or -1 if none is pending. */
        private var seekbarTouchFinishProgress = -1L

        /**
         * Position selected by a seekbar drag made before the video was initialized.
         * Once playback starts, the player seeks to this recorded position.
         */
        private var seekbarTouchFinishProgressNotInitial = -1L

        fun initVideoInfo(videoInfo: VideoInfo) {
            this.videoInfo = videoInfo
            updateVideoSeekbar(0, 0, videoInfo.duration)
            if (videoInfo.firstFrame != null) {
                binding.firstFrame.setImageBitmap(videoInfo.firstFrame)
            } else {
                CoroutineScope(Dispatchers.Main).launch {
                    videoInfo.firstFrame = getVideoThumbnail(context, videoInfo.localPathUri)
                    binding.firstFrame.setImageBitmap(videoInfo.firstFrame)
                }
            }
            binding.playBtn.setOnClickListener { handleVideoPlayer() }
            binding.clickScreen.setOnClickListener { handleClickScreen() }
            binding.seekbar.setOnSeekBarChangeListener(this)
            if (!binding.firstFrame.isVisible) restoreVideoPlayer()
            if (!binding.seekbar.isVisible) {
                binding.currentPosition.isVisible = true
                binding.seekbar.isVisible = true
                binding.duration.isVisible = true
                binding.playBtn.isVisible = true
            }
        }

        /** Updates the seekbar and the two time labels. */
        private fun updateVideoSeekbar(progress: Int, currentPosition: Long, duration: Long) {
            binding.currentPosition.text = convertToVideoTimeFromSecond(currentPosition / 1000)
            binding.seekbar.progress = progress
            binding.duration.text = convertToVideoTimeFromSecond(duration / 1000)
        }

        private fun handleVideoPlayer() {
            when {
                binding.firstFrame.isVisible -> {
                    seekbarTouchFinishProgress = -1L
                    binding.firstFrame.isVisible = false
                    binding.playBtn.setImageResource(R.drawable.ic_pause)
                    // Initialize playback.
                    binding.videoPlayer.backButton?.isVisible = false
                    // Videos wider or taller than the 2K threshold use the system player; others use IjkPlayer.
                    if ((videoInfo?.width ?: 0f) > VIDEO_RESOLUTION_BEYOND_2K ||
                        (videoInfo?.height ?: 0f) > VIDEO_RESOLUTION_BEYOND_2K
                    ) {
                        PlayerFactory.setPlayManager(SystemPlayerManager::class.java)
                    } else {
                        PlayerFactory.setPlayManager(IjkPlayerManager::class.java)
                    }
                    binding.videoPlayer.setUp(videoInfo?.localPathUri, true, "")
                    binding.videoPlayer.startPlayLogic()
                    binding.videoPlayer.setGSYVideoProgressListener(this)
                    binding.videoPlayer.setVideoAllCallBack(object : GSYSampleCallBack() {
                        override fun onAutoComplete(url: String?, vararg objects: Any?) {
                            super.onAutoComplete(url, *objects)
                            restoreVideoPlayer()
                            updateVideoSeekbar(0, 0, videoInfo?.duration ?: 0)
                        }
                    })
                    if (binding.seekbar.isVisible) {
                        seekbarDelayDismiss()
                    } else {
                        binding.playBtn.isVisible = false
                    }
                }
                binding.videoPlayer.currentState == GSYVideoView.CURRENT_STATE_PAUSE -> {
                    binding.playBtn.setImageResource(R.drawable.ic_pause)
                    binding.videoPlayer.onVideoResume(false)
                    // The play button and the bottom seekbar are shown or hidden together.
                    if (binding.seekbar.isVisible) {
                        seekbarDelayDismiss()
                    } else {
                        binding.playBtn.isVisible = false
                    }
                }
                binding.videoPlayer.isInPlayingState -> {
                    binding.playBtn.setImageResource(R.drawable.ic_play)
                    binding.videoPlayer.onVideoPause()
                    handler.removeCallbacks(runnable)
                }
            }
        }

        private fun handleClickScreen() {
            when {
                binding.firstFrame.isVisible -> {
                    if (binding.videoPlayer.currentState == -1 || binding.videoPlayer.currentState == CURRENT_STATE_NORMAL) {
                        binding.currentPosition.isVisible = !binding.currentPosition.isVisible
                        binding.seekbar.isVisible = !binding.seekbar.isVisible
                        binding.duration.isVisible = !binding.duration.isVisible
                        binding.playBtn.isVisible = true
                    }
                }
                binding.videoPlayer.currentState == GSYVideoView.CURRENT_STATE_PAUSE -> {
                    binding.currentPosition.isVisible = !binding.currentPosition.isVisible
                    binding.seekbar.isVisible = !binding.seekbar.isVisible
                    binding.duration.isVisible = !binding.duration.isVisible
                    binding.playBtn.isVisible = true
                }
                binding.videoPlayer.isInPlayingState -> {
                    if (binding.playBtn.isVisible) {
                        if (binding.seekbar.isVisible) {
                            handler.post(runnable)
                        } else {
                            binding.currentPosition.isVisible = true
                            binding.seekbar.isVisible = true
                            binding.duration.isVisible = true
                            seekbarDelayDismiss()
                        }
                    } else {
                        if (!binding.seekbar.isVisible) {
                            binding.currentPosition.isVisible = true
                            binding.seekbar.isVisible = true
                            binding.duration.isVisible = true
                        }
                        binding.playBtn.isVisible = true
                        seekbarDelayDismiss()
                    }
                }
            }
        }

        /** Hides the progress controls after a 3-second delay. */
        private fun seekbarDelayDismiss() {
            handler.removeCallbacks(runnable)
            handler.postDelayed(runnable, DELAY_DISMISS_3_SECOND)
        }

        /** Resets the player to its initial, not-yet-playing state. */
        private fun restoreVideoPlayer() {
            binding.firstFrame.isVisible = true
            binding.playBtn.setImageResource(R.drawable.ic_play)
            binding.playBtn.isVisible = true
            binding.currentPosition.isVisible = true
            binding.seekbar.isVisible = true
            binding.duration.isVisible = true
            handler?.removeCallbacks(runnable)
            binding.videoPlayer.onVideoPause()
            binding.videoPlayer.release()
            binding.videoPlayer.onVideoReset()
            binding.videoPlayer.setVideoAllCallBack(null)
        }

        override fun onProgress(progress: Long, secProgress: Long, currentPosition: Long, duration: Long) {
            if (seekbarTouchFinishProgress != -1L && progress <= binding.seekbar.progress) {
                return
            }
            seekbarTouchFinishProgress = -1L
            if (seekbarTouchFinishProgressNotInitial != -1L) {
                seekbarTouchFinishProgress = seekbarTouchFinishProgressNotInitial
                binding.videoPlayer.seekTo(seekbarTouchFinishProgressNotInitial)
                seekbarTouchFinishProgressNotInitial = -1L
                return
            }
            updateVideoSeekbar(progress.toInt(), currentPosition, videoInfo?.duration ?: 0)
        }

        override fun onStartTrackingTouch(seekBar: SeekBar) {
            handler.removeCallbacks(runnable)
        }

        override fun onProgressChanged(seekBar: SeekBar, progress: Int, fromUser: Boolean) {}

        override fun onStopTrackingTouch(seekBar: SeekBar) {
            val position = (seekBar.progress.toLong() + 1) * (videoInfo?.duration ?: 0) / 100
            if (binding.videoPlayer.isInPlayingState) seekbarTouchFinishProgress = position
            binding.currentPosition.text = convertToVideoTimeFromSecond(position / 1000)
            binding.videoPlayer.seekTo(position)
            if (binding.videoPlayer.isInPlayingState &&
                binding.videoPlayer.currentState != GSYVideoView.CURRENT_STATE_PAUSE
            ) {
                seekbarDelayDismiss()
            }
            if (binding.videoPlayer.currentState == -1 || binding.videoPlayer.currentState == CURRENT_STATE_NORMAL) {
                seekbarTouchFinishProgressNotInitial = position
            }
        }
    }

Finally, here are the XML layout files.

1) media_detail_item_adapter.xml

    <?xml version="1.0" encoding="utf-8"?>
    <androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="match_parent"
        android:layout_height="match_parent">

        <com.agg.mocamera.portal.feature.home.view.VideoPlayerViewGroup
            android:id="@+id/vpViewGroup"
            android:layout_width="match_parent"
            android:layout_height="match_parent"
            android:visibility="gone" />

        <com.github.chrisbanes.photoview.PhotoView
            android:id="@+id/ivAvatarDetail"
            android:layout_width="match_parent"
            android:layout_height="match_parent" />

        <com.davemorrissey.labs.subscaleview.SubsamplingScaleImageView
            android:id="@+id/ivAvatarDetailLarge"
            android:layout_width="match_parent"
            android:layout_height="match_parent"
            android:visibility="invisible" />

    </androidx.constraintlayout.widget.ConstraintLayout>

2) video_player_view.xml

    <?xml version="1.0" encoding="utf-8"?>
    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:background="#121212">

        <FrameLayout
            android:id="@+id/surface_container"
            android:layout_width="match_parent"
            android:layout_height="match_parent"
            android:gravity="center" />

    </RelativeLayout>

3) video_player_view_group.xml

    <?xml version="1.0" encoding="utf-8"?>
    <androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
        xmlns:app="http://schemas.android.com/apk/res-auto"
        xmlns:tools="http://schemas.android.com/tools"
        android:id="@+id/videoViewParent"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        tools:ignore="SpUsage">

        <com.agg.mocamera.portal.feature.home.view.VideoPlayerView
            android:id="@+id/videoPlayer"
            android:layout_width="match_parent"
            android:layout_height="match_parent" />

        <View
            android:id="@+id/clickScreen"
            android:layout_width="match_parent"
            android:layout_height="match_parent" />

        <ImageView
            android:id="@+id/firstFrame"
            android:layout_width="match_parent"
            android:layout_height="match_parent"
            android:contentDescription="@null"
            app:layout_constraintBottom_toBottomOf="parent"
            app:layout_constraintEnd_toEndOf="parent"
            app:layout_constraintStart_toStartOf="parent"
            app:layout_constraintTop_toTopOf="parent" />

        <ImageView
            android:id="@+id/playBtn"
            android:layout_width="48dp"
            android:layout_height="48dp"
            android:contentDescription="@null"
            android:src="@drawable/ic_play"
            app:layout_constraintBottom_toBottomOf="parent"
            app:layout_constraintEnd_toEndOf="parent"
            app:layout_constraintStart_toStartOf="parent"
            app:layout_constraintTop_toTopOf="parent" />

        <com.agg.ui.MBTextView
            android:id="@+id/currentPosition"
            android:layout_width="wrap_content"
            android:layout_height="20dp"
            android:layout_marginStart="15dp"
            android:layout_marginBottom="58dp"
            android:gravity="center"
            android:textColor="#FFFFFF"
            android:textSize="12dp"
            app:layout_constraintBottom_toBottomOf="parent"
            app:layout_constraintStart_toStartOf="parent"
            tools:text="00:00" />

        <SeekBar
            android:id="@+id/seekbar"
            style="@style/Widget.AppCompat.ProgressBar.Horizontal"
            android:layout_width="0dp"
            android:layout_height="24dp"
            android:duplicateParentState="false"
            android:max="100"
            android:maxHeight="6dp"
            android:minHeight="6dp"
            android:progress="0"
            android:progressDrawable="@drawable/video_seek_bar_progress"
            android:splitTrack="false"
            android:thumb="@drawable/ic_video_seek_bar_thumb"
            app:layout_constraintBottom_toBottomOf="@id/currentPosition"
            app:layout_constraintEnd_toStartOf="@id/duration"
            app:layout_constraintStart_toEndOf="@id/currentPosition"
            app:layout_constraintTop_toTopOf="@id/currentPosition" />

        <com.agg.ui.MBTextView
            android:id="@+id/duration"
            android:layout_width="wrap_content"
            android:layout_height="20dp"
            android:layout_marginEnd="15dp"
            android:gravity="center"
            android:textColor="#FFFFFF"
            android:textSize="12dp"
            app:layout_constraintBottom_toBottomOf="@id/currentPosition"
            app:layout_constraintEnd_toEndOf="parent"
            app:layout_constraintTop_toTopOf="@id/currentPosition"
            tools:text="04:59" />

    </androidx.constraintlayout.widget.ConstraintLayout>

5.6 Fetching data and loading it into the View
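The activity code in this section issues two independent queries (photos and videos) and builds the pager only once both have reported back, using one "finished" flag per source. That join can be sketched without any Android types. `MediaItem` and `MediaJoin` below are hypothetical stand-ins for the article's `MediaInfo` and `MixMediaInfoList`, and the newest-first sort is an assumption, since what `getMaxMediaList()` returns is not shown in this section.

```kotlin
// Hypothetical stand-in for the article's MediaInfo.
data class MediaItem(val name: String, val dateAddedSec: Long)

// Collects results from two sources and invokes the callback once, after both have arrived.
class MediaJoin(private val onBothLoaded: (List<MediaItem>) -> Unit) {
    private val photos = mutableListOf<MediaItem>()
    private val videos = mutableListOf<MediaItem>()
    private var photosLoaded = false
    private var videosLoaded = false

    /** Records the first photo result; repeated deliveries are ignored. */
    fun onPhotos(items: List<MediaItem>) {
        if (photosLoaded) return
        photosLoaded = true
        photos += items
        publishIfReady()
    }

    /** Records the first video result; repeated deliveries are ignored. */
    fun onVideos(items: List<MediaItem>) {
        if (videosLoaded) return
        videosLoaded = true
        videos += items
        publishIfReady()
    }

    private fun publishIfReady() {
        if (photosLoaded && videosLoaded) {
            // Assumption: the merged list is ordered newest first.
            onBothLoaded((photos + videos).sortedByDescending { it.dateAddedSec })
        }
    }
}
```

Because each source is accepted only once, the callback cannot fire twice even if an observer re-delivers its last value, for example after a configuration change.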

    private var allPhotoInfoList: MutableList<MediaInfo> = mutableListOf()
    private var mixMediaInfoList = MixMediaInfoList()
    private var bigDetailAdapter: MediaDetailItemAdapter? = null

    private fun initAllMediaInfoList() {
        viewModel.getSysPhotos(contentResolver)
        viewModel.getSysVideos(contentResolver)
    }

    override fun initViewModel() {
        viewModel.getSysPhotosLiveData.observe(this) { photoList ->
            Log.i(TAG, "initViewModel: photoList = ${photoList.size}")
            if (!mixMediaInfoList.isLoadPhotoListFinish) {
                mixMediaInfoList.isLoadPhotoListFinish = true
                mixMediaInfoList.photoList.addAll(photoList)
                // Build the pager only after the video list has also arrived.
                if (mixMediaInfoList.isLoadVideoListFinish) {
                    lifecycleScope.launch { updateViewPager(mixMediaInfoList.getMaxMediaList()) }
                }
            }
        }
        viewModel.getSysVideosLiveData.observe(this) { videoList ->
            Log.i(TAG, "initViewModel: videoList = ${videoList.size}")
            if (!mixMediaInfoList.isLoadVideoListFinish) {
                mixMediaInfoList.isLoadVideoListFinish = true
                mixMediaInfoList.videoList.addAll(videoList)
                // Build the pager only after the photo list has also arrived.
                if (mixMediaInfoList.isLoadPhotoListFinish) {
                    lifecycleScope.launch { updateViewPager(mixMediaInfoList.getMaxMediaList()) }
                }
            }
        }
    }

    override fun onDestroy() {
        super.onDestroy()
        bigDetailAdapter?.releaseAllVideos()
    }

5.7 Handling forward and backward swipes on the page
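The two handlers in this section move the pager by exactly one item per swipe and assign `position + 1` or `position - 1` directly. Whether BannerViewPager clamps or wraps an out-of-range index on its own is not established here, so if explicit bounds are wanted, a small pure helper is one option. `steppedIndex` is a hypothetical name, and the sketch assumes a non-looping pager.

```kotlin
/**
 * Returns the index to show after moving [delta] items from [current],
 * kept within 0 until [size]. Returns 0 for an empty list.
 */
fun steppedIndex(current: Int, delta: Int, size: Int): Int {
    if (size <= 0) return 0
    return (current + delta).coerceIn(0, size - 1)
}
```

A handler could then assign `steppedIndex(position, 1, itemCount)` instead of `position + 1`, where `itemCount` is whatever size the adapter currently reports.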

    override fun scrollForward() {
        val position = binding.bvFeedPhotoContent.currentItem
        Log.i(TAG, "scrollForward: position=$position,size=${allPhotoInfoList.size}")
        binding.bvFeedPhotoContent.currentItem = position + 1
    }

    override fun scrollBackward() {
        val position = binding.bvFeedPhotoContent.currentItem
        Log.i(TAG, "scrollBackward: position=$position,size=${allPhotoInfoList.size}")
        binding.bvFeedPhotoContent.currentItem = position - 1
    }

5.8 Gradle dependencies

    // CameraX core library using the camera2 implementation
    // The following line is optional, as the core library is included indirectly by camera-camera2
    // implementation "androidx.camera:camera-core:${camerax_version}"
    implementation "androidx.camera:camera-camera2:${CAMERAX}"
    // If you want to additionally use the CameraX Lifecycle library
    implementation "androidx.camera:camera-lifecycle:${CAMERAX}"
    // If you want to additionally use the CameraX View class
    implementation "androidx.camera:camera-view:${CAMERA_VIEW}"
    // If you want to additionally use the CameraX Extensions library
    // implementation "androidx.camera:camera-extensions:1.0.0-alpha31"
    implementation "com.github.zhpanvip:BannerViewPager:${BANNER_VIEW_PAGER}"
    implementation "com.github.chrisbanes:PhotoView:${PHOTO_VIEW}"
    implementation "com.davemorrissey.labs:subsampling-scale-image-view-androidx:${SUBSAMPLING_SCALE_IMAGE_VIEW_ANDROIDX}"
    // ijkplayer
    implementation "com.github.CarGuo.GSYVideoPlayer:gsyVideoPlayer-java:${GSY_VIDEO_PLAYER}"
    implementation "com.github.CarGuo.GSYVideoPlayer:gsyVideoPlayer-armv7a:${GSY_VIDEO_PLAYER}"
    implementation 'com.tencent.tav:libpag:4.2.41'

    CAMERAX = "1.1.0-alpha11"
    CAMERA_VIEW = "1.0.0-alpha31"
    BANNER_VIEW_PAGER = "3.5.0"
    PHOTO_VIEW = "2.0.0"
    SUBSAMPLING_SCALE_IMAGE_VIEW_ANDROIDX = "3.10.0"
    GSY_VIDEO_PLAYER = "v8.3.4-release-jitpack"

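6. ✅ Summary

The Camera feature on AR glasses can support many interesting product features. This article covers only a basic implementation of photo capture, video recording, and media viewing; further business details should be designed around your own product logic.

Finally, my own knowledge is limited. If you find any mistakes, corrections are welcome. Thank you.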