Iteration of Thought: Leveraging Inner Dialogue for Autonomous Large Language Model Reasoning

URL:https://arxiv.org/abs/2409.12618

Santosh Kumar Radha¹, Yasamin Nouri Jelyani, Ara Ghukasyan¹, and Oktay Goktas¹

¹ Agnostiq Inc., 325 Front St W, Toronto, ON M5V 2Y1
² University of Toronto, 60 St George St, Toronto, Ontario, M5S 1A7, Canada

Abstract

Iterative human engagement is a common and effective means of leveraging the advanced language processing power of large language models (LLMs). Using well-structured prompts in a conversational manner, human users can effectively influence an LLM to develop more thoughtful and accurate responses. Motivated by this insight, we propose the Iteration of Thought (IoT) framework for enhancing LLM responses by generating “thought”-provoking prompts vis-à-vis an input query and the current iteration of an LLM’s response. Unlike static or semi-static approaches, e.g. Chain of Thought (CoT) or Tree of Thoughts (ToT), IoT adapts its reasoning path dynamically, based on evolving context, and without generating alternate explorative thoughts which are ultimately discarded. The three components of the IoT framework are (1) an Inner Dialogue Agent (IDA) responsible for generating instructive, context-specific prompts; (2) an LLM Agent (LLMA) that processes these prompts to refine its responses; and (3) an iterative prompting loop that implements a conversation between the former two components. We introduce two variants of our framework: Autonomous Iteration of Thought (AIoT), where an LLM decides when to stop iterating, and Guided Iteration of Thought (GIoT), which always forces a fixed number of iterations. We investigate the performance of IoT across various datasets, spanning complex reasoning tasks from the GPQA dataset, explorative problem-solving in Game of 24, puzzle solving in Mini Crosswords, and multi-hop question answering from the HotpotQA dataset. Our results show that IoT represents a viable paradigm for autonomous response refinement in LLMs, showcasing significant improvements over CoT and thereby enabling more adaptive and efficient reasoning systems that minimize human intervention.

1 Introduction

The development of Large Language Models (LLMs) like GPT-3, PaLM (Anil et al., 2023), and their successors, including GPT-4 (OpenAI, 2023), Gemini (Team et al., 2023), LLaMA (Dubey et al., 2024), and Claude, has revolutionized natural language processing. LLMs have empowered AI systems to perform a wide range of tasks with remarkable proficiency. In the context of human-LLM interaction, a critical observation from practical experience is that the quality of LLM responses tends to improve with repeated prompting and user feedback. Recent research has demonstrated that naïve prompting can lead to calibration errors, while more sophisticated, iterative prompting strategies significantly improve both accuracy and reliability (Krishna et al., 2024). These results suggest that, given context-appropriate sequences of inputs, LLMs can much more effectively leverage their internal knowledge base (Jiang et al., 2020; Petroni et al., 2019; Talmor et al., 2020; Roberts et al., 2020) to provide richer, more nuanced answers (Sloman, 1996).

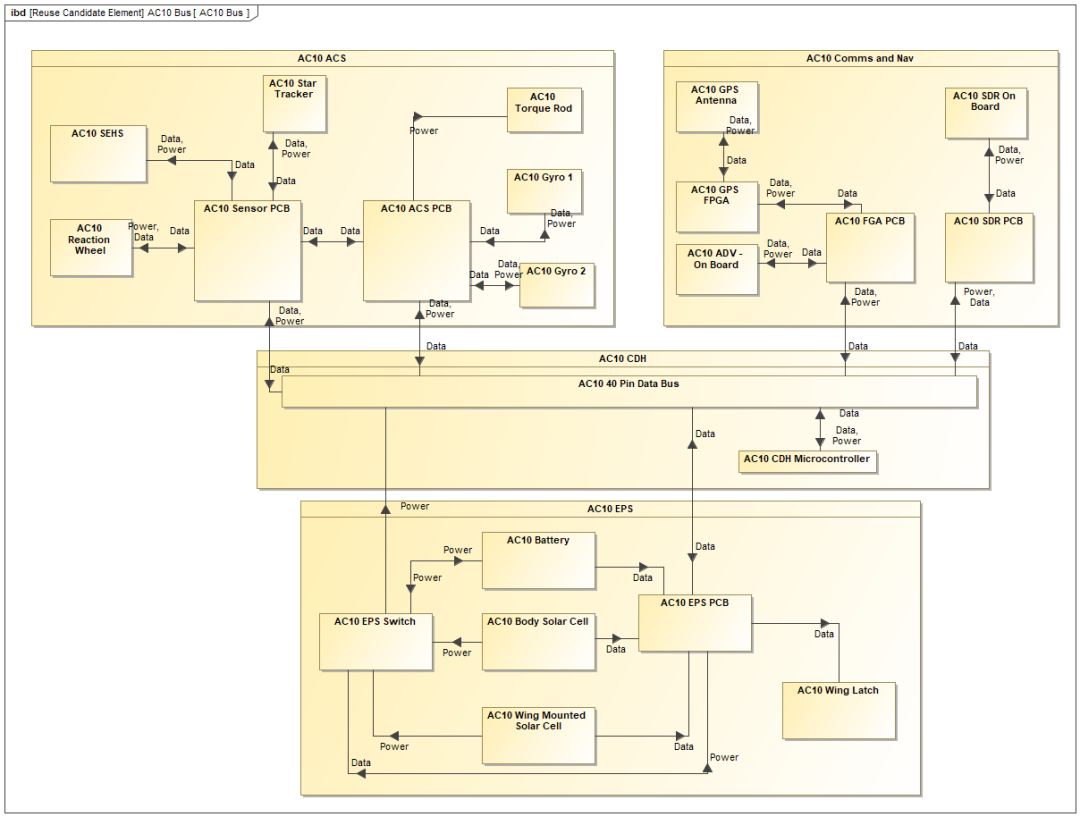

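Figure 1: Illustration of different prompting strategies for enhancing LLM understanding. The Input-Output (IO) method uses a direct input-to-output approach with no intermediate reasoning. Chain-of-Thought (CoT) (Wei et al., 2022) prompting introduces a single, linear reasoning path, while the Tree-of-Thoughts (ToT) (Yao et al., 2024) approach extends this by exploring multiple reasoning paths in parallel. The proposed Iteration of Thought (IoT) framework (this work) introduces an Inner Dialogue Agent (IDA) that dynamically adjusts the reasoning path, enabling adaptive exploration across paths to improve response accuracy.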

A human user’s interaction with an LLM often proceeds as follows: the user poses a question to the LLM, receives an initial response, and, if the answer is incomplete or suboptimal, provides additional guidance to the LLM by reiterating contextual clues (e.g., by reminding the LLM of its role, suggesting additional information to consider, or highlighting specific parts of the response that need refinement). This back-and-forth process helps narrow the focus of the LLM while reducing the research effort required from the user, since the LLM is responsible for the bulk of the reasoning and information retrieval.

We identify two predominant forms of human-LLM interaction. In the first form of interaction, the user simply guides an LLM through its own internal knowledge base. For example, consider a scenario where an LLM generates code that is syntactically incorrect due to a missing bracket. The user might prompt it to “verify the syntax,” leading the LLM to correct the error in a subsequent response. In the second form of interaction, the user introduces new information to improve the LLM’s response. For example, an LLM may be asked to provide up-to-date weather information for a specific city, but lacks access to real-time data. In this case, the user can supply this information (using a tool or API), then prompt the LLM to, e.g., recommend weather-appropriate clothing or destinations to visit in that locale. Altogether, the first form of interaction leads the LLM to better utilize its internal knowledge, whereas the second form of interaction involves augmenting the LLM’s knowledge with new information.

The potential of iterative prompting to improve LLM responses is supported by research showing that prompt phrasing can significantly influence a model’s performance in various settings (Brown, 2020; Opsahl-Ong et al., 2024). Figure 1 illustrates the progression from simple Input-Output (IO) approaches to more advanced methods like Chain-of-Thought (CoT) (Wei et al., 2022) and Tree-of-Thought (ToT) (Yao et al., 2024). CoT introduces sequential reasoning steps along a single linear path, while ToT explores multiple reasoning pathways in parallel, forming a branching structure to optimize the output.

These methods represent “reasoning frameworks” that rely on static or semi-static prompts, which may struggle to adapt to the evolving context of each query and response, potentially limiting the quality of LLM responses. CoT prompting encourages LLMs to articulate their intermediate reasoning steps, which leads to better performance on complex tasks. Similarly, the related ToT approach (among other methods (Sahoo et al., 2024)) reasons along multiple paths to consider a wider breadth of potential responses, most of which are generated and then discarded, leading to better performance on more explorative tasks like solving puzzles or crosswords. Other frameworks like Self-Refine (Madaan et al., 2024) and Self-Verification (Weng et al., 2022) enable LLMs to iteratively critique and refine their outputs, but still rely on static or semi-static prompts. In a broader context, the value of pursuing improved reasoning with inference techniques, as opposed to extensive training, is underscored by more recent advancements such as OpenAI’s new series of o1 models (OpenAI, 2024). These proprietary models are specifically designed to spend more time “thinking” through problems before responding, focusing on inference to solve complex tasks in science, coding, and math. Such developments highlight a broader shift in the AI community toward post-training enhancement of reasoning capabilities as a more scalable approach.

In this work, noting the lack of reasoning frameworks that strive to replicate the dynamic nature of human-LLM interactions, we propose IoT as an autonomous, iterative, and adaptive approach to LLM reasoning without human feedback.

1.1 Iteration of thought (IoT)

Unlike the aforementioned static and semi-static frameworks, IoT utilizes an Inner Dialogue Agent (IDA) to adjust and refine its reasoning path during each iteration. This enables adaptive exploration across different reasoning trees, fostering a more flexible and context-aware response generation process. A comparison to existing methods is shown schematically in Figure 1 .

The core IoT framework is composed of three main components. Further details are also provided in Section 2 .

• Inner dialogue agent (IDA): The IDA functions as a “guide” that dynamically generates context-sensitive prompts based on the original user query and the LLM’s previous response. The adjusted prompts serve to iteratively lead the LLM toward more refined and accurate answers. Mathematically, the IDA can be represented as a function $C:\mathcal{Q}\times\mathcal{R}\times\mathcal{K}'\rightarrow\mathcal{P}$, where $\mathcal{Q}$ is the space of possible queries, $\mathcal{R}$ is the space of potential LLM responses, and $\mathcal{P}$ is the space of generated prompts. At each step, it takes the current query $q\in\mathcal{Q}$ and the previous response $r\in\mathcal{R}$ to generate a new prompt $p\in\mathcal{P}$. This process makes prompt generation dynamic, differentiating IoT from more rigid approaches like CoT and allowing it to adapt to an evolving context.

• LLM agent (LLMA): The LLMA embodies the core reasoning capabilities of an LLM and processes the IDA’s dynamically generated prompts. It uses the LLM’s internal knowledge $K$ to define its responses. Formally, we model the LLMA as a function $L:\mathcal{Q}\times\mathcal{P}\times\mathcal{K}\rightarrow\mathcal{R}$. The LLMA takes as input a query $q$, a prompt $p$, and a knowledge base $K$, then generates a refined response $r$. The LLMA also identifies areas of uncertainty or gaps in its own reasoning, providing feedback for the IDA to adjust prompts accordingly. This interaction creates a closed-loop system that continuously improves the quality of answers without external inputs.

• Iterative prompting loop: The iterative process in IoT involves a back-and-forth between the IDA and LLMA. At each iteration $i$, the IDA generates a new prompt $p_i = C(q, r_{i-1})$ based on the original query $q$ and the LLM’s previous response $r_{i-1}$. The LLMA then responds to $p_i$ with $r_i = L(q, p_i, K)$. This loop continues until a satisfactory answer $r^*$ is found or an arbitrarily set maximum iteration count is reached. This back-and-forth approach allows IoT to navigate complex reasoning paths and efficiently explore various potential solutions. Moreover, introducing distinct LLMs for the IDA and LLMA respectively can allow each agent to function as an open system (Von Bertalanffy, 1950) where internal knowledge is exchanged. In this scenario, the overall system behaves as a closed system with a combined knowledge base, enhancing internal reasoning without external input.
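The three components can be read as a simple control loop. The following is a minimal Python sketch under stated assumptions, not the authors' implementation. The IDA $C$ and LLMA $L$ are plain callables, and the $\mathcal{K}'$ argument of $C$ is left implicit. `is_satisfactory` is a hypothetical stand-in for whatever decides that $r^*$ has been reached, and the toy agents are invented for illustration, echoing the "verify the syntax" example from the introduction. The loop records the inner dialogue as a list of (prompt, response) pairs.

```python
from typing import Callable, List, Optional, Tuple

IDA = Callable[[str, str], str]         # C: (q, r_{i-1}) -> p_i  (K' left implicit)
LLMA = Callable[[str, str, dict], str]  # L: (q, p_i, K) -> r_i


def inner_dialogue(
    query: str,
    ida: IDA,
    llma: LLMA,
    knowledge: dict,
    is_satisfactory: Callable[[str], bool],
    max_iters: int,
) -> List[Tuple[Optional[str], str]]:
    """Run the IDA/LLMA loop; return the transcript of (prompt, response) pairs."""
    response = llma(query, "Initial Prompt", knowledge)  # r_0
    transcript: List[Tuple[Optional[str], str]] = [(None, response)]
    for _ in range(max_iters):
        if is_satisfactory(response):  # r* found: stop early
            break
        prompt = ida(query, response)              # p_i = C(q, r_{i-1})
        response = llma(query, prompt, knowledge)  # r_i = L(q, p_i, K)
        transcript.append((prompt, response))
    return transcript


# Toy agents (hypothetical): the "LLMA" answers carelessly until the IDA asks it to verify.
def toy_llma(query: str, prompt: str, knowledge: dict) -> str:
    return str(knowledge[query]) if "verify" in prompt else "6"


def toy_ida(query: str, previous: str) -> str:
    return f"You answered {previous} to {query!r}; verify the arithmetic step by step."


log = inner_dialogue("2 + 3", toy_ida, toy_llma, {"2 + 3": 5}, lambda r: r == "5", max_iters=3)
for prompt, response in log:
    print(prompt, "->", response)
```

In a real deployment, `ida` and `llma` could wrap two different LLMs, which corresponds to the open-system configuration described in the last bullet.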

In the sections that follow, we present a detailed analysis of our IoT framework, describe our experimental methodology, and discuss empirical results. We also demonstrate the framework’s effectiveness with experimental results on the various datasets, where significant improvements are observed over existing reasoning methods.

2 Framework and implementation

In this work, we use two distinct variants of IoT: Autonomous Iteration of Thought (AIoT) and Guided Iteration of Thought (GIoT). In the AIoT variant, the LLMA itself decides when it has generated a satisfactory response. This decision is reflected in a Boolean output signal, iteration_stop. Termination following a positive signal usually leads to fewer iterations than the enforced maximum. This, in turn, leads to faster evaluation with less exploration, but risks premature stops when facing more complex queries. GIoT employs the opposite, more regimented strategy: by mandating a fixed number of iterations, it aims for comprehensive exploration of reasoning paths to minimize premature convergence, at additional computational cost and with the risk of redundant or repetitive iterations.

We implemented the IoT framework, including both variants, as a Python library (AgnostiqHQ, 2024), using Pydantic (Pydantic, 2024) to provide output schemas for raw responses from LLMs.
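The library's actual schemas are not reproduced here. As a dependency-free illustration, the sketch below swaps Pydantic for a standard-library dataclass with explicit checks. The class name `LLMAResponse` and its `response` field are hypothetical. The `iteration_stop` field mirrors the AIoT signal described above. The idea carries over: the raw LLM reply is parsed into a typed object, and malformed output is rejected rather than silently accepted.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class LLMAResponse:
    """Hypothetical structured LLMA output: the answer text plus the AIoT stop flag."""
    response: str
    iteration_stop: bool

    @classmethod
    def from_json(cls, raw: str) -> "LLMAResponse":
        """Parse and validate a raw JSON reply; raise ValueError if it does not match."""
        data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
        if not isinstance(data, dict):
            raise ValueError("expected a JSON object")
        if not isinstance(data.get("response"), str):
            raise ValueError("field 'response' must be a string")
        if not isinstance(data.get("iteration_stop"), bool):
            raise ValueError("field 'iteration_stop' must be a JSON boolean")
        return cls(response=data["response"], iteration_stop=data["iteration_stop"])


parsed = LLMAResponse.from_json('{"response": "Paris", "iteration_stop": true}')
print(parsed)  # LLMAResponse(response='Paris', iteration_stop=True)
```

This sketch rejects non-Boolean values such as `"yes"` outright. A schema library may apply its own coercion rules, so behaviour on borderline inputs can differ.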

Figure 2: Schematic example of processing a sample query with the IoT framework. A simple question is asked for illustrative purposes. The guided IoT variant (GIoT) is used here, with the number of iterations set to 2. Each grey box contains an individual iteration of IoT, with the IDA shown in yellow and the LLMA in green.

2.1 Autonomous iteration of thought (AIoT)

In AIoT, the LLM also determines at each step whether the answer it has generated is sufficient. This is represented by an output signal, iteration_stop, which, if set to True, indicates that the LLM believes its answer is final and complete. The full AIoT process is shown in pseudocode in Algorithm 1 below. A sample AIoT sequence is also provided in Appendix A.

Algorithm 1: Autonomous Iteration of Thought (AIoT)
Input: query $q\in\mathcal{Q}$; LLM configuration with IDA given by $C:\mathcal{Q}\times\mathcal{R}\times\mathcal{K}'\to\mathcal{P}$ and LLMA given by $L:\mathcal{Q}\times\mathcal{P}\times\mathcal{K}\to\mathcal{R}$; maximum number of iterations $T\in\mathbb{N}^{+}$; and a stopping criterion $\mathcal{F}:\mathcal{R}\times\mathcal{C}\to\{0,1\}$.
1: $r_0 \leftarrow L(q, \text{“Initial Prompt”}, K)$ ▹ Generate the initial response using the LLMA
2: $i \leftarrow 1$ ▹ Initialize the iteration counter
3: iteration_stop $\leftarrow \mathcal{F}(r_0, \mathcal{C})$ ▹ Evaluate the stopping condition for the initial response
4: while $\neg$iteration_stop $\wedge\, i \le T$ do ▹ Continue until the stopping criterion or the maximum number of iterations is reached
5:     $p_i \leftarrow C(q, r_{i-1})$ ▹ IDA generates a new prompt based on the query and the last response
6:     $r_i \leftarrow L(q, p_i, K)$ ▹ LLMA generates a new response to the IDA prompt
7:     iteration_stop $\leftarrow \mathcal{F}(r_i, \mathcal{C})$ ▹ Evaluate the stopping condition for the current response
8:     $i \leftarrow i + 1$ ▹ Increment the iteration counter
9: end while
10: Output: $r_{i-1}$ ▹ The last response that met the stopping criterion, or the final response after $T$ iterations
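Algorithm 1 translates almost line for line into Python. The sketch below is an illustration under assumptions, not the IoT library's API. `ida`, `llma`, and `stop` are hypothetical callables standing in for $C$, $L$, and $\mathcal{F}$. In AIoT, `stop` would simply read the LLMA's own `iteration_stop` flag. The toy agents exist only to make the example runnable. The function returns $r_{i-1}$ together with the number of IDA-guided iterations performed.

```python
from typing import Callable, Tuple


def aiot(
    query: str,
    ida: Callable[[str, str], str],         # C(q, r_{i-1}) -> p_i
    llma: Callable[[str, str, dict], str],  # L(q, p, K) -> r
    knowledge: dict,                        # K
    stop: Callable[[str], bool],            # F(r, .) -> {0, 1}
    max_iters: int,                         # T
) -> Tuple[str, int]:
    """Sketch of Algorithm 1; returns (final response, number of IDA-guided iterations)."""
    if max_iters < 1:
        raise ValueError("T must be a positive integer")
    responses = [llma(query, "Initial Prompt", knowledge)]  # line 1: r_0
    i = 1                                                    # line 2
    iteration_stop = stop(responses[0])                      # line 3
    while not iteration_stop and i <= max_iters:             # line 4
        prompt = ida(query, responses[i - 1])                # line 5: p_i
        responses.append(llma(query, prompt, knowledge))     # line 6: r_i
        iteration_stop = stop(responses[i])                  # line 7
        i += 1                                               # line 8
    return responses[i - 1], i - 1                           # line 10: r_{i-1}


# Toy agents (hypothetical): each refinement increments the previous numeric answer.
def toy_llma(query: str, prompt: str, knowledge: dict) -> str:
    if prompt == "Initial Prompt":
        return "1"
    return str(int(prompt.split()[-1]) + 1)  # the toy IDA puts r_{i-1} at the end of p_i


def toy_ida(query: str, previous: str) -> str:
    return f"Refine your answer to {query}; previous answer: {previous}"


answer, n = aiot("count to 3", toy_ida, toy_llma, {}, stop=lambda r: r == "3", max_iters=5)
print(answer, n)  # 3 2
```

If the initial response already satisfies `stop`, the loop body never runs and $r_0$ is returned after zero iterations. This matches the early exit that makes AIoT cheaper than a fixed-length schedule.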

2.2 Guided iteration of thought (GIoT)

The guided variant of Iteration of Thought (GIoT) represents a more controlled iterative process. In GIoT, the iteration continues for a predefined number of steps $N-1$, and only in the $N$-th iteration is the LLM allowed to decide whether it has reached the final answer. Here, the IDA continues to generate new prompts $p_i = C(q, r_{i-1})$ for the first $N-1$ iterations without allowing the LLM to conclude early. In the final iteration, the LLMA is asked to provide a conclusive answer $r^*$ based on the information accumulated in the previous steps.

Like AIoT, GIoT ensures that the LLM thoroughly explores its internal knowledge space and refines its output to a greater extent. However, unlike AIoT, GIoT admits the cost of additional generations as a compromise to prevent premature conclusion. The full GIoT process is shown in pseudocode in Algorithm 2. A sample GIoT sequence is also provided in Figure 2 .
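Algorithm 2 is not reproduced in this section. The following is therefore only a sketch of the GIoT behaviour as described above: $N-1$ IDA-guided refinements with no early stopping, followed by an $N$-th iteration in which the LLMA is asked for a conclusive answer $r^*$. Three points are assumptions: treating the initial response $r_0$ as separate from the $N$ iterations, the wording of the final instruction (`FINAL_INSTRUCTION`), and the toy agents. `ida` and `llma` are hypothetical callables standing in for $C$ and $L$.

```python
from typing import Callable, List

IDA = Callable[[str, str], str]         # C(q, r_{i-1}) -> p_i
LLMA = Callable[[str, str, dict], str]  # L(q, p, K) -> r

# Hypothetical wording for the instruction that forces a conclusion in the N-th iteration.
FINAL_INSTRUCTION = "\nThis is the last step: give your final, conclusive answer."


def giot(query: str, ida: IDA, llma: LLMA, knowledge: dict, n_iters: int) -> List[str]:
    """Run exactly n_iters IDA-guided iterations; the last element of the result is r*."""
    if n_iters < 1:
        raise ValueError("N must be a positive integer")
    responses = [llma(query, "Initial Prompt", knowledge)]  # r_0
    for i in range(1, n_iters):                             # iterations 1 .. N-1: no early stop
        prompt = ida(query, responses[i - 1])               # p_i = C(q, r_{i-1})
        responses.append(llma(query, prompt, knowledge))    # r_i = L(q, p_i, K)
    final_prompt = ida(query, responses[-1]) + FINAL_INSTRUCTION
    responses.append(llma(query, final_prompt, knowledge))  # N-th iteration yields r*
    return responses


# Toy agents (hypothetical): the LLMA only commits to an answer when told to conclude.
def toy_llma(query: str, prompt: str, knowledge: dict) -> str:
    return "final" if "conclusive" in prompt else "draft"


def toy_ida(query: str, previous: str) -> str:
    return f"Reconsider {query}; you previously said: {previous}"


print(giot("q", toy_ida, toy_llma, {}, n_iters=3))  # ['draft', 'draft', 'draft', 'final']
```

Returning every intermediate response keeps the full trace. It also makes GIoT's extra cost visible: $N+1$ LLMA calls here, regardless of when a correct answer first appears.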

To summarize, the choice of AIoT or GIoT defines the mode of iteration in the core IoT framework. Each variant allows the framework to approach the iterative refinement of LLM responses from different angles.

3 Results

To comprehensively evaluate the IoT framework, we conducted a series of experiments across various models, datasets, and reasoning strategies. Given the computational expense of these evaluations, we selected specific model-dataset combinations to investigate performance and scalability under different conditions. This approach enables us to provide a targeted understanding of how reasoning capability and iteration strategy affect the overall quality of LLM responses. The following sections describe the experiments designed to explore these aspects, including their setups, objectives, and the insights derived from each.

Table 1: Comparison of the accuracy (and relative improvement) of different methods on the GPQA Diamond dataset.

Figure 3: Accuracy comparison of different methods on the GPQA evaluation.

3.1 Assessing IoT on the GPQA questionnaire

In this experiment, we quantify IoT’s ability to accurately answer questions from the GPQA Diamond dataset (Rein et al., 2023). These questions are known to require deep reasoning and comprehensive internal knowledge, with even highly capable LLMs yielding overall scores under 50% (Dubey et al., 2024).

We compare AIoT/GIoT with CoT on GPT-4o mini, a proprietary model (OpenAI, 2023). CoT is a widely used reasoning strategy that involves guiding the model through a step-by-step thinking process. By comparing CoT with AIoT/GIoT, we aim to understand the potential improvements resulting from iterating dynamically rather than following predefined steps.

The results of these experiments are presented in Table 1 and Figure 3. From these, it is clear that conventional CoT performs about on par with the baseline IO approach, indicating that rigid step-by-step reasoning may not be effective for GPQA. Meanwhile, GIoT outperforms IO and CoT, with a modest 2.62% higher accuracy on average. However, GIoT also exhibits larger variance than IO and CoT in its distribution of accuracy scores. One interpretation of this result is that forced iterations can lead to divergence due to hallucination (Huang et al., 2023) in cases where a correct and complete thought pattern is established well before the mandated number of iterations has been performed. AIoT, on the other hand, is more effective at avoiding this issue.

AIoT emerges as the most effective strategy overall, with a 14.11% improvement in average accuracy over the IO baseline and the lowest variance among all methods tested. Lower variance in AIoT’s accuracy scores implies more consistent performance across different types of questions. Together with a higher average score, this superior result is attributed to AIoT’s dynamic, autonomous, context-aware termination of iterations, which prevents unproductive or counterproductive exploration of the response space. Notably, our analysis shows that AIoT completes approximately 60% of tasks within a single iteration and approximately 90% within two iterations. This reflects AIoT’s efficiency in navigating the reasoning space without over-iteration. We therefore infer that AIoT’s advantage, wherever applicable, is avoiding the pitfalls of both under-exploration (as seen in IO and CoT) and over-exploration (a risk associated with GIoT).

3.2 Assessing IoT on explorative problem-solving tasks

To evaluate the effectiveness of our IoT (Iteration of Thought) framework against the state of the art, we conduct a comparative analysis using the Game of 24 and Mini Crosswords tasks. These games, featured prominently in the original ToT paper by Yao et al. (2024), are easy to understand and easy to verify, but challenging to solve. ToT is well-suited for problems that benefit from a wide variety of exploratory reasoning paths, owing to its systematic search strategy that traverses many possible solution graphs to find the optimal answer. Our motivation for this experiment is to assess whether our IoT method can effectively iterate towards optimal solutions without generating a multitude of alternate, discarded responses. With this in mind, our goal in this experiment is to measure the relative advantage of our IoT framework over CoT, while recognizing the inherent advantages of ToT, at least in terms of its overall solution ability, in contexts that benefit from a broader exploratory approach.

The Game of 24 involves generating an arithmetic expression using four given numbers and the basic operations and brackets $\{+, -, \times, \div, (, )\}$ to arrive at the number 24. This task requires not only computational ability but also strategic reasoning to explore different combinations of operations. The dataset for this task, as used in the ToT study, consists of various instances where the challenge lies in finding the most efficient path to the solution amidst multiple possibilities. Similarly, the Mini Crosswords task involves solving 5×5 crossword grids based on a set of clues. Solving these grids requires lexical reasoning and pattern recognition, as well as the ability to generate coherent word sequences that fit both vertical and horizontal constraints. The complexity of the Mini Crosswords task also stems from the need to test the compatibility of multiple potential word fits, refining choices based on feedback and constraints. Both datasets are therefore valuable for assessing a model’s ability to try out various solutions within a reasonable search space.
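Because Game of 24 answers are easy to verify, a checker is short. The following is a sketch of a hypothetical verifier, not part of the IoT library or the ToT benchmark code. It accepts an expression string and the four input numbers and confirms that each number is used exactly once and the value is 24. It parses with Python's `ast` module, so no arbitrary code is executed. It also evaluates with exact rational arithmetic (`fractions.Fraction`), so expressions such as 8 / (3 − 8/3) are judged correctly without floating-point error.

```python
import ast
from collections import Counter
from fractions import Fraction
from typing import List

# Allowed binary operators, evaluated on exact rationals.
_OPS = {
    ast.Add: lambda a, b: a + b,
    ast.Sub: lambda a, b: a - b,
    ast.Mult: lambda a, b: a * b,
    ast.Div: lambda a, b: a / b,  # Fraction division raises ZeroDivisionError on 0
}


def _evaluate(node: ast.AST, used: List[int]) -> Fraction:
    """Evaluate an AST of integer literals and + - * / only, recording the literals used."""
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    raise ValueError(f"disallowed syntax: {type(node).__name__}")


def is_valid_24(expression: str, numbers: List[int]) -> bool:
    """Return True iff `expression` uses each of `numbers` exactly once and equals 24."""
    # Accept the ×, ÷ and − symbols used in the task description.
    expression = expression.replace("×", "*").replace("÷", "/").replace("−", "-")
    try:
        tree = ast.parse(expression, mode="eval")
        used: List[int] = []
        value = _evaluate(tree.body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return False
    return Counter(used) == Counter(numbers) and value == 24


print(is_valid_24("8 / (3 - 8 / 3)", [3, 3, 8, 8]))  # True: 8 / (1/3) = 24
print(is_valid_24("(8 - 3) * 3 + 8", [3, 3, 8, 8]))  # False: evaluates to 23
```

Parentheses need no special handling because they only shape the parse tree. Unary minus, function calls, and other syntax are rejected, matching the restricted operator set of the task.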

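Figure 4: Performance comparison of different methods (GIoT, AIoT, CoT, IO) on the Mini Crossword: Letters, Mini Crossword: Words, and Game of 24 tasks. Box plots show the distribution of average accuracy percentages across trials.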

Results for these tasks are visualized in Figure 4 , which reveals distinct performance differences between the various methods. Notably, GIoT on average outperforms the AIoT, CoT, and IO methods across both tasks. This result is consistent with the understanding that GIoT is a more exploratory alternative to AIoT. By compelling the model to explore multiple reasoning paths, GIoT enhances the likelihood of arriving at a correct answer, aligning with the ToT approach in terms of beneficial brute-force exploration.

Regarding the Mini Crosswords task, the original ToT study (which used GPT-4) demonstrated substantial improvements over CoT, with success rate increases of 92.1% for letters and 284.6% for words (Yao et al., 2024). In comparison, our experiments using the less capable GPT-4o mini model show that GIoT improves the success rate relative to CoT by 35.5% for letters and 90.6% for words. Meanwhile, AIoT shows gains of 28.3% and 74.5%, respectively. Although these differences are smaller than those reported for ToT, they should be considered in the context of the limitations of GPT-4o mini versus GPT-4. It is also important to note that the superior performance of ToT in this task is primarily due to its capacity to explore a broader range of answers, potentially at a higher computational cost than GIoT.

The higher variance observed in the IoT results, particularly in the Mini Crosswords task, suggests a more diverse albeit not always productive exploration of solutions compared to CoT and IO. While this diversity can be advantageous in some scenarios, it may lead to sub-optimal convergence in more constrained problem spaces.

A similar pattern of performance differences emerges in the Game of 24 task. Here, the ToT framework showed a dramatic improvement, with success rates increasing from 4.0% with CoT to 74% with ToT (at a breadth of 5), a relative improvement of 1750% (Yao et al., 2024). In comparison, our GIoT method achieves a notable 266.4% improvement over CoT, while AIoT shows a 168.4% increase. These results reflect the effectiveness of our iterative refinement approach in arithmetic problem-solving scenarios, even though a performance gap remains compared to ToT. The structured, multi-step reasoning of GIoT ensures a more thorough exploration of the solution space, which aligns with the exploratory nature of ToT but operates within the constraints of our closed-system approach. A key distinction between our method and ToT is the feedback mechanism: while ToT benefits from its ability to explore more extensive solution spaces or receive external correctness checks, our methods, especially AIoT, can lead to cases where incorrect answers are confidently selected. Integrating external validation tools or feedback could therefore significantly enhance IoT’s performance on this and similar tasks.
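As a quick check of the ToT figure quoted above, a relative improvement divides the gain in success rate $s$ by the CoT baseline:

$$\text{relative improvement} = \frac{s_{\text{method}} - s_{\text{CoT}}}{s_{\text{CoT}}}\times 100\% = \frac{74\% - 4.0\%}{4.0\%}\times 100\% = \frac{70}{4}\times 100\% = 1750\%$$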

3.3 Assessing IoT on multi-context reasoning and retrieval tasks

In our final experiment, we evaluate IoT on the HotpotQA-Hard dataset, a challenging benchmark for multi-hop question answering that demands sophisticated aggregate reasoning. Unlike simpler tasks that require straightforward information retrieval, HotpotQA involves complex information synthesis across multiple documents, requiring models to shift focus between various contexts to build a coherent answer. This necessitates bridging implicit information gaps, resolving ambiguities, and integrating scattered evidence.

Answering a HotpotQA question often involves several interconnected steps where initial findings must be used to guide further evidence retrieval. This process mirrors the key strengths of IoT: its ability to adaptively explore different reasoning paths, dynamically integrate context, and iteratively refine conclusions. The IoT’s IDA plays a pivotal role here by guiding the LLMA to revisit and adjust its focus based on intermediate outputs, promoting more comprehensive exploration of the problem space. Such a mechanism is crucial for HotpotQA tasks, where the model must constantly re-evaluate earlier conclusions in light of newly synthesized information, ultimately leading to a more robust and accurate final answer.

For this experiment, again using GPT-4o mini as our engine, we benchmark the performance of AIoT against CoT on three evaluation metrics: Exact Match (EM), F1 score, and ROUGE-L score. These metrics capture different facets of multi-hop QA performance: EM measures the proportion of predictions that exactly match the ground truth, providing a stringent gauge of accuracy; the F1 score balances token-level precision and recall, capturing partial correctness; and ROUGE-L evaluates the longest common subsequence between generated and reference answers, rewarding answers that preserve the reference’s content in the same order.
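The paper does not include its scoring code. The following Python sketch shows one common way to compute the three metrics for a single prediction/reference pair, assuming the SQuAD-style answer normalization (lowercasing, stripping punctuation and the articles a/an/the, collapsing whitespace) used by the HotpotQA evaluation script, and an F-measure form of ROUGE-L with β = 1. The function names are illustrative; the official HotpotQA script additionally special-cases yes/no answers, which this sketch omits, and corpus-level scores would be averages over all questions.

```python
import re
import string
from collections import Counter

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """SQuAD/HotpotQA-style normalization: lowercase, drop punctuation,
    drop the articles a/an/the, and collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall (bag of words)."""
    pred = normalize_answer(prediction).split()
    ref = normalize_answer(reference).split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, O(len(a) * len(b))."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(prediction: str, reference: str) -> float:
    """ROUGE-L as an F-measure (beta = 1) over normalized tokens."""
    pred = normalize_answer(prediction).split()
    ref = normalize_answer(reference).split()
    lcs = lcs_length(pred, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(pred), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

The difference between F1 and ROUGE-L is word order: `token_f1("Paris France", "France Paris")` gives 1.0, whereas `rouge_l_f1` on the same pair gives 0.5, because the longest common subsequence contains only one token.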

The dynamic nature of (A)IoT allows it to autonomously adapt the depth of reasoning based on the complexity of the query, facilitating a flexible exploration of reasoning paths that CoT’s static, step-by-step approach may lack. This flexibility enables the IoT framework to better handle the inherent ambiguities of HotpotQA, such as resolving conflicts or disambiguating entities across contexts. This can also serve as a self-correcting mechanism, helping to recognize gaps or errors in reasoning early on and prompting further exploration in subsequent iterations.

Figure 5: Performance comparison of AIoT and CoT on the HotpotQA-Hard dataset.

Our results on the HotpotQA-Hard dataset reveal a clear advantage for AIoT over CoT. As shown in Figure 5 , AIoT achieves an Exact Match (EM) score of 0.53, an F1 score of 0.699, and a ROUGE-L score of 0.72, significantly outperforming CoT on almost every instance of the task. These metrics demonstrate the effectiveness of AIoT in managing the complexities inherent in multi-hop question answering tasks, where models must dynamically integrate and synthesize information from various documents.

The observed performance gains are consistent with the core principles underlying the IoT framework. Using an Inner Dialogue Agent that steers the reasoning path, AIoT can better navigate ambiguities and implicit connections between different pieces of information. Improvements in the F1 score are indicative of AIoT’s ability to partially correct initial errors and refine its answers over multiple iterations. Similarly, the higher ROUGE-L score reflects AIoT’s capacity to generate answers that are not only factually correct but also maintain semantic alignment with the ground truth. These results validate our hypothesis that iterative, adaptive reasoning is essential for tasks requiring complex information synthesis across disjoint contexts.

To contextualize our findings, we compare our AIoT approach with the AgentLite framework introduced by Liu et al. (2024). AgentLite is built on a novel hierarchical multi-agent orchestration technique that supports structured multi-agent systems, in which a manager agent coordinates a set of team agents, each handling a different aspect of reasoning. In their experiments on the HotpotQA dataset, Liu et al. (2024) used AgentLite to implement agents capable of multi-hop reasoning across multiple documents. The action space for these agents was designed with three primary actions: Wikipedia Search, Think, and Finish. Various models were tested within this framework, including GPT-4, a generally more knowledgeable model (OpenAI, 2024) than the GPT-4o mini used in our experiments.

Table 2: Performance comparison of the AIoT framework and AgentLite baselines on the HotpotQA-Hard dataset.

Comparing results from our AIoT approach to AgentLite (Table 2) shows that AIoT achieves higher F1 and EM scores across the board. The AIoT framework’s F1 score of 0.699 and EM score of 0.53 surpass the results of even the most potent models used in the AgentLite experiments, such as GPT-4-0613 and GPT-4-32k-0613. This suggests that while AgentLite offers a robust approach to structured reasoning, it may lack the adaptability and refinement capabilities that AIoT provides. By focusing on an autonomous, self-guided iteration process, AIoT effectively revisits and recalibrates its reasoning, allowing for deeper context integration and a more comprehensive exploration of the problem space. This comparison also validates the advantages of our approach in leveraging dynamic reasoning to outperform more static agentic frameworks in a multi-hop QA scenario.

To further illustrate the effectiveness of the IoT framework, we note that it also outperforms recent methods for multi-hop reasoning on HotpotQA, such as those described by Wang et al. (2024a), Wang et al. (2024b), and Jiapeng et al. (2024). Although these studies demonstrate improvements over the CoT approach, the increases in F1 and EM scores achieved by IoT are larger than those reported in the aforementioned works. While not all of these studies use GPT-4o mini, which makes direct comparisons less straightforward, it remains evident that the jump in accuracy from CoT to our IoT framework is much more pronounced.

4 Strengths and weaknesses of IoT

One qualitative benefit of IoT is its inherent conceptual transparency and explainability. Like CoT and similar methods, IoT provides a clear trace of its reasoning process through a sequence of evolving outputs. However, unlike other “multi-thought” methods, IoT’s sequence also includes explicit guidance generated by the IDA. This means each step is accompanied by a rationale that the underlying LLM treats as equivalent to a prompt from a human user. As a result, post hoc analysis of IoT’s output sequences (example in Appendix A) can reveal the model’s capacity to self-correct when provided with course-adjusting instructions. In addition to enhancing the model’s explainability, this insight can inform more efficient interactions with LLMs in general.

It is important to note that the IoT framework is not inherently at odds with CoT or Self-Consistent CoT (Wang et al., 2022). One could in principle combine IoT with CoT to create a hybrid method, IoT ∘ CoT, in which both the Inner Dialogue Agent (IDA) and the LLM Agent (LLMA) use CoT-based reasoning. Such combinations could amplify the benefits of structured reasoning while retaining the flexibility of iterative refinement. Additionally, while our experiments used the same base LLM for both the IDA and the LLMA, these agents can be made distinct to leverage different models or architectures, changing the total base knowledge of the system to $K \otimes K'$ (Creswell and Shanahan, 2022).
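To make the composition concrete, here is a minimal Python sketch, assuming each agent is simply a function from prompt text to completion text. The prompt wording, the CoT trigger phrase, and the names `with_cot`, `iot_step`, and `iot_compose_cot` are illustrative assumptions, not the prompts used in the paper. Because the IDA and the LLMA are passed in separately, they can wrap different models, which corresponds to the distinct-agent setting with combined knowledge $K \otimes K'$ (LLMA knowledge $K$, IDA knowledge $K'$).

```python
from typing import Callable

# An "agent" is modeled as any function from a prompt string to a completion.
# In practice ida and llma would wrap calls to (possibly different) LLMs.
Agent = Callable[[str], str]

# A common zero-shot chain-of-thought trigger phrase (an assumption; any CoT
# instruction or few-shot CoT exemplars could be substituted).
COT_SUFFIX = "\n\nLet's think step by step."


def with_cot(agent: Agent) -> Agent:
    """Return an agent that appends a CoT instruction to every prompt."""
    def cot_agent(prompt: str) -> str:
        return agent(prompt + COT_SUFFIX)
    return cot_agent


def iot_step(ida: Agent, llma: Agent, query: str, response: str) -> tuple[str, str]:
    """One IoT iteration: the IDA writes guidance, then the LLMA revises."""
    guidance = ida(
        f"Query: {query}\nCurrent response: {response}\n"
        "Write one prompt that would help improve this response."
    )
    revised = llma(f"Query: {query}\nGuidance: {guidance}\nRevise your response.")
    return guidance, revised


def iot_compose_cot(ida: Agent, llma: Agent) -> Callable[[str, str], tuple[str, str]]:
    """Hypothetical IoT ∘ CoT: both agents reason with CoT; ida and llma may be
    backed by different models, so their knowledge bases K' and K can differ."""
    cot_ida, cot_llma = with_cot(ida), with_cot(llma)
    return lambda query, response: iot_step(cot_ida, cot_llma, query, response)
```

Keeping CoT as a wrapper rather than baking it into `iot_step` means the plain IoT loop and the IoT ∘ CoT hybrid share the same iteration logic and differ only in how each agent is prompted.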

Owing largely to the versatility of LLMs, agentic LLM-based frameworks are not difficult to expand and compose. Recent work has suggested that larger ensembles of agents can lead to better reasoning performance (Li et al., 2024), with the rate of improvement diminishing beyond 10-15 agents. A natural progression of IoT could therefore be an expansion of the IDA into a meta-agent consisting of specialized sub-agents, which may or may not be dynamically defined on a per-query basis. Taking the knowledge base of the IDA to be $K' = \bigotimes_{j=1}^{M} K^{j}$, the size of the IDA ensemble, $M \in \mathbb{N}^{+}$, becomes an arbitrary parameter indicating the number of distinct LLMs behind the IDA’s constituent sub-agents. IoT as introduced in this work (with $K = K'$) represents the “smallest” member of this generalized family and still suffices to deliver powerful reasoning capabilities. Based on Li et al. (2024), increasing the ensemble size $M$ could be expected to improve reasoning performance in IoT, though at the cost of additional complexity and a larger hardware budget to power a multitude of distinct LLMs. Setting $M > 1$ also introduces the potentially challenging task of ranking sub-agent outputs or resolving conflicts in their guidance. Regarding complexity, using larger LLMs in a smaller ensemble may be a viable alternative for increasing the size of $K'$ without increasing $M$.
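The paper leaves the design of such a meta-agent open. One minimal way to picture the ranking and conflict-resolution step for $M > 1$ is the Python sketch below, assuming each sub-agent is a prompt-to-text function and that competing guidance is resolved either by a caller-supplied scoring function (for instance, a judge model) or by exact-match majority voting. Both selection rules and the name `ensemble_ida` are illustrative assumptions; exact-match voting is deliberately naive, since free-form guidance from different LLMs will rarely match verbatim. With $M = 1$ the function reduces to a single IDA.

```python
from collections import Counter
from typing import Callable, Optional, Sequence

Agent = Callable[[str], str]


def ensemble_ida(
    sub_agents: Sequence[Agent],
    prompt: str,
    rank: Optional[Callable[[str], float]] = None,
) -> str:
    """Hypothetical meta-IDA built from M = len(sub_agents) sub-agents.

    Each sub-agent (ideally backed by a distinct LLM with knowledge K^j)
    proposes guidance for the same prompt; one proposal is then selected.
    """
    if not sub_agents:
        raise ValueError("the ensemble size M must be at least 1")
    proposals = [agent(prompt) for agent in sub_agents]
    if rank is not None:
        # Conflict resolution by an external scorer; max() keeps the
        # earliest proposal among equal scores.
        return max(proposals, key=rank)
    # Default conflict resolution: majority vote over identical guidance,
    # breaking ties in favour of the earliest sub-agent.
    counts = Counter(proposals)
    top = max(counts.values())
    return next(p for p in proposals if counts[p] == top)
```

A learned or LLM-based `rank` function is where most of the added complexity of $M > 1$ would live in practice; the voting fallback only shows where that decision sits in the loop.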

IoT’s autonomous iteration also offers significant advantages in situations where human intervention is impractical or impossible, that is, where systems must function independently. Human oversight is difficult to achieve, for example, in contexts that demand rapid and continuous decision-making. Here, IoT’s autonomous reasoning capabilities can be a valuable asset. Moreover, the thought sequences generated by IoT (see Figure 2 and Appendix A) could serve as a valuable resource for fine-tuning existing models, potentially enhancing their reasoning capabilities. This dual benefit of autonomy and improved model training makes IoT a powerful tool for building more robust, self-sufficient systems.

Regarding the two variants of IoT, our results demonstrate that while AIoT provides an efficient approach with autonomous decisions to stop iterating, it also often misjudges the completeness of its responses, leading to premature convergence. This limitation could be addressed by incorporating feedback agents (Chen et al., 2023), using techniques like maieutic prompting (Jung et al., 2022), or even allowing for human intervention or external knowledge checks. This would create a semi-autonomous framework that balances efficiency with robustness (Wu et al., 2022). On the other hand, GIoT forces a fixed number of iterations, which can improve performance in multi-step reasoning tasks but may also increase the risk of hallucination if the model confidently drifts into incorrect reasoning. Appropriate techniques to reduce hallucination could further refine GIoT’s utility in complex tasks (Tonmoy et al., 2024).
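The trade-off between the two variants comes down to the stopping rule. The Python sketch below shows one way to express both in a single loop, assuming the IDA and LLMA are plain prompt-to-text functions and that the LLMA signals completion with a textual marker. The marker, the prompt wording, the name `iterate_thought`, and the optional `verifier` hook (standing in for the external knowledge checks or feedback agents discussed above) are illustrative assumptions rather than the paper’s implementation.

```python
from typing import Callable, List, Optional, Tuple

Agent = Callable[[str], str]

# Hypothetical stop marker the LLMA is asked to emit when it judges its
# answer complete (the real prompt and stop signal may differ).
STOP_MARKER = "DONE"


def iterate_thought(
    query: str,
    ida: Agent,
    llma: Agent,
    max_iters: int = 5,
    autonomous: bool = True,
    verifier: Optional[Callable[[str], bool]] = None,
) -> Tuple[str, List[Tuple[str, str]]]:
    """Sketch of an IoT loop.

    autonomous=True  (AIoT-like): stop early once the LLMA emits STOP_MARKER,
                     but only if `verifier` (when given) also accepts the
                     answer, making the loop semi-autonomous.
    autonomous=False (GIoT-like): always run exactly `max_iters` iterations.
    Returns the final response and a (guidance, response) trace.
    """
    response = llma(f"Query: {query}\nAnswer the query.")
    trace = [("", response)]  # the initial response has no IDA guidance
    for _ in range(max_iters):
        guidance = ida(f"Query: {query}\nResponse: {response}\nHow can it be improved?")
        response = llma(
            f"Query: {query}\nGuidance: {guidance}\n"
            f"Revise your answer and end with {STOP_MARKER} if it is final."
        )
        trace.append((guidance, response))
        if autonomous and response.rstrip().endswith(STOP_MARKER):
            # A stop rejected by the verifier simply triggers another iteration.
            if verifier is None or verifier(response):
                break
    return response, trace
```

With `verifier=None` this behaves like an unchecked AIoT run and inherits its risk of premature convergence; supplying a task-specific checker keeps the autonomy while refusing to stop on answers that fail the check, and `max_iters` still bounds the cost in both modes.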

5 Conclusion and future work

In this work, we introduced the Iteration of Thought (IoT) framework, in which an Inner Dialogue Agent (IDA) iteratively converses with an LLM Agent (LLMA) to perform various complex reasoning tasks, such as solving puzzles (Game of 24, Mini Crosswords) and answering difficult questions (GPQA, HotpotQA). We employed two variants of this framework in our experiments, designated “autonomous” (AIoT) and “guided” (GIoT), to compare iteration-terminating mechanisms across these tasks. GIoT, the variant that always performs a fixed number of iterations, performed better than AIoT, the variant that self-determines termination, in Game of 24. On the other hand, AIoT had superior performance on GPQA. Both variants performed similarly on Mini Crosswords and always performed better than the well-known Chain of Thought (CoT) framework, wherever compared. We also compared our IoT framework against the hierarchical AgentLite framework on the multi-context HotpotQA task, finding improvements of approximately 35% in the F1 score and 44% in the EM score over AgentLite. Altogether, our results demonstrate that IoT can successfully introduce productive dynamism into low-complexity agentic frameworks.
