I. Introduction

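A single model tends to be sensitive to noise and often struggles to fit complex data.

Ensemble learning therefore combines several models so that their strengths complement one another and their individual weaknesses are offset, which improves generalization.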

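Ensemble methods generally fall into three families: boosting, bagging, and stacking. Boosting trains multiple weak learners sequentially, each one correcting the errors of its predecessors, to build a strong learner.

Bagging trains multiple independent models, typically on bootstrap samples of the data, and aggregates their predictions to improve generalization.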
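As a rough illustration of the difference, the sketch below fits one boosting ensemble and one bagging ensemble with scikit-learn. The dataset, the choice of `AdaBoostClassifier` and `BaggingClassifier`, and all hyperparameters are assumptions made for this example only.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, train_size=0.7, random_state=0)

# Boosting: weak learners are fitted one after another, and each round
# puts more weight on the samples that earlier rounds misclassified.
boosting = AdaBoostClassifier(n_estimators=20, random_state=0)
boosting.fit(X_train, y_train)

# Bagging: each learner is fitted independently on a bootstrap sample,
# and the ensemble aggregates their predictions.
bagging = BaggingClassifier(n_estimators=20, random_state=0)
bagging.fit(X_train, y_train)

boosting_acc = boosting.score(X_test, y_test)
bagging_acc = bagging.score(X_test, y_test)
print(f"boosting: {boosting_acc:.3f}, bagging: {bagging_acc:.3f}")
```

The exact accuracies depend on the scikit-learn version and the split, so they should be read as a sanity check rather than a benchmark.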

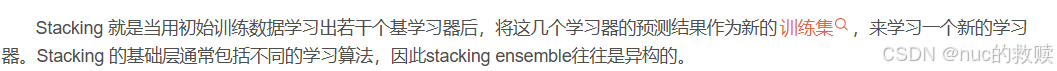

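Stacking combines ideas from both. The training data (X, y) is split into n folds; each base learner is trained on n-1 folds and predicts the held-out fold, so that after cycling through all folds every training sample has an out-of-fold prediction. The predictions of the base learners are then stacked column-wise to form a new feature matrix X. This new X, together with the original y, is used to fit the second-level model.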
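The out-of-fold step can be sketched with scikit-learn's `cross_val_predict`. The base learners, the fold count, and the names `base_learners`, `meta_X`, and `meta_model` are illustrative assumptions, not part of the original post.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_predict
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Hypothetical base learners chosen only for this sketch.
base_learners = [
    RandomForestClassifier(n_estimators=10, random_state=0),
    DecisionTreeClassifier(max_depth=3, random_state=0),
]
kf = KFold(n_splits=5, shuffle=True, random_state=0)

# One column per base learner. Every entry is predicted by a model
# that did not see that sample during training.
meta_X = np.column_stack([
    cross_val_predict(model, X, y, cv=kf) for model in base_learners
])
print(meta_X.shape)  # (150, 2)

# The second-level model is fitted on the stacked predictions and the original y.
meta_model = LogisticRegression(max_iter=1000).fit(meta_X, y)
```

Using out-of-fold predictions keeps the second-level model from being trained on predictions that the base learners made for samples they had already memorized.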

II. Code

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, train_test_split


class MyStacking:
    # Initialize the model parameters
    def __init__(self, estimators, final_estimator, cv=5, method='predict'):
        self.cv = cv
        # Note: `method` is stored but not used below; the base learners
        # always contribute the output of `predict`.
        self.method = method
        self.estimators = estimators
        self.final_estimator = final_estimator

    # Train the model
    def fit(self, X, y):
        # Build the first-level (out-of-fold) outputs
        dataset_train = self.stacking(X, y)
        # Fit the second-level model on the stacked predictions
        self.final_estimator.fit(dataset_train, y)
        return self

    # Stack the out-of-fold predictions of the base learners
    def stacking(self, X, y):
        kf = KFold(n_splits=self.cv, shuffle=True, random_state=2021)
        # One column of first-level outputs per base learner
        dataset_train = np.zeros((X.shape[0], len(self.estimators)))
        for i, model in enumerate(self.estimators):
            for train, val in kf.split(X, y):
                X_train, X_val = X[train], X[val]
                y_train = y[train]
                # Fit on the training folds, predict the held-out fold
                y_val_pred = model.fit(X_train, y_train).predict(X_val)
                dataset_train[val, i] = y_val_pred
            # Refit on all of the training data so that predict() does not
            # rely on a model that only saw the last split
            self.estimators[i] = model.fit(X, y)
        return dataset_train

    # First-level outputs of the fitted base learners for new data
    def _base_predictions(self, X):
        datasets_test = np.zeros((X.shape[0], len(self.estimators)))
        for i, model in enumerate(self.estimators):
            datasets_test[:, i] = model.predict(X)
        return datasets_test

    # Predict with the stacked model
    def predict(self, X):
        return self.final_estimator.predict(self._base_predictions(X))

    # Accuracy of the stacked model
    def score(self, X, y):
        return self.final_estimator.score(self._base_predictions(X), y)


if __name__ == '__main__':
    X, y = load_iris(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, train_size=0.7, random_state=0)

    estimators = [
        RandomForestClassifier(n_estimators=10),
        GradientBoostingClassifier(n_estimators=10)
    ]
    clf = MyStacking(estimators=estimators,
                     final_estimator=LogisticRegression())
    clf.fit(X_train, y_train)

    print(clf.score(X_train, y_train))
    print(clf.score(X_test, y_test))
```
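For comparison, scikit-learn ships its own `StackingClassifier`. The sketch below sets it up to roughly mirror `MyStacking`: the same two base learners, a logistic-regression second level, and class labels as meta-features via `stack_method="predict"`. The estimator names `"rf"` and `"gb"`, the fixed `random_state` values, and `max_iter=1000` are assumptions added for this example.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import (GradientBoostingClassifier,
                              RandomForestClassifier, StackingClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, train_size=0.7, random_state=0)

# Unlike MyStacking, StackingClassifier expects (name, estimator) pairs.
estimators = [
    ("rf", RandomForestClassifier(n_estimators=10, random_state=0)),
    ("gb", GradientBoostingClassifier(n_estimators=10, random_state=0)),
]
clf = StackingClassifier(
    estimators=estimators,
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,
    stack_method="predict",  # use predicted class labels as meta-features
)
clf.fit(X_train, y_train)

test_acc = clf.score(X_test, y_test)
print(test_acc)
```

Leaving `stack_method` at its default of `"auto"` would feed class probabilities to the second-level model where available, which usually carries more information than hard labels.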
