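This post is a translation of the author's blog post of the same name, published in the Amazon Web Services developer community in September 2024: "Mastering Amazon Bedrock Custom Models Fine-tuning (Part 2): Data Preparation for Fine-tuning"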


Overview

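In Part 1 of this series, 《Amazon Bedrock 模型微调实践(一):微调基础篇》, we explored fine-tuning and retrieval-augmented generation (RAG), gave an overview of both, and offered guidance on choosing between them for a given use case. We also covered how to get started with fine-tuning and walked through an example of fine-tuning a Llama model with Amazon SageMaker, including data preprocessing, hyperparameter tuning, and evaluation, to help developers understand the fine-tuning process.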

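In this post, we walk you through creating the required resources and preparing the datasets, so that the data is ready for fine-tuning the Claude 3 Haiku model with Amazon Bedrock in the next post.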

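By the end of this walkthrough, you will have created an IAM role, an S3 bucket, and training, validation, and test datasets in the format required for the fine-tuning job in the next post.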

Prerequisites

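Before you start preparing the data, make sure you have the permissions needed to create and manage IAM roles and S3 buckets, and to access Amazon Bedrock. If you are not using an administrator role, attach the following managed policies to your IAM role: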

- IAMFullAccess
- AmazonS3FullAccess
- AmazonBedrockFullAccess

You can also create custom models in the Amazon Bedrock console by following the documentation.

Setup

First, install or upgrade the required Python packages to the specified versions:

!pip install --upgrade pip

%pip install --no-build-isolation --force-reinstall "boto3>=1.28.57" "awscli>=1.29.57" "botocore>=1.31.57"

!pip install -qU --force-reinstall langchain typing_extensions pypdf urllib3==2.1.0

!pip install -qU "ipywidgets>=7,<8"

!pip install jsonlines

!pip install datasets==2.15.0

!pip install pandas==2.1.3

!pip install matplotlib==3.8.2

Then, import all the required libraries and dependencies:

import warnings

warnings.filterwarnings('ignore')

import json

import os

import sys

import boto3

import time

import pprint

from datasets import load_dataset

import random

import jsonlines

Next, set up the clients for the AWS services we will use, including S3 and Bedrock:

session = boto3.session.Session()

region = session.region_name

sts_client = boto3.client('sts')

account_id = sts_client.get_caller_identity()["Account"]

s3_suffix = f"{region}-{account_id}"

bucket_name = f"bedrock-haiku-customization-{s3_suffix}"

s3_client = boto3.client('s3')

bedrock = boto3.client(service_name="bedrock")

bedrock_runtime = boto3.client(service_name="bedrock-runtime")

iam = boto3.client('iam', region_name=region)

import uuid

suffix = str(uuid.uuid4())

role_name = "BedrockRole-" + suffix

s3_bedrock_finetuning_access_policy="BedrockPolicy-" + suffix

customization_role = f"arn:aws:iam::{account_id}:role/{role_name}"

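You can also print the main configuration values, such as the region, role name, S3 bucket name, and policy name, so that you can find them whenever you need them: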

print("region:", region)

print("role_name:", role_name)

print("bucket_name:", bucket_name)

print("s3_bedrock_finetuning_access_policy:", s3_bedrock_finetuning_access_policy)

print("customization_role:", customization_role)

Create an S3 bucket for the fine-tuning data

Create the S3 bucket that will store the datasets used to fine-tune the model:

# Create an S3 bucket for the fine-tuning datasets

s3bucket = s3_client.create_bucket(
    Bucket=bucket_name,
    ## Uncomment the following if you run into errors
    # CreateBucketConfiguration={'LocationConstraint': region},
)
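Whether `CreateBucketConfiguration` is needed depends on the region: S3 rejects an explicit `us-east-1` location constraint, while buckets in other regions need one. The following is a minimal sketch of a helper that builds the arguments accordingly. The function name `build_create_bucket_kwargs` and the bucket name `demo-bucket` are hypothetical and are not part of boto3 or of the original notebook.

```python
def build_create_bucket_kwargs(bucket_name: str, region: str) -> dict:
    """Return keyword arguments suitable for s3_client.create_bucket()."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location and must not be passed as a constraint;
    # every other region needs an explicit LocationConstraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


# Illustrative values only
print(build_create_bucket_kwargs("demo-bucket", "us-east-1"))
print(build_create_bucket_kwargs("demo-bucket", "eu-west-1"))
```

With the client defined earlier, the call would then look like `s3_client.create_bucket(**build_create_bucket_kwargs(bucket_name, region))`.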

Create the role and policy

Next, create the role and policy needed to run model customization (fine-tuning) jobs on Amazon Bedrock.

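The following JSON object defines the trust relationship that allows the Amazon Bedrock service to assume a role, so that it can communicate with the other AWS services it needs. The conditions restrict who can assume the role to the specified account ID and to one specific Bedrock component, model customization jobs (`model-customization-job` in the source ARN).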

ROLE_DOC = f"""{{
    "Version": "2012-10-17",
    "Statement": [
        {{
            "Effect": "Allow",
            "Principal": {{
                "Service": "bedrock.amazonaws.com"
            }},
            "Action": "sts:AssumeRole",
            "Condition": {{
                "StringEquals": {{
                    "aws:SourceAccount": "{account_id}"
                }},
                "ArnEquals": {{
                    "aws:SourceArn": "arn:aws:bedrock:{region}:{account_id}:model-customization-job/*"
                }}
            }}
        }}
    ]
}}
"""

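The following JSON object defines the permissions of the role that Amazon Bedrock will assume. It grants access to the S3 bucket that stores our fine-tuning datasets and allows a set of bucket and object operations on it: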

ACCESS_POLICY_DOC = f"""{{
    "Version": "2012-10-17",
    "Statement": [
        {{
            "Effect": "Allow",
            "Action": [
                "s3:AbortMultipartUpload",
                "s3:DeleteObject",
                "s3:PutObject",
                "s3:GetObject",
                "s3:GetBucketAcl",
                "s3:GetBucketNotification",
                "s3:ListBucket",
                "s3:PutBucketNotification"
            ],
            "Resource": [
                "arn:aws:s3:::{bucket_name}",
                "arn:aws:s3:::{bucket_name}/*"
            ]
        }}
    ]
}}"""
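Writing JSON inside an f-string requires doubling every literal brace, which is easy to get wrong. As an alternative sketch, not the approach used in the original notebook, the same two documents can be built as Python dictionaries and serialized with `json.dumps`. The function names and the placeholder account ID and bucket name below are hypothetical.

```python
import json


def build_trust_policy(account_id: str, region: str) -> str:
    """Trust policy that lets Bedrock model customization jobs assume the role."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "bedrock.amazonaws.com"},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "StringEquals": {"aws:SourceAccount": account_id},
                    "ArnEquals": {
                        "aws:SourceArn": (
                            f"arn:aws:bedrock:{region}:{account_id}"
                            ":model-customization-job/*"
                        )
                    },
                },
            }
        ],
    }
    return json.dumps(policy, indent=4)


def build_s3_access_policy(bucket_name: str) -> str:
    """Permissions policy granting access to the fine-tuning data bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:AbortMultipartUpload",
                    "s3:DeleteObject",
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:GetBucketAcl",
                    "s3:GetBucketNotification",
                    "s3:ListBucket",
                    "s3:PutBucketNotification",
                ],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }
    return json.dumps(policy, indent=4)


# Placeholder values for illustration only
trust_doc = build_trust_policy("111122223333", "us-east-1")
access_doc = build_s3_access_policy("example-bucket")
print(trust_doc)
```

Both functions return strings, so they can be passed to `AssumeRolePolicyDocument` and `PolicyDocument` in the same way as `ROLE_DOC` and `ACCESS_POLICY_DOC`.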

Create the role and the policy, and print each response so that you can inspect the details:

response = iam.create_role(
    RoleName=role_name,
    AssumeRolePolicyDocument=ROLE_DOC,
    Description="Role for Bedrock to access S3 for haiku finetuning",
)

pprint.pp(response)

role_arn = response["Role"]["Arn"]

pprint.pp(role_arn)

response = iam.create_policy(
    PolicyName=s3_bedrock_finetuning_access_policy,
    PolicyDocument=ACCESS_POLICY_DOC,
)

pprint.pp(response)

policy_arn = response["Policy"]["Arn"]

pprint.pp(policy_arn)

Finally, attach the policy to the role:

iam.attach_role_policy(
    RoleName=role_name,
    PolicyArn=policy_arn,
)

Prepare the CNN news article dataset for fine-tuning and evaluation

The dataset we use is a collection of news articles from CNN together with their summaries. For more information about the dataset, see: https://huggingface.co/datasets/cnn_dailymail?trk=cndc-detail

First, load the CNN news article dataset from Hugging Face:

# Load the CNN dataset from Hugging Face

dataset = load_dataset("cnn_dailymail", '3.0.0')

print(dataset)

The printed output shows the number of articles in each split:

DatasetDict({
    train: Dataset({
        features: ['article', 'highlights', 'id'],
        num_rows: 287113
    })
    validation: Dataset({
        features: ['article', 'highlights', 'id'],
        num_rows: 13368
    })
    test: Dataset({
        features: ['article', 'highlights', 'id'],
        num_rows: 11490
    })
})

The dataset contains three splits: train, validation, and test:

1/ The train split has 287,113 samples

2/ The validation split has 13,368 samples

3/ The test split has 11,490 samples

To fine-tune the Haiku model, the training data must be in JSONL format, with each line representing one training record, as shown below:

{"system": string, "messages": [{"role": "user", "content": string}, {"role": "assistant", "content": string}]}

{"system": string, "messages": [{"role": "user", "content": string}, {"role": "assistant", "content": string}]}

{"system": string, "messages": [{"role": "user", "content": string}, {"role": "assistant", "content": string}]}

Specifically, the training data format must align with the data requirements of the Messages API described in this documentation: https://docs.aws.amazon.com/bedrock/latest/userguide/model-parameters-anthropic-claude-messages.html?trk=cndc-detail

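In each line, the system message is optional. It provides context and instructions for the Haiku model, such as a specific goal or role, and is also known as the system prompt.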

The `user` content corresponds to the user's instruction, and the `assistant` content is the response we expect the fine-tuned model to give.
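A quick structural check can catch malformed records before a fine-tuning job is submitted. The sketch below is not an official validator: the function name `validate_record` is hypothetical, and it assumes that turns alternate between `user` and `assistant`, starting with `user` and ending with `assistant`, as in the format shown above.

```python
def validate_record(record: dict) -> list:
    """Return a list of problems found in one training record (empty if none)."""
    problems = []
    if "system" in record and not isinstance(record["system"], str):
        problems.append("'system' must be a string when present")

    messages = record.get("messages")
    if not isinstance(messages, list) or not messages:
        return problems + ["'messages' must be a non-empty list"]

    # Assumed rule: roles alternate user/assistant, starting with user.
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"message {i}: must be an object")
            continue
        expected_role = "user" if i % 2 == 0 else "assistant"
        if message.get("role") != expected_role:
            problems.append(f"message {i}: expected role '{expected_role}'")
        content = message.get("content")
        if not isinstance(content, str) or not content:
            problems.append(f"message {i}: 'content' must be a non-empty string")

    if len(messages) % 2 != 0:
        problems.append("the last message must come from the assistant")
    return problems


good = {
    "system": "You summarize news articles.",
    "messages": [
        {"role": "user", "content": "Summarize: ..."},
        {"role": "assistant", "content": "A short summary."},
    ],
}
bad = {"messages": [{"role": "assistant", "content": "No user turn."}]}

print(validate_record(good))
print(validate_record(bad))
```

Returning a list of messages rather than raising on the first error makes it easier to report every issue in a large dataset in one pass.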

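A common prompt structure for instruction fine-tuning includes:

1/ A system prompt

2/ An instruction

3/ An input that provides additional context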

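The following code defines the system prompt that will be passed to the Messages API, and the instruction header that will be prepended to each article. The instruction header and the article together form the user content of each data point.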

system_string = "Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request."

instruction = """instruction:

Summarize the news article provided below.

input:
"""

For the `assistant` part, we use the article's summary (the highlights field). The code that converts the data points is shown below:

datapoints_train=[]

for dp in dataset['train']:
    temp_dict={}
    temp_dict["system"] = system_string
    temp_dict["messages"] = [
        {"role": "user", "content": instruction+dp['article']},
        {"role": "assistant", "content": dp['highlights']}
    ]
    datapoints_train.append(temp_dict)
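The same conversion can be wrapped in a small function and tried on a single row without downloading the dataset. This is a sketch under stated assumptions: the function name `to_record` and the sample row are made up for illustration, while the system prompt and instruction header follow the strings defined above.

```python
import json

SYSTEM_STRING = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request."
)
INSTRUCTION = "instruction:\n\nSummarize the news article provided below.\n\ninput:\n"


def to_record(article: str, highlights: str) -> dict:
    """Build one training record from an article and its reference summary."""
    return {
        "system": SYSTEM_STRING,
        "messages": [
            {"role": "user", "content": INSTRUCTION + article},
            {"role": "assistant", "content": highlights},
        ],
    }


# Made-up sample standing in for one row of the dataset
sample = {"article": "A short made-up article.", "highlights": "A made-up summary."}
record = to_record(sample["article"], sample["highlights"])

# json.dumps escapes the newlines in the prompt, so the record stays on one line
line = json.dumps(record)
print(line)
```

Because each record serializes to a single line, the newlines inside the instruction header do not break the one-record-per-line JSONL layout.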

Here is an example of a processed data point:

print(datapoints_train[4])

{'system': 'Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.', 'messages': [{'role': 'user', 'content': 'instruction:\n\nSummarize the news article provided below.\n\ninput:\n(CNN) -- The National Football League has indefinitely suspended Atlanta Falcons quarterback Michael Vick without pay, officials with the league said Friday. NFL star Michael Vick is set to appear in court Monday. A judge will have the final say on a plea deal. Earlier, Vick admitted to participating in a dogfighting ring as part of a plea agreement with federal prosecutors in Virginia. "Your admitted conduct was not only illegal, but also cruel and reprehensible. Your team, the NFL, and NFL fans have all been hurt by your actions," NFL Commissioner Roger Goodell said in a letter to Vick. Goodell said he would review the status of the suspension after the legal proceedings are over. In papers filed Friday with a federal court in Virginia, Vick also admitted that he and two co-conspirators killed dogs that did not fight well. Falcons owner Arthur Blank said Vick\'s admissions describe actions that are "incomprehensible and unacceptable." The suspension makes "a strong statement that conduct which tarnishes the good reputation of the NFL will not be tolerated," he said in a statement. Watch what led to Vick\'s suspension » . Goodell said the Falcons could "assert any claims or remedies" to recover $22 million of Vick\'s signing bonus from the 10-year, $130 million contract he signed in 2004, according to The Associated Press. Vick said he would plead guilty to one count of "Conspiracy to Travel in Interstate Commerce in Aid of Unlawful Activities and to Sponsor a Dog in an Animal Fighting Venture" in a plea agreement filed at U.S. District Court in Richmond, Virginia. 
The charge is punishable by up to five years in prison, a $250,000 fine, "full restitution, a special assessment and 3 years of supervised release," the plea deal said. Federal prosecutors agreed to ask for the low end of the sentencing guidelines. "The defendant will plead guilty because the defendant is in fact guilty of the charged offense," the plea agreement said. In an additional summary of facts, signed by Vick and filed with the agreement, Vick admitted buying pit bulls and the property used for training and fighting the dogs, but the statement said he did not bet on the fights or receive any of the money won. "Most of the \'Bad Newz Kennels\' operations and gambling monies were provided by Vick," the official summary of facts said. Gambling wins were generally split among co-conspirators Tony Taylor, Quanis Phillips and sometimes Purnell Peace, it continued. "Vick did not gamble by placing side bets on any of the fights. Vick did not receive any of the proceeds from the purses that were won by \'Bad Newz Kennels.\' " Vick also agreed that "collective efforts" by him and two others caused the deaths of at least six dogs. Around April, Vick, Peace and Phillips tested some dogs in fighting sessions at Vick\'s property in Virginia, the statement said. "Peace, Phillips and Vick agreed to the killing of approximately 6-8 dogs that did not perform well in \'testing\' sessions at 1915 Moonlight Road and all of those dogs were killed by various methods, including hanging and drowning. "Vick agrees and stipulates that these dogs all died as a result of the collective efforts of Peace, Phillips and Vick," the summary said. Peace, 35, of Virginia Beach, Virginia; Phillips, 28, of Atlanta, Georgia; and Taylor, 34, of Hampton, Virginia, already have accepted agreements to plead guilty in exchange for reduced sentences. Vick, 27, is scheduled to appear Monday in court, where he is expected to plead guilty before a judge. See a timeline of the case against Vick » . 
The judge in the case will have the final say over the plea agreement. The federal case against Vick focused on the interstate conspiracy, but Vick\'s admission that he was involved in the killing of dogs could lead to local charges, according to CNN legal analyst Jeffrey Toobin. "It sometimes happens -- not often -- that the state will follow a federal prosecution by charging its own crimes for exactly the same behavior," Toobin said Friday. "The risk for Vick is, if he makes admissions in his federal guilty plea, the state of Virginia could say, \'Hey, look, you admitted violating Virginia state law as well. We\'re going to introduce that against you and charge you in our court.\' " In the plea deal, Vick agreed to cooperate with investigators and provide all information he may have on any criminal activity and to testify if necessary. Vick also agreed to turn over any documents he has and to submit to polygraph tests. Vick agreed to "make restitution for the full amount of the costs associated" with the dogs that are being held by the government. "Such costs may include, but are not limited to, all costs associated with the care of the dogs involved in that case, including if necessary, the long-term care and/or the humane euthanasia of some or all of those animals." Prosecutors, with the support of animal rights activists, have asked for permission to euthanize the dogs. But the dogs could serve as important evidence in the cases against Vick and his admitted co-conspirators. Judge Henry E. Hudson issued an order Thursday telling the U.S. Marshals Service to "arrest and seize the defendant property, and use discretion and whatever means appropriate to protect and maintain said defendant property." Both the judge\'s order and Vick\'s filing refer to "approximately" 53 pit bull dogs. After Vick\'s indictment last month, Goodell ordered the quarterback not to report to the Falcons training camp, and the league is reviewing the case. 
Blank told the NFL Network on Monday he could not speculate on Vick\'s future as a Falcon, at least not until he had seen "a statement of facts" in the case. E-mail to a friend . CNN\'s Mike Phelan contributed to this report.'}, {'role': 'assistant', 'content': "NEW: NFL chief, Atlanta Falcons owner critical of Michael Vick's conduct .\nNFL suspends Falcons quarterback indefinitely without pay .\nVick admits funding dogfighting operation but says he did not gamble .\nVick due in federal court Monday; future in NFL remains uncertain ."}]}

The validation and test datasets go through the same preprocessing, as shown in the code below.

datapoints_valid=[]

for dp in dataset['validation']:
    temp_dict={}
    temp_dict["system"] = system_string
    temp_dict["messages"] = [
        {"role": "user", "content": instruction+dp['article']},
        {"role": "assistant", "content": dp['highlights']}
    ]
    datapoints_valid.append(temp_dict)

datapoints_test=[]

for dp in dataset['test']:
    temp_dict={}
    temp_dict["system"] = system_string
    temp_dict["messages"] = [
        {"role": "user", "content": instruction+dp['article']},
        {"role": "assistant", "content": dp['highlights']}
    ]
    datapoints_test.append(temp_dict)

Next, we define some helper functions.

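The first function processes the data points further by limiting the number of data points in each dataset and the maximum string length of each data point. The second function converts our datasets into JSONL files, as shown below: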

def dp_transform(data_points,num_dps,max_dp_length):
    """
    This function filters and selects a subset of data points from the provided list
    based on the specified maximum length and desired number of data points.
    """
    lines=[]
    for dp in data_points:
        if len(dp['system']+dp['messages'][0]['content']+dp['messages'][1]['content'])<=max_dp_length:
            lines.append(dp)
    random.shuffle(lines)
    lines=lines[:num_dps]
    return lines

def jsonl_converter(dataset,file_name):
    """
    This function writes the provided dataset to a JSONL (JSON Lines) file.
    """
    print(file_name)
    with jsonlines.open(file_name, 'w') as writer:
        for line in dataset:
            writer.write(line)
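Note that `dp_transform` measures length in characters with `len`, not in tokens, and that `random.shuffle` gives a different subset on every run. The following sketch is a variant, not the notebook's function: `select_datapoints`, its `seed` parameter, and the toy data are hypothetical. It applies the same filter and adds a seed so that the selection is reproducible.

```python
import random


def select_datapoints(data_points, num_dps, max_dp_length, seed=0):
    """Keep records whose total character count fits, then sample num_dps of them."""

    def total_chars(dp):
        return len(dp["system"]) + sum(len(m["content"]) for m in dp["messages"])

    eligible = [dp for dp in data_points if total_chars(dp) <= max_dp_length]
    # A dedicated Random instance keeps the choice reproducible and leaves
    # the global random state untouched.
    rng = random.Random(seed)
    return rng.sample(eligible, min(num_dps, len(eligible)))


def make_dp(n):
    """Toy record whose user content is n characters long."""
    return {
        "system": "s",
        "messages": [
            {"role": "user", "content": "u" * n},
            {"role": "assistant", "content": "a"},
        ],
    }


toy = [make_dp(n) for n in (5, 50, 500, 5000)]
subset = select_datapoints(toy, num_dps=2, max_dp_length=100)
print(sorted(len(dp["messages"][0]["content"]) for dp in subset))
```

Only the two short records pass the 100-character filter here, so they are the ones selected. For two-message records like those in this post, the character count matches the one computed by `dp_transform`.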

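The Haiku model has the following requirements for fine-tuning datasets:

- The context length can be up to 32,000 tokens
- The training dataset cannot exceed 10,000 records
- The validation dataset cannot exceed 1,000 records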
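These limits can be checked before uploading anything. The sketch below uses the record limits listed above. The token check is only a rough estimate: the ratio of about 4 characters per token is an assumption for English text, not the actual Claude tokenizer, and the names `estimate_tokens` and `check_limits` are hypothetical.

```python
MAX_TRAIN_RECORDS = 10_000
MAX_VALIDATION_RECORDS = 1_000
MAX_CONTEXT_TOKENS = 32_000
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not the real tokenizer


def estimate_tokens(record: dict) -> int:
    """Very rough token estimate based on character count."""
    chars = len(record.get("system", ""))
    chars += sum(len(m["content"]) for m in record["messages"])
    return chars // CHARS_PER_TOKEN + 1


def check_limits(train: list, validation: list) -> list:
    """Return a list of limit violations (empty if everything fits)."""
    problems = []
    if len(train) > MAX_TRAIN_RECORDS:
        problems.append(f"train has {len(train)} records (max {MAX_TRAIN_RECORDS})")
    if len(validation) > MAX_VALIDATION_RECORDS:
        problems.append(
            f"validation has {len(validation)} records (max {MAX_VALIDATION_RECORDS})"
        )
    for name, records in (("train", train), ("validation", validation)):
        for i, record in enumerate(records):
            if estimate_tokens(record) > MAX_CONTEXT_TOKENS:
                problems.append(f"{name}[{i}] may exceed {MAX_CONTEXT_TOKENS} tokens")
    return problems


small = {
    "system": "s",
    "messages": [
        {"role": "user", "content": "hello"},
        {"role": "assistant", "content": "hi"},
    ],
}
print(check_limits([small], [small]))
print(check_limits([small], [small] * 1001))
```

Under this heuristic, a 20,000-character record corresponds to roughly 5,000 tokens, which is well below the context limit.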

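For simplicity, we process the datasets as follows, keeping 1,000 training, 100 validation, and 10 test records, each at most 20,000 characters long: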

train=dp_transform(datapoints_train,1000,20000)

validation=dp_transform(datapoints_valid,100,20000)

test=dp_transform(datapoints_test,10,20000)

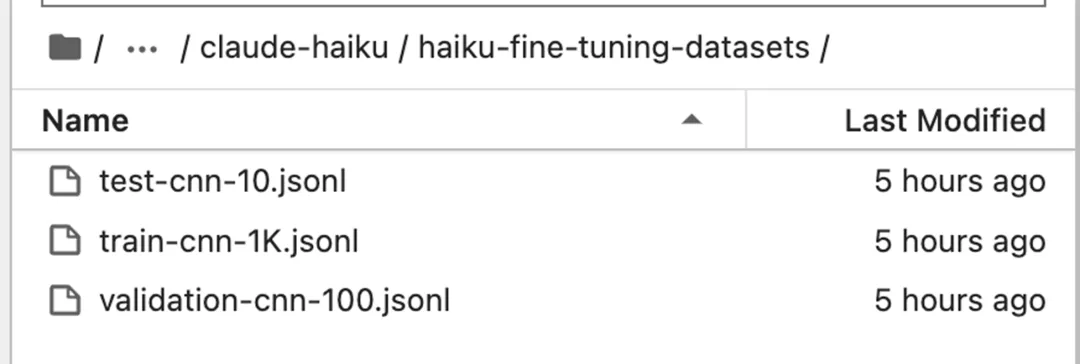

Create a local dataset directory

Save the processed data locally in JSONL format, as shown below:

dataset_folder="haiku-fine-tuning-datasets"

train_file_name="train-cnn-1K.jsonl"

validation_file_name="validation-cnn-100.jsonl"

test_file_name="test-cnn-10.jsonl"

!mkdir haiku-fine-tuning-datasets

abs_path=os.path.abspath(dataset_folder)

jsonl_converter(train,f'{abs_path}/{train_file_name}')

jsonl_converter(validation,f'{abs_path}/{validation_file_name}')

jsonl_converter(test,f'{abs_path}/{test_file_name}')
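After writing the files, it can be worth reading one back to confirm that every line parses as JSON. The sketch below uses only the standard library `json` module instead of `jsonlines` so that it is self-contained. The helper names, the temporary file `sample.jsonl`, and the toy records are hypothetical.

```python
import json
import os
import tempfile


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


def read_jsonl(path):
    """Read a JSONL file back into a list of dictionaries."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


records = [
    {
        "system": "s",
        "messages": [
            {"role": "user", "content": "instruction:\n\ninput:\nfirst"},
            {"role": "assistant", "content": "one"},
        ],
    },
    {
        "system": "s",
        "messages": [
            {"role": "user", "content": "instruction:\n\ninput:\nsecond"},
            {"role": "assistant", "content": "two"},
        ],
    },
]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "sample.jsonl")
    write_jsonl(records, path)
    with open(path, encoding="utf-8") as f:
        line_count = sum(1 for _ in f)
    loaded = read_jsonl(path)

print(line_count, loaded == records)
```

The same `read_jsonl` function can be pointed at the files produced by `jsonl_converter` to check the record counts before uploading.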

Upload the processed datasets to S3

The following code block uploads the training, validation, and test datasets to the S3 bucket.

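The training and validation datasets will be used for the Haiku fine-tuning job, and the test dataset will be used to compare the performance of the fine-tuned Haiku model against the base Haiku model.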

s3_client.upload_file(f'{abs_path}/{train_file_name}', bucket_name, f'haiku-fine-tuning-datasets/train/{train_file_name}')

s3_client.upload_file(f'{abs_path}/{validation_file_name}', bucket_name, f'haiku-fine-tuning-datasets/validation/{validation_file_name}')

s3_client.upload_file(f'{abs_path}/{test_file_name}', bucket_name, f'haiku-fine-tuning-datasets/test/{test_file_name}')

s3_train_uri=f's3://{bucket_name}/haiku-fine-tuning-datasets/train/{train_file_name}'

s3_validation_uri=f's3://{bucket_name}/haiku-fine-tuning-datasets/validation/{validation_file_name}'

s3_test_uri=f's3://{bucket_name}/haiku-fine-tuning-datasets/test/{test_file_name}'
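The object keys and the S3 URIs above follow the same pattern for all three splits, so they can be derived from a single helper to keep the two in sync. This is a minimal sketch: the function name `s3_location` and the bucket name `example-bucket` are hypothetical, while the prefix and file names are the ones used in this post.

```python
def s3_location(bucket: str, prefix: str, split: str, file_name: str):
    """Return the object key and the matching s3:// URI for one dataset file."""
    key = f"{prefix}/{split}/{file_name}"
    return key, f"s3://{bucket}/{key}"


files = {
    "train": "train-cnn-1K.jsonl",
    "validation": "validation-cnn-100.jsonl",
    "test": "test-cnn-10.jsonl",
}

locations = {
    split: s3_location("example-bucket", "haiku-fine-tuning-datasets", split, name)
    for split, name in files.items()
}

for split, (key, uri) in locations.items():
    print(split, key, uri)
```

With the client from earlier in the post, each file could then be uploaded with `s3_client.upload_file(local_path, bucket_name, key)`, where `local_path` is the path of the corresponding local JSONL file.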

Summary

If you are interested in preparing data for fine-tuning the Haiku model, you can refer to the GitHub repository.

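By following the steps outlined in this post, you should now have the resources and fine-tuning datasets needed to fine-tune the Haiku model with Amazon Bedrock for news article summarization. With the IAM role, S3 bucket, and processed datasets in place, you are ready to move on to the fine-tuning itself, which is covered in the next post. Stay tuned.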

Note: The cover image of this post was generated with the SDXL 1.0 model on Amazon Bedrock. The prompt was:

“A developer and a data scientist sitting in a café, laptop without a logo, excitedly discussing model fine-tuning, comic, graphic illustration, comic art, graphic novel art, vibrant, highly detailed, colored, 2d”

Source: Amazon Bedrock 模型微调实践(二):数据准备篇