Paper: https://arxiv.org/abs/2207.12598

MOTIVATION

We are interested in whether classifier guidance can be performed without a classifier.

- Classifier guidance complicates the diffusion model training pipeline

- it requires training an extra classifier

- this classifier must be trained on noisy data so it is generally not possible to plug in a pre-trained classifier.

- Furthermore, classifier guidance mixes a score estimate with a classifier gradient during sampling

- classifier-guided diffusion sampling can be interpreted as attempting to confuse an image classifier with a gradient-based adversarial attack.

- This raises the question of whether classifier guidance is successful at boosting classifier-based metrics such as FID and Inception score (IS) simply because it is adversarial against such classifiers.

CONTRIBUTION

- we present classifier-free guidance, our guidance method which avoids any classifier entirely

- Rather than sampling in the direction of the gradient of an image classifier, classifier-free guidance instead mixes the score estimates of a conditional diffusion model and a jointly trained unconditional diffusion model.

BACKGROUND

Continuous Time Training

$$
\begin{aligned}
q(\mathbf{z}_{\lambda}|\mathbf{x})&=\mathcal{N}(\alpha_{\lambda}\mathbf{x},\sigma_{\lambda}^{2}\mathbf{I}),\quad \text{where } \alpha_{\lambda}^{2}=1/(1+e^{-\lambda}),\ \sigma_{\lambda}^{2}=1-\alpha_{\lambda}^{2}\\
q(\mathbf{z}_{\lambda}|\mathbf{z}_{\lambda'})&=\mathcal{N}((\alpha_{\lambda}/\alpha_{\lambda'})\mathbf{z}_{\lambda'},\sigma_{\lambda|\lambda'}^{2}\mathbf{I}),\quad \text{where } \lambda<\lambda',\ \sigma_{\lambda|\lambda'}^{2}=(1-e^{\lambda-\lambda'})\sigma_{\lambda}^{2}
\end{aligned}
$$

- $\mathbf{x}\sim p(\mathbf{x})$: the initial (data) distribution

- $\mathbf{z}=\{\mathbf{z}_{\lambda}\mid\lambda\in[\lambda_{\min},\lambda_{\max}]\}$: the latents

- $p(\mathbf{z})$ (or $p(\mathbf{z}_{\lambda})$): the marginal of $\mathbf{z}$ (or $\mathbf{z}_{\lambda}$) when $\mathbf{x}\sim p(\mathbf{x})$ and $\mathbf{z}\sim q(\mathbf{z}|\mathbf{x})$

- $\lambda=\log(\alpha_{\lambda}^{2}/\sigma_{\lambda}^{2})$: the log signal-to-noise ratio of $\mathbf{z}_{\lambda}$. The forward process runs in the direction of decreasing $\lambda$.
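The definitions above can be sketched in a few lines of NumPy. This is only an illustration of the formulas, not the authors' code; the function names `alpha_sigma` and `sample_z` are made up for this note.

```python
import numpy as np

def alpha_sigma(lam):
    """Return (alpha_lambda, sigma_lambda) for a log-SNR value lam.

    Uses alpha^2 = 1 / (1 + exp(-lam)) and sigma^2 = 1 - alpha^2,
    where 1 - alpha^2 is written as 1 / (1 + exp(lam)) to avoid
    cancellation when lam is large.
    """
    lam = np.asarray(lam, dtype=np.float64)
    alpha_sq = 1.0 / (1.0 + np.exp(-lam))
    sigma_sq = 1.0 / (1.0 + np.exp(lam))
    return np.sqrt(alpha_sq), np.sqrt(sigma_sq)

def sample_z(x, lam, rng):
    """Draw z_lambda ~ q(z_lambda | x) = N(alpha * x, sigma^2 I).

    Returns the noisy latent and the noise that produced it.
    """
    alpha, sigma = alpha_sigma(lam)
    eps = rng.standard_normal(np.shape(x))
    return alpha * x + sigma * eps, eps

# Example: noise a dummy data point at log-SNR 2.0 (illustrative value).
rng = np.random.default_rng(0)
x = np.ones(4)
z, eps = sample_z(x, lam=2.0, rng=rng)
```

Large $\lambda$ gives $\alpha_\lambda\approx 1$ (almost clean data), and very negative $\lambda$ gives $\sigma_\lambda\approx 1$ (almost pure noise), which matches the forward process running toward decreasing $\lambda$.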

Conditioned on x

- forward process, described in reverse (for $\lambda<\lambda'$): $q(\mathbf{z}_{\lambda'}|\mathbf{z}_{\lambda},\mathbf{x})=\mathcal{N}(\tilde{\boldsymbol{\mu}}_{\lambda'|\lambda}(\mathbf{z}_{\lambda},\mathbf{x}),\tilde{\sigma}_{\lambda'|\lambda}^{2}\mathbf{I})$, where

- $\tilde{\boldsymbol{\mu}}_{\lambda'|\lambda}(\mathbf{z}_{\lambda},\mathbf{x})=e^{\lambda-\lambda'}(\alpha_{\lambda'}/\alpha_{\lambda})\mathbf{z}_{\lambda}+(1-e^{\lambda-\lambda'})\alpha_{\lambda'}\mathbf{x}$

- $\tilde{\sigma}_{\lambda'|\lambda}^{2}=(1-e^{\lambda-\lambda'})\sigma_{\lambda'}^{2}$

- reverse process (generative model)

- Start from $p_{\theta}(\mathbf{z}_{\lambda_{\min}})=\mathcal{N}(\mathbf{0},\mathbf{I})$

- $p_\theta(\mathbf{z}_{\lambda'}|\mathbf{z}_\lambda)=\mathcal{N}(\tilde{\boldsymbol{\mu}}_{\lambda'|\lambda}(\mathbf{z}_\lambda,\mathbf{x}_\theta(\mathbf{z}_\lambda)),(\tilde{\sigma}_{\lambda'|\lambda}^2)^{1-v}(\sigma_{\lambda|\lambda'}^2)^v)$

- During sampling, we apply this transition along an increasing sequence $\lambda_{\min}=\lambda_1<\cdots<\lambda_T=\lambda_{\max}$ for $T$ timesteps.

- We parameterize $\mathbf{x}_\theta$ in terms of $\boldsymbol{\epsilon}$-prediction: $\mathbf{x}_\theta(\mathbf{z}_\lambda)=(\mathbf{z}_\lambda-\sigma_\lambda\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda))/\alpha_\lambda$

- If the model $\mathbf{x}_\theta$ is correct, then as $T\to\infty$ we obtain samples from an SDE whose sample paths are distributed as $p(\mathbf{z})$ (Song et al., 2021b), and we use $p_\theta(\mathbf{z})$ to denote the continuous-time model distribution.

- The variance

- The variance is a log-space interpolation of $\tilde{\sigma}_{\lambda'|\lambda}^{2}$ and $\sigma_{\lambda|\lambda'}^{2}$.

- We found it effective to use a constant hyperparameter $v$ rather than a learned $\mathbf{z}_\lambda$-dependent $v$.

- Note that the variances simplify to $\tilde{\sigma}_{\lambda'|\lambda}^{2}$ as $\lambda'\to\lambda$, so $v$ has an effect only when sampling with non-infinitesimal timesteps. Since timesteps in practice are never infinitesimal, $v$ does matter for the variance used at each step.

- The mean

- The reverse process mean comes from an estimate $\mathbf{x}_\theta(\mathbf{z}_\lambda)\approx\mathbf{x}$ plugged into $q(\mathbf{z}_{\lambda'}|\mathbf{z}_\lambda,\mathbf{x})$. ($\mathbf{x}_\theta$ also takes $\lambda$ as input, which is omitted from the notation for simplicity.)

- We train on the objective $\mathbb{E}_{\boldsymbol{\epsilon},\lambda}\big[\|\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)-\boldsymbol{\epsilon}\|_2^2\big]$, where

- $\boldsymbol{\epsilon}\sim\mathcal{N}(\mathbf{0},\mathbf{I})$

- $\mathbf{z}_{\lambda}=\alpha_{\lambda}\mathbf{x}+\sigma_{\lambda}\boldsymbol{\epsilon}$

- $\lambda$ is drawn from a distribution $p(\lambda)$ over $[\lambda_{\min},\lambda_{\max}]$

- When $p(\lambda)$ is uniform, this objective (denoising score matching over multiple noise scales) is proportional to the variational lower bound on the marginal log likelihood of the latent variable model $\int p_{\theta}(\mathbf{x}|\mathbf{z})p_{\theta}(\mathbf{z})d\mathbf{z}$, ignoring the term for the unspecified decoder $p_{\theta}(\mathbf{x}|\mathbf{z})$ and for the prior at $\mathbf{z}_{\lambda_{\min}}$.

- If $p(\lambda)$ is not uniform, the objective can be interpreted as a weighted variational lower bound whose weighting can be tuned for sample quality.
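As a rough sketch of one reverse transition $\mathbf{z}_\lambda\to\mathbf{z}_{\lambda'}$ (with $\lambda<\lambda'$), the mean and the interpolated variance described above could be computed as follows. The helper names are hypothetical, and `eps_pred` stands in for the network output $\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)$, which is not modelled here.

```python
import numpy as np

def alpha_sigma(lam):
    """alpha_lambda and sigma_lambda from the log-SNR lam."""
    alpha_sq = 1.0 / (1.0 + np.exp(-lam))
    sigma_sq = 1.0 / (1.0 + np.exp(lam))
    return np.sqrt(alpha_sq), np.sqrt(sigma_sq)

def reverse_step(z, lam, lam_next, eps_pred, v, rng):
    """One ancestral sampling step from z_lam to z_lam_next (lam < lam_next).

    eps_pred is assumed to be the model's noise prediction at (z, lam).
    Returns (sample, mean, variance).
    """
    alpha, sigma = alpha_sigma(lam)
    alpha_n, sigma_n = alpha_sigma(lam_next)

    # epsilon-prediction converted to an estimate of x
    x_pred = (z - sigma * eps_pred) / alpha

    r = np.exp(lam - lam_next)  # e^{lam - lam'}, in (0, 1)
    mean = r * (alpha_n / alpha) * z + (1.0 - r) * alpha_n * x_pred

    var_tilde = (1.0 - r) * sigma_n**2  # sigma~^2_{lam'|lam}
    var_fwd = (1.0 - r) * sigma**2      # sigma^2_{lam|lam'}
    var = var_tilde ** (1.0 - v) * var_fwd ** v  # log-space interpolation

    noise = rng.standard_normal(np.shape(z))
    return mean + np.sqrt(var) * noise, mean, var
```

With $v=0$ the step uses $\tilde{\sigma}^2_{\lambda'|\lambda}$ and with $v=1$ it uses $\sigma^2_{\lambda|\lambda'}$; values in between interpolate geometrically.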

Choice of $p(\lambda)$

- We sample $\lambda$ via $\lambda=-2\log\tan(au+b)$ for uniformly distributed $u\in[0,1]$, where $b=\arctan(e^{-\lambda_{\max}/2})$ and $a=\arctan(e^{-\lambda_{\min}/2})-b$.

- This represents a hyperbolic secant distribution modified to be supported on the bounded interval $[\lambda_{\min},\lambda_{\max}]$.

- For finite timestep generation, we use $\lambda$ values corresponding to uniformly spaced $u\in[0,1]$, and the final generated sample is $\mathbf{x}_\theta(\mathbf{z}_{\lambda_{\max}})$.

- The loss for $\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)$ is denoising score matching for all $\lambda$.

- The score $\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)$ learned by our model estimates the gradient of the log-density of the distribution of our noisy data $\mathbf{z}_\lambda$:

- $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda})\approx-\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}\log p(\mathbf{z}_{\lambda})$

- Because we use unconstrained neural networks to define $\boldsymbol{\epsilon}_{\theta}$, there need not exist any scalar potential whose gradient is $\boldsymbol{\epsilon}_{\theta}$.

- Sampling from the learned diffusion model resembles using Langevin diffusion to sample from a sequence of distributions $p(\mathbf{z}_\lambda)$ that converges to the conditional distribution $p(\mathbf{x})$ of the original data $\mathbf{x}$.
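The $\lambda$ sampling rule $\lambda=-2\log\tan(au+b)$ is easy to check numerically. The sketch below assumes $\lambda_{\min}=-20$ and $\lambda_{\max}=20$ purely as illustrative values, and the function names are invented for this note.

```python
import numpy as np

# Illustrative bounds on the log-SNR (an assumption of this sketch).
LAMBDA_MIN, LAMBDA_MAX = -20.0, 20.0

def lambda_from_u(u, lam_min=LAMBDA_MIN, lam_max=LAMBDA_MAX):
    """Map u in [0, 1] to lambda = -2 log tan(a u + b)."""
    b = np.arctan(np.exp(-lam_max / 2.0))
    a = np.arctan(np.exp(-lam_min / 2.0)) - b
    return -2.0 * np.log(np.tan(a * np.asarray(u, dtype=np.float64) + b))

def sample_lambda(n, rng):
    """Training-time draw: lambda for n uniformly distributed u."""
    return lambda_from_u(rng.uniform(size=n))

def sampling_schedule(num_steps):
    """Sampling-time schedule from uniformly spaced u.

    u = 0 maps to lambda_max and u = 1 maps to lambda_min, so u runs
    from 1 down to 0 to obtain an increasing lambda sequence.
    """
    return lambda_from_u(np.linspace(1.0, 0.0, num_steps))

lams = sampling_schedule(8)  # lambda_min = lams[0] < ... < lams[-1] = lambda_max
```

The endpoints follow directly from the definitions of $a$ and $b$: at $u=0$, $\tan(b)=e^{-\lambda_{\max}/2}$, and at $u=1$, $\tan(a+b)=e^{-\lambda_{\min}/2}$.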

METHODS

CLASSIFIER GUIDANCE

In classifier guidance, the diffusion score $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})\approx-\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}\log p(\mathbf{z}_{\lambda}|\mathbf{c})$ is modified to include the gradient of the log likelihood of an auxiliary classifier model $p_\theta(\mathbf{c}|\mathbf{z}_\lambda)$ as follows:

$$
\tilde{\boldsymbol{\epsilon}}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})=\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})-w\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}\log p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})\approx-\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}[\log p(\mathbf{z}_{\lambda}|\mathbf{c})+w\log p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})]
$$

- $w$: a parameter that controls the strength of the classifier guidance

This modified score $\tilde{\boldsymbol{\epsilon}}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})$ is then used in place of $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})$ when sampling from the diffusion model, resulting in approximate samples from the distribution:

$$
\tilde{p}_{\theta}(\mathbf{z}_{\lambda}|\mathbf{c})\propto p_{\theta}(\mathbf{z}_{\lambda}|\mathbf{c})\,p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})^{w}
$$

As guidance strength is increased, each conditional places probability mass farther away from other classes and towards directions of high confidence given by logistic regression, and most of the mass becomes concentrated in smaller regions. This behavior can be seen as a simplistic manifestation of the Inception score boost and sample diversity decrease that occur when classifier guidance strength is increased in an ImageNet model.

Applying classifier guidance with weight $w+1$ to an unconditional model would theoretically lead to the same result as applying classifier guidance with weight $w$ to a conditional model:

- because, by Bayes' rule, $p_{\theta}(\mathbf{z}_{\lambda}|\mathbf{c})\,p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})^{w}\propto p_{\theta}(\mathbf{z}_{\lambda})\,p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})^{w+1}$;

- or in terms of scores:

$$
\begin{aligned}
\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda})-(w+1)\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}\log p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})&\approx-\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}[\log p(\mathbf{z}_{\lambda})+(w+1)\log p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})]\\
&=-\sigma_{\lambda}\nabla_{\mathbf{z}_{\lambda}}[\log p(\mathbf{z}_{\lambda}|\mathbf{c})+w\log p_{\theta}(\mathbf{c}|\mathbf{z}_{\lambda})]
\end{aligned}
$$
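The equivalence between weight $w+1$ on an unconditional model and weight $w$ on a conditional model can be checked numerically on a toy problem where every density is known exactly. The setup below is an assumption of this sketch (a 1D mixture of three unit-variance Gaussians with a uniform class prior, gradients taken by finite differences) and works with $\nabla\log p$ directly, i.e. with the factor $-\sigma_\lambda$ dropped.

```python
import numpy as np

# Hypothetical class means; each class is N(mean, 1), prior is uniform.
MEANS = np.array([-2.0, 0.0, 2.0])

def log_p_z_given_c(z, c):
    return -0.5 * (z - MEANS[c]) ** 2 - 0.5 * np.log(2.0 * np.pi)

def log_p_z(z):
    logs = np.array([log_p_z_given_c(z, k) for k in range(len(MEANS))])
    return np.log(np.mean(np.exp(logs)))  # uniform prior over classes

def log_p_c_given_z(z, c):
    # Bayes' rule: log p(c|z) = log p(z|c) + log p(c) - log p(z)
    return log_p_z_given_c(z, c) - np.log(len(MEANS)) - log_p_z(z)

def grad(f, z, h=1e-5):
    """Central finite-difference derivative of a scalar function."""
    return (f(z + h) - f(z - h)) / (2.0 * h)

def guided_from_conditional(z, c, w):
    """grad log p(z|c) + w * grad log p(c|z)"""
    return grad(lambda t: log_p_z_given_c(t, c), z) + w * grad(
        lambda t: log_p_c_given_z(t, c), z)

def guided_from_unconditional(z, c, w):
    """grad log p(z) + (w + 1) * grad log p(c|z)"""
    return grad(log_p_z, z) + (w + 1.0) * grad(
        lambda t: log_p_c_given_z(t, c), z)

lhs = guided_from_conditional(0.7, c=2, w=3.0)
rhs = guided_from_unconditional(0.7, c=2, w=3.0)
```

Here `lhs` and `rhs` agree up to finite-difference error. With a learned classifier and learned scores the identity only holds approximately.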

CLASSIFIER-FREE GUIDANCE

- Jointly training the unconditional and conditional models: instead of training a separate classifier model, we choose to train an unconditional denoising diffusion model $p_\theta(\mathbf{z})$ parameterized through a score estimator $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda})$ together with the conditional model $p_\theta(\mathbf{z}|\mathbf{c})$ parameterized through $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})$.

- First, we use a single neural network to parameterize both models:

- unconditional model: $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda})=\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c}=\varnothing)$

- conditional model: $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})$

- We jointly train the unconditional and conditional models simply by randomly setting $\mathbf{c}$ to the unconditional class identifier $\varnothing$ with some probability $p_{\text{uncond}}$, set as a hyperparameter.

- Then we perform sampling using the following linear combination of the conditional and unconditional score estimates:

$$
\tilde{\boldsymbol{\epsilon}}_\theta(\mathbf{z}_\lambda,\mathbf{c})=(1+w)\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda,\mathbf{c})-w\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)
$$

- This equation has no classifier gradient present, so taking a step in the $\tilde{\boldsymbol{\epsilon}}_{\theta}$ direction cannot be interpreted as a gradient-based adversarial attack on an image classifier.

- Furthermore, $\tilde{\boldsymbol{\epsilon}}_{\theta}$ is constructed from score estimates that are non-conservative vector fields due to the use of unconstrained neural networks,

- so there in general cannot exist a scalar potential such as a classifier log likelihood for which $\tilde{\boldsymbol{\epsilon}}_{\theta}$ is the classifier-guided score.

- Classifier-free guidance vs. classifier guidance

- Classifier-free guidance is inspired by the gradient of an implicit classifier $p^{i}(\mathbf{c}|\mathbf{z}_{\lambda})\propto p(\mathbf{z}_{\lambda}|\mathbf{c})/p(\mathbf{z}_{\lambda})$.

- If we had access to the exact scores $\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda,\mathbf{c})$ (of $p(\mathbf{z}_\lambda|\mathbf{c})$) and $\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda)$ (of $p(\mathbf{z}_\lambda)$),

- then the gradient of this implicit classifier would be $\nabla_{\mathbf{z}_\lambda}\log p^i(\mathbf{c}|\mathbf{z}_\lambda)=-\frac{1}{\sigma_\lambda}[\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda,\mathbf{c})-\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda)]$,

- and classifier guidance with this implicit classifier would modify the score estimate into $\tilde{\boldsymbol{\epsilon}}^*(\mathbf{z}_\lambda,\mathbf{c})=(1+w)\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda,\mathbf{c})-w\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda)$.

- This equation resembles the classifier-free guidance combination $\tilde{\boldsymbol{\epsilon}}_\theta(\mathbf{z}_\lambda,\mathbf{c})=(1+w)\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda,\mathbf{c})-w\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)$, but the two differ:

- $\tilde{\boldsymbol{\epsilon}}^*(\mathbf{z}_\lambda,\mathbf{c})$ (classifier guidance with the implicit classifier) is constructed from the scaled classifier gradient $\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda,\mathbf{c})-\boldsymbol{\epsilon}^*(\mathbf{z}_\lambda)$.

- $\tilde{\boldsymbol{\epsilon}}_\theta(\mathbf{z}_\lambda,\mathbf{c})$ (classifier-free guidance) is constructed from the estimate $\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda},\mathbf{c})-\boldsymbol{\epsilon}_{\theta}(\mathbf{z}_{\lambda})$, and this expression is not in general the (scaled) gradient of any classifier, again because the score estimates are the outputs of unconstrained neural networks.

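The two ingredients of classifier-free guidance, label dropout during training and the linear combination of score estimates during sampling, can be sketched as below. Everything here is a minimal illustration: `NULL_CLASS`, `drop_labels`, `cfg_eps` and the stand-in `toy_eps_model` are hypothetical names, and a real model would be a neural network taking $(\mathbf{z}_\lambda,\lambda,\mathbf{c})$.

```python
import numpy as np

NULL_CLASS = -1  # hypothetical id standing in for the null label

def drop_labels(labels, p_uncond, rng):
    """Training: replace each label by NULL_CLASS with probability p_uncond."""
    labels = np.asarray(labels)
    mask = rng.uniform(size=labels.shape) < p_uncond
    return np.where(mask, NULL_CLASS, labels)

def cfg_eps(eps_model, z, lam, c, w):
    """Sampling: (1 + w) * eps(z, c) - w * eps(z), using one shared model."""
    eps_cond = eps_model(z, lam, c)
    eps_uncond = eps_model(z, lam, NULL_CLASS)
    return (1.0 + w) * eps_cond - w * eps_uncond

def toy_eps_model(z, lam, c):
    """Stand-in for a trained network, only so the example runs."""
    shift = 0.0 if c == NULL_CLASS else float(c)
    return z - shift

rng = np.random.default_rng(0)
train_labels = drop_labels(np.arange(10), p_uncond=0.1, rng=rng)
guided = cfg_eps(toy_eps_model, np.array([1.0, 2.0]), lam=0.0, c=3, w=2.0)
```

Setting $w=0$ recovers the plain conditional estimate, and larger $w$ moves the estimate further along the direction $\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda,\mathbf{c})-\boldsymbol{\epsilon}_\theta(\mathbf{z}_\lambda)$. Each guided step costs two forward passes of the same network.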

EXPERIMENTS

-

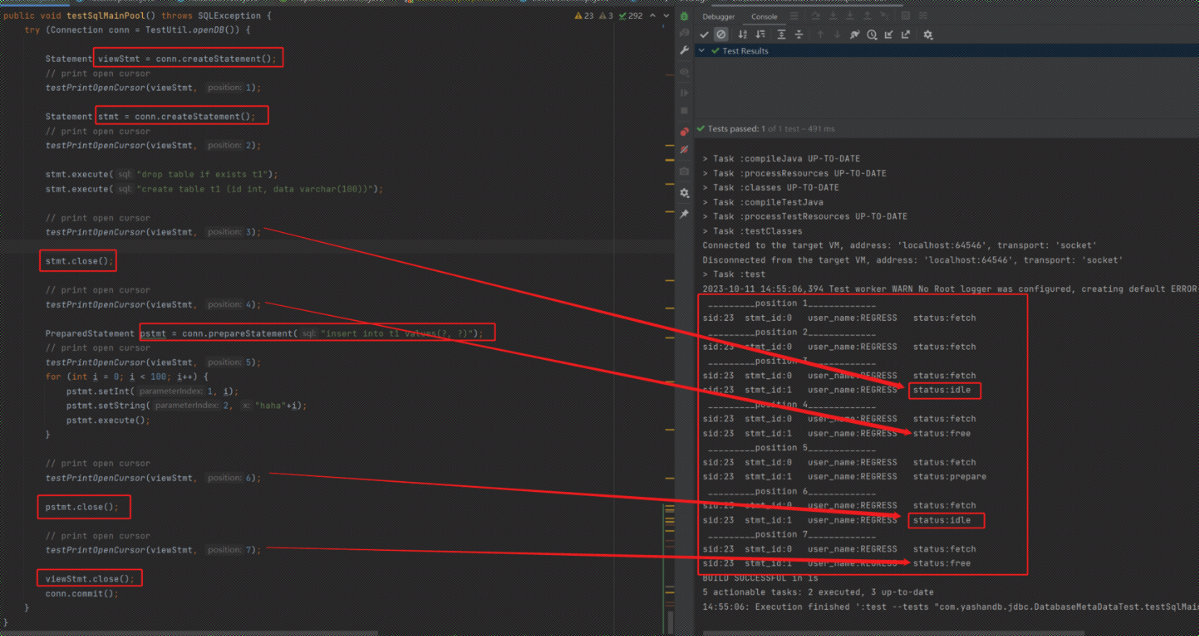

实验目的:实验的主要目的是证明无需分类器引导能够实现与分类器引导相似的 FID(Fréchet Inception Distance)和 IS(Inception Score)之间的权衡,并且理解无需分类器引导的行为。

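- Setup: the authors train diffusion models on downsampled class-conditional ImageNet. This has been the standard setting for studying the FID/IS trade-off since the BigGAN paper.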

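- Model architecture and hyperparameters: for a fair comparison with prior work, the authors use the same architecture and hyperparameters as the guided diffusion models of Dhariwal & Nichol (2021), even though those settings were tuned for classifier guidance and may be suboptimal for classifier-free guidance.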

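- Classifier-free guidance results: the authors present results showing that pure generative diffusion models can synthesize extremely high-fidelity samples, as is possible with other types of generative models.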

- Results: classifier-free guidance achieves a similar FID/IS trade-off, and the authors' models are competitive with prior work on sample quality metrics, in some cases outperforming it.

- Varying the guidance strength: the authors vary the guidance strength $w$ to show its effect on sample quality for 64x64 and 128x128 class-conditional ImageNet generation. A small guidance strength gives the best FID, while stronger guidance gives the best IS.

- Varying the unconditional training probability: the authors study how the probability $p_{\text{uncond}}$ of training unconditionally affects sample quality. Smaller values of $p_{\text{uncond}}$ (such as 0.1 or 0.2) perform better than $p_{\text{uncond}}=0.5$ across the entire IS/FID frontier.

- Varying the number of sampling steps: the authors also study the effect of the number of sampling steps $T$ on sample quality for the 128x128 ImageNet model. Increasing $T$ improves sample quality, but for this model $T=256$ gives a good balance between sample quality and sampling speed.