0. Preparation

In the video, I said the exam is 3 hours. With the latest version of the exam, it is now only 2 hours. The contents of this course have been updated with the changes required for the latest version of the exam.

Below are some references:

Certified Kubernetes Administrator: https://www.cncf.io/certification/cka/

Exam Curriculum (Topics): https://github.com/cncf/curriculum

Candidate Handbook: https://www.cncf.io/certification/candidate-handbook

Exam Tips: http://training.linuxfoundation.org/go//Important-Tips-CKA-CKAD

Use the code DEVOPS15 while registering for the CKA or CKAD exams at Linux Foundation to get a 15% discount.

https://www.zhaohuabing.com/post/2022-02-08-how-to-prepare-cka/

1. Core concepts

https://github.com/kodekloudhub/certified-kubernetes-administrator-course


1. Master components

etcd

scheduler

controller-manager: node-controller, replication-controller, ...

apiserver

etcd

put key value

get key

apiserver

Responsibilities:

1. Authenticate the user

2. Validate the request

3. Validate the data

4. Update etcd

5. Scheduler

6. kubelet

cat /etc/kubernetes/manifests/kube-apiserver.yaml

cat /etc/systemd/system/kube-apiserver.service

ps -aux | grep kube-apiserver

controller-manager

1. watch status

2. remediate situation

common controllers:

node

namespace

deployment

rc

rs

sa

pv

pvc

job

cronjob

stateful-set

daemonset

All controllers:

attachdetach, bootstrapsigner, clusterrole-aggregation, cronjob, csrapproving, csrcleaner, csrsigning, daemonset, deployment, disruption, endpoint, garbagecollector, horizontalpodautoscaling, job, namespace, nodeipam, nodelifecycle, persistentvolume-binder, persistentvolume-expander, podgc, pv-protection, pvc-protection, replicaset, replicationcontroller, resourcequota, root-ca-cert-publisher, route, service, serviceaccount, serviceaccount-token, statefulset, tokencleaner, ttl

cat /etc/kubernetes/manifests/kube-controller-manager.yaml

cat /etc/systemd/system/kube-controller-manager.service

ps -aux | grep kube-controller-manager

scheduler

1. filter node

2. rank node

3. Resource requests and limits

4. Taints and tolerations

5. Node selectors and affinity

cat /etc/kubernetes/manifests/kube-scheduler.yaml

ps -aux | grep kube-scheduler
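The two scheduler steps listed above (filter nodes, then rank them) can be sketched in Python. This is only an illustration under simple assumptions: the `Node` class, the `free_cpu` field and the "most CPU left" ranking rule are hypothetical and are not the real kube-scheduler implementation.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float  # unallocated CPU cores (hypothetical field)


def pick_node(nodes, cpu_request):
    """Filter out nodes that cannot fit the request, then rank the rest."""
    # Step 1 (filter): keep only nodes with enough free CPU.
    feasible = [n for n in nodes if n.free_cpu >= cpu_request]
    if not feasible:
        return None  # nothing fits, the pod would stay Pending
    # Step 2 (rank): prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.free_cpu - cpu_request)
    return best.name


nodes = [Node("node01", 4), Node("node02", 12), Node("node03", 16)]
print(pick_node(nodes, cpu_request=10))  # node03
```

With a request of 10 CPUs, node01 is filtered out and node03 ranks first because it keeps the most free CPU.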

2. Worker components

kubelet

kube-proxy

kubelet

1. Register the node

2. Create pods and manage their lifecycle

kube-proxy

Load balancing

3. pod

A node can run multiple pods.

A pod can contain multiple containers.

kubectl run nginx --image nginx

kubectl get pods

4. yaml

apiVersion:
kind:
metadata:
  name: xxx
  labels:
    app: xxx
    type: yyy
spec:
  containers:
    - name: nginx-container   # first item
      image: nginx

kind          version
Pod           v1
Service       v1
ReplicaSet    apps/v1
Deployment    apps/v1

kubectl get pods

kubectl describe pod my-app

5. Online lab environment

Link: https://kodekloud.com/courses/labs-certified-kubernetes-administrator-with-practice-tests/

Register with your email first:

https://kodekloud.com/courses/labs-certified-kubernetes-administrator-with-practice-tests/?utm_source=udemy&utm_medium=labs&utm_campaign=kubernetes

Then click "enroll in this course" on the right and apply the coupon code udemystudent151113.

Practice: https://kodekloud.com/topic/practice-test-pods-2/

6. rs

1. Basic concepts

# rc.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: myapp-rc
  labels:
    app: myapp
    type: frontend
spec:
  template:
    metadata:
      name: myapp-rc
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container   # first item
          image: nginx
  replicas: 3

kubectl create -f rc.yaml

kubectl get rc

kubectl get pods

# rs.yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp-rs
  labels:
    app: myapp
    type: frontend
spec:
  template:
    metadata:
      name: myapp-rs
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container   # first item
          image: nginx
  replicas: 3
  selector:
    matchLabels:
      type: frontend

# label & selector

# scale
replicas: 6

kubectl replace -f rs-scale.yaml

kubectl scale --replicas=6 -f rs-scale.yaml

kubectl scale --replicas=6 replicaset myapp-rs   # type name
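What a ReplicaSet does when `replicas` changes can be pictured as a small reconcile step: compare the desired count with the pods that exist and create or delete the difference. The sketch below is a simplification with hypothetical names (`reconcile`, `pod-N`), not the controller's real code.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move the actual state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones from the template.
        return [("create", f"pod-{i}") for i in range(diff)]
    if diff < 0:
        # Too many pods: delete the surplus (here simply the last ones listed).
        return [("delete", name) for name in running_pods[diff:]]
    return []  # already converged


print(reconcile(6, ["a", "b", "c"]))  # three create actions
print(reconcile(2, ["a", "b", "c"]))  # [('delete', 'c')]
```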

2. Online lab

3. solution

The task is to delete one of the four ReplicaSets. Do not delete the busybox rs, otherwise the later steps cannot be done.

Fix new-replica-set:

kubectl edit rs xxx   # set the image to busybox, then delete the existing pods

kubectl delete pods --all

Now scale the ReplicaSet up to 5 PODs.

kubectl scale replicaset --replicas=5 xxxrs

Now scale the ReplicaSet down to 2 PODs.

kubectl scale replicaset --replicas=2 xxxrs

kubectl edit rs xxxrs

7. deployment

1. Basic concepts

# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-rs
  labels:
    app: myapp
    type: frontend
spec:
  template:
    metadata:
      name: myapp-rs
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container   # first item
          image: nginx
  replicas: 3
  selector:
    matchLabels:
      type: frontend

kubectl get all

kubectl get deploy

kubectl get rs

kubectl get pods

Create an NGINX Pod:

kubectl run nginx --image=nginx

Generate a Pod manifest YAML file (-o yaml) without creating it (--dry-run):

kubectl run nginx --image=nginx --dry-run=client -o yaml

Create a deployment:

kubectl create deployment nginx --image=nginx

Generate a Deployment YAML file (-o yaml) without creating it (--dry-run):

kubectl create deployment nginx --image=nginx --dry-run=client -o yaml

Generate a Deployment YAML file (-o yaml) without creating it (--dry-run), with 4 replicas (--replicas=4):

kubectl create deployment --image=nginx nginx --dry-run=client -o yaml > nginx-deployment.yaml

Save it to a file, make necessary changes to the file (for example, adding more replicas) and then create the deployment.

kubectl create -f nginx-deployment.yaml

OR

In k8s version 1.19+, we can specify the --replicas option to create a deployment with 4 replicas.

kubectl create deployment nginx --image=nginx --replicas=4 --dry-run=client -o yaml > nginx-deployment.yaml

2. Online lab

3. solution

Last question:

kubectl create deployment httpd-frontend --image=httpd:2.4-alpine

kubectl scale --replicas=3 deployment httpd-frontend

Alternatively, copy an existing yaml file and adjust it.

8. namespace

1. Basic concepts

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-rs
  namespace: dev
  labels:
    app: myapp
    type: frontend
spec:
  template:
    metadata:
      name: myapp-rs
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container   # first item
          image: nginx
  replicas: 3
  selector:
    matchLabels:
      type: frontend

# namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: dev

kubectl create namespace dev

kubectl -n dev get pods

kubectl get pods -n dev

kubectl get pods --namespace=dev

kubectl config set-context $(kubectl config current-context) --namespace=dev

kubectl get pods --all-namespaces   # or -A

# resourceQuota
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota
  namespace: dev
spec:
  hard:
    pods: "10"
    requests.cpu: "4"
    requests.memory: 5Gi
    limits.cpu: "10"
    limits.memory: 10Gi

2. Online lab

3. solution

kubectl get ns --no-headers | wc -l

kubectl -n research get pods --no-headers

kubectl run redis --image=redis --dry-run=client -o yaml > ns.yaml

kubectl get pods --all-namespaces | grep blue

ping www.baidu.com 80

# marketing (service in the same namespace)
db-service:3306

# dev (service in another namespace)
db-service.dev.svc.cluster.local
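The DNS name above follows the pattern service.namespace.svc.cluster-domain. A minimal Python sketch that builds it; the helper name `service_fqdn` is hypothetical and `cluster.local` is assumed as the cluster domain, as in the note above.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build the in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_fqdn("db-service", "dev"))  # db-service.dev.svc.cluster.local
```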

9. service

1. Basics

ClusterIP

NodePort

LoadBalancer

ExternalName

# nodePort.yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  type: NodePort
  ports:
    - targetPort: 80
      port: 80
      nodePort: 30008
  selector:   # the selector picks pods by label; pods with the same labels are load-balanced
    app: myapp
    type: frontend

# clusterIp
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  type: ClusterIP
  ports:
    - targetPort: 80
      port: 80
  selector:   # the selector picks pods by label; pods with the same labels are load-balanced
    app: myapp
    type: frontend

kubectl get svc

# loadBalance.yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  type: LoadBalancer
  ports:
    - targetPort: 80
      port: 80
      nodePort: 30008

http://example-1.com

http://example-2.com

cloud

2. Online lab

3. solution

kubectl expose deployment simple-webapp-deployment --name=webapp-service --target-port=8080 --type=NodePort --port=8080 --dry-run=client -o yaml > svc.yaml

10. Declarative and imperative

1. Basics

Imperative: a single step changes the cluster.

kubectl run/expose/edit/scale/set image/create/replace/delete -f xxx.yaml

kubectl replace --force -f xx.yaml

Declarative: the core idea is that the apply command uses the patch API, which can be thought of as a kind of update. It first computes the patch request and then sends it. The patch covers only the specified fields of the object, not the whole object.

kubectl apply -f xx.yaml

live object configuration

annotations:
  kubectl.kubernetes.io/last-applied-configuration: {"apiversion": ...}

2. Online lab

Use --help often.

Create a pod called httpd using the image httpd:alpine in the default namespace. Next, create a service of type ClusterIP by the same name (httpd). The target port for the service should be 80. Try to do this with as few steps as possible.

'httpd' pod created with the correct image?

'httpd' service is of type 'ClusterIP'?

'httpd' service uses correct target port 80?

'httpd' service exposes the 'httpd' pod?

3. solution

kubectl edit pod httpd   # labels run: httpd, app: httpd

kubectl run httpd --image=httpd:alpine --port 80 --expose   # fastest

2. scheduler

1. manual schedule

Bind a pod to a specific node (also called fixed-node scheduling).

# Option 1
apiVersion: v1
kind: Binding
metadata:
  name: nginx
target:
  apiVersion: v1
  kind: Node
  name: node02

# Option 2
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  nodeName: node01
  containers:
    - name: nginx
      image: nginx

Online lab

Wait for the result to appear and verify it yourself before clicking check.

solution

2. label&selector

label & selector

selector:
  matchLabels:
    app: monitoring-agent
template:
  metadata:
    labels:
      app: monitoring-agent
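How `matchLabels` selects pods can be shown with a short Python sketch: a pod matches when every key/value pair of the selector appears in the pod's labels. The pod names and the extra labels here are made up for illustration.

```python
def matches(match_labels, pod_labels):
    """True if every selector label is present on the pod with the same value."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


def select(match_labels, pods):
    """Return the names of the pods that satisfy the selector."""
    return [name for name, labels in pods.items() if matches(match_labels, labels)]


pods = {
    "agent-1": {"app": "monitoring-agent", "env": "prod"},
    "web-1": {"app": "web", "env": "prod"},
}
print(select({"app": "monitoring-agent"}, pods))  # ['agent-1']
```

Extra labels on the pod (such as `env`) do not prevent a match; only the labels named in the selector are compared.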

Online lab

kubectl get all --show-labels | grep "env=prod"

kubectl get pods -l env=dev --no-headers | wc -l

solution

3. taints & tolerations

# taint a node
kubectl taint nodes node-name key=value:taint-effect   # taint-effect: NoSchedule / PreferNoSchedule / NoExecute

# tolerations on a pod
kubectl taint nodes node1 app=blue:NoSchedule

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx
  tolerations:
    - key: app
      operator: "Equal"
      value: blue
      effect: NoSchedule

# taint effects
NoSchedule

PreferNoSchedule

NoExecute

The master node has a NoSchedule taint by default:

kubectl describe node master | grep -i taint
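The matching rule between a toleration and a taint can be sketched in Python. This is a simplified model (it ignores details such as an empty key with `Exists`), and the function names are hypothetical; it only shows why the `app=blue:NoSchedule` taint above needs the toleration shown in the pod spec.

```python
def tolerates(toleration, taint):
    """Simplified check: does one toleration cover one taint?"""
    # An effect on the toleration must equal the taint's effect (no effect = any).
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("key") != taint["key"]:
        return False
    # "Exists" ignores the value; the default operator "Equal" compares it.
    if toleration.get("operator", "Equal") == "Exists":
        return True
    return toleration.get("value") == taint.get("value")


def can_schedule(tolerations, taints):
    """A pod fits only if every hard taint on the node is tolerated."""
    hard = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


node1_taints = [{"key": "app", "value": "blue", "effect": "NoSchedule"}]
blue_toleration = [{"key": "app", "operator": "Equal", "value": "blue", "effect": "NoSchedule"}]
print(can_schedule(blue_toleration, node1_taints))  # True
print(can_schedule([], node1_taints))               # False
```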

Online lab

kubectl get nodes

kubectl taint node node01 spray=mortein:NoSchedule

kubectl run bee --image=nginx --dry-run=client -o yaml > new.yaml

tolerations:
  - key: app
    operator: "Equal"
    value: blue
    effect: NoSchedule

kubectl explain pod.spec

kubectl explain pod --recursive | less

kubectl explain pod --recursive | grep -A5 tolerations

10. Remove the taint on controlplane, which currently has the taint effect of NoSchedule.

kubectl taint node controlplane node-role.kubernetes.io/master:NoSchedule-

solution

4. node selector

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx
  nodeSelector:
    size: large

kubectl label node node_name key=value

5. node affinity

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx
  affinity:
    nodeAffinity:
      # other types: preferredDuringSchedulingIgnoredDuringExecution,
      # requiredDuringSchedulingRequiredDuringExecution
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: size
                operator: In   # NotIn / In / Exists (Exists takes no values)
                values:
                  - Large
                  - Medium

Online lab

Which nodes can the pods for the blue deployment be placed on?

Make sure to check taints on both nodes!

answer:

kubectl describe node controlplane | grep -i taint

Neither node has taints.

Set Node Affinity to the deployment to place the pods on node01 only.

answer:

kubectl create deployment blue --image=nginx --replicas=3 --dry-run=client -o yaml > deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: blue
  name: blue
spec:
  replicas: 3
  selector:
    matchLabels:
      app: blue
  template:
    metadata:
      labels:
        app: blue
    spec:
      containers:
        - image: nginx
          name: nginx
          resources: {}
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: color
                    operator: In
                    values:
                      - blue

kubectl get deployments.apps blue -o yaml > blue.yaml

vim blue.yaml

6. resource limits

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: blue
  name: blue
spec:
  containers:
    - image: nginx
      name: nginx
      resources:
        requests:
          memory: "1Gi"   # or e.g. 256Mi
          cpu: 1          # or e.g. 100m (0.1 CPU)
        limits:
          memory: "2Gi"
          cpu: 2
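The units used in requests and limits (`100m`, `256Mi`, `1Gi`) can be made concrete with a small parser. This sketch handles only the suffixes that appear in these notes; the function names are hypothetical and the real Kubernetes quantity format supports more suffixes.

```python
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_cpu(quantity):
    """Convert a CPU quantity such as 1, "2" or "100m" into cores."""
    text = str(quantity)
    if text.endswith("m"):
        return int(text[:-1]) / 1000  # millicores
    return float(text)


def parse_memory(quantity):
    """Convert a memory quantity such as "256Mi" or "1Gi" into bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[:-2]) * factor
    return int(quantity)  # plain bytes


print(parse_cpu("100m"))      # 0.1
print(parse_memory("256Mi"))  # 268435456
print(parse_memory("1Gi"))    # 1073741824
```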

7. daemonsets

kube-proxy

networking

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: blue
  name: blue
spec:
  selector:
    matchLabels:
      app: blue
  template:
    metadata:
      labels:
        app: blue
    spec:
      containers:
        - image: nginx
          name: nginx

kubectl get daemonset   # or: kubectl get ds

Online lab

1. kubectl -n kube-system get pods | grep proxy

2. kubectl create deployment elastic --image=xxx --namespace=xxx --dry-run=client -o yaml > deploy.yaml

vim deploy.yaml   # change kind to DaemonSet

8. static pods

/etc/kubernetes/manifests

Restarted automatically; cannot be deleted or updated through the API server (read-only there)

created by the kubelet

deploy control plane components as static pods

Online lab

1. How many static pods exist in this cluster in all namespaces?

ls /etc/kubernetes/manifests

2.

kubectl run static-busybox --image=busybox --dry-run=client -o yaml > busybox.yaml

// busybox.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: static-busybox
  name: static-busybox
spec:
  containers:
    - image: busybox
      name: static-busybox
      command: ["/bin/sh", "-c", "sleep 1000"]
      resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

cp busybox.yaml /etc/kubernetes/manifests

3.

kubectl get node node01 -o wide

ssh node01

ps -ef | grep kubelet

grep -i static /var/lib/xxx

cd /etc/just

rm -f xxx.yaml

9. multi scheduler(todo)

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: static-busybox
  name: my-scheduler
spec:
  containers:
    - image: busybox
      name: static-busybox
    - command:
        - kube-scheduler
        - --address=
        - --scheduler-name=my-custom-scheduler
        - --leader-elect=false
status: {}

# pod.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: static-busybox
  name: static-busybox
spec:
  containers:
    - image: busybox
      name: static-busybox
  schedulerName: my-custom-scheduler

kubectl get events

kubectl logs my-custom-scheduler -n kube-system

Online lab

1. Deploy an additional scheduler to the cluster following the given specification. Use the manifest file used by kubeadm tool. Use a different port than the one used by the current one.

Namespace: kube-system

Name: my-scheduler

Status: Running

Custom Scheduler Name

answer: not solved yet

10. config scheduler

3. logging&monitor

// view CPU and memory usage

kubectl top node/pod

// view logs

kubectl logs -f xxpod1xx xxxpod2xxx

kubectl logs -f xxpodxx -c container

4. lifecycle

1. update & rollback

kubectl rollout status/history deployment/xx-deployment

replicas

kubectl set image deployment/myapp-deployment nginx=nginx:1.9.1

kubectl rollout undo deployment/xxx-deployment

kubectl get rs

strategy:
  type: Recreate / RollingUpdate

2. commands & arguments

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: static-busybox
  name: static-busybox
spec:
  containers:
    - image: busybox
      name: static-busybox
      command:
        - "sleep"
      args: ["1"]

Online lab

Create a pod with the given specifications. By default it displays a blue background. Set the given command line arguments to change it to green.

Pod Name: webapp-green

Image: kodekloud/webapp-color

Command line arguments: --color=green

args: ["--color=green"]

3. env

# Option 1: plain value
env:
  - name: APP_COLOR
    value: pink

# Option 2: configmap
env:
  - name: APP_COLOR
    valueFrom:
      configMapKeyRef: xxx

# Option 3: secret
env:
  - name: APP_COLOR
    valueFrom:
      secretKeyRef: xxx

4. configmap

// declarative
apiVersion: v1
data:
  APP_COLOR: darkblue
kind: ConfigMap
metadata:
  name: webapp-config-map
  namespace: default

// imperative
kubectl create cm xxx --from-literal=key=value

kubectl create cm xxx --from-file=app_config.properties

kubectl get cm

kubectl describe cm

# full usage inside a container:

# Option 1
env <[]Object>
  name <string>
  value <string>
  valueFrom <Object>
    configMapKeyRef <Object>
      key <string>
      name <string>
      optional <boolean>
    fieldRef <Object>
      apiVersion <string>
      fieldPath <string>
    resourceFieldRef <Object>
      containerName <string>
      divisor <string>
      resource <string>
    secretKeyRef <Object>
      key <string>
      name <string>
      optional <boolean>

# Option 2 (if validation fails with the "-" in front of envFrom, move the "-" down onto the items below)
envFrom <[]Object>
  - configMapRef <Object>
      name <string>
      optional <boolean>
  - secretRef <Object>
      name <string>
      optional <boolean>

5. secrets

// imperative
kubectl create secret generic xxx --from-literal=key1=value1 --from-literal=key2=value2

kubectl create secret generic xxx --from-file=xxx

// declarative
apiVersion: v1
kind: Secret
metadata:
  name:
data:
  xxx: <base64 value>   # echo -n "xx" | base64 [--decode]

kubectl get secret [xx -o yaml]

kubectl describe secret

// usage (if validation fails with the "-" in front of envFrom, move the "-" down onto the item below)
envFrom <[]Object>
  - secretRef <Object>
      name <string>

env:
  - name <string>
    valueFrom <Object>
      secretKeyRef <Object>
        key <string>
        name <string>

// volume
volumes:
  - name: app-secret-volume
    secret:
      secretName: app-sec
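The values under `data:` in a Secret are base64-encoded, not encrypted or hashed. A short Python sketch of the same round trip as `echo -n "xx" | base64` and `base64 --decode`; the helper names and the sample value `mysql` are made up for illustration.

```python
import base64


def encode_secret_value(plain):
    """Encode a string the way Secret data values are stored."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded):
    """Decode a Secret data value back to the original string."""
    return base64.b64decode(encoded).decode("utf-8")


encoded = encode_secret_value("mysql")
print(encoded)                       # bXlzcWw=
print(decode_secret_value(encoded))  # mysql
```

Anyone who can read the Secret can decode it, so base64 here is an encoding step, not a security measure.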

6. multi container pods

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: static-busybox
  name: static-busybox
spec:
  containers:
    - image: busybox
      name: static-busybox
    - image: xxx
      name: xxx1

7. init containers

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox:1.28
      command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
    - name: init-myservice
      image: busybox
      command: ['sh', '-c', 'git clone xx;']

If any of the initContainers fail to complete, Kubernetes restarts the Pod repeatedly until the Init Container succeeds.

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox:1.28
      command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
    - name: init-myservice
      image: busybox:1.28
      command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']
    - name: init-mydb
      image: busybox:1.28
      command: ['sh', '-c', 'until nslookup mydb; do echo waiting for mydb; sleep 2; done;']

Online lab

Edit the pod to add a sidecar container to send logs to Elastic Search. Mount the log volume to the sidecar container. Only add a new container. Do not modify anything else. Use the spec provided below.

Name: app

Container Name: sidecar

Container Image: kodekloud/filebeat-configured

Volume Mount: log-volume

Mount Path: /var/log/event-simulator/

Existing Container Name: app

Existing Container Image: kodekloud/event-simulator

//
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox:1.28
      command: ['sh', '-c', 'echo The app is running! && sleep 3600']
    - image: kodekloud/filebeat-configured
      name: sidecar
      volumeMounts:
        - mountPath: /var/log/event-simulator/
          name: log-volume

5. cluster maintenance

1. os update

https://v1-20.docs.kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

// drain (evict the pods)

kubectl drain node-1

// mark unschedulable

kubectl cordon node-2

// mark schedulable again

kubectl uncordon node-1

--------------------------

kubeadm upgrade plan

master:

kubectl cordon master

apt-mark unhold kubeadm && \
apt-get update && apt-get install -y kubeadm=1.20.0-00 && \
apt-mark hold kubeadm

kubeadm upgrade plan

kubeadm upgrade apply v1.20.0

apt-mark unhold kubelet kubectl && \
apt-get update && apt-get install -y kubelet=1.20.0-00 kubectl=1.20.0-00 && \
apt-mark hold kubelet kubectl

sudo systemctl daemon-reload

sudo systemctl restart kubelet

kubectl uncordon master

kubectl get nodes

node:

kubectl drain node-1

apt-get upgrade -y kubeadm=1.20.0-00

kubeadm upgrade node

apt-get upgrade -y kubelet=1.20.0-00 kubectl=1.20.0-00

sudo systemctl daemon-reload

sudo systemctl restart kubelet

kubectl get nodes

kubectl uncordon node-1

--------------------------

kubectl drain node-2

...

kubectl uncordon node-2

--------------------------

kubectl drain node-3

...

kubectl uncordon node-3

// cannot delete Pods not managed by ReplicationController, ReplicaSet, Job, DaemonSet or StatefulSet (use --force to override): default/hr-app

kubectl drain node01 --ignore-daemonsets --force

2. backup & restore(todo)

# backup

// resource configuration

kubectl get all --all-namespaces -o yaml > all.yaml

// etcd

kubectl describe pod -n kube-system | grep etcd   # find the etcd settings

ETCDCTL_API=3 etcdctl snapshot save snapshot.db --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key

ETCDCTL_API=3 etcdctl \
  snapshot status snapshot.db

// pv

# restore

// etcd

service kube-apiserver stop

ETCDCTL_API=3 etcdctl \
  snapshot restore snapshot.db --data-dir=/var/lib/etcd-from-backup

ls /var/lib/etcd-from-backup

cd /etc/kubernetes/manifests

vim etcd.yaml

volumes:
  - hostPath:
      path: /var/lib/etcd-from-backup
      type: xxx

systemctl daemon-reload

service etcd restart

service kube-apiserver start

kubectl get pods,deploy,svc

Online lab:

Where is the ETCD server certificate file located?

Note this path down as you will need to use it later

/etc/kubernetes/pki/server.crt

/etc/kubernetes/pki/etcd/peer.crt

/etc/kubernetes/pki/etcd/ca.crt

/etc/kubernetes/pki/etcd/server.crt   **** (answer)

6. security(todo)

1. authentication

kubectl create user user1   # not a real command: Kubernetes does not manage user accounts

kubectl list user   # not a real command

kubectl create sa sa1

kubectl get sa

// static password

command:

- kube-apiserver

- --basic-auth-file=

// static token

command:

- kube-apiserver

- --token-auth-file=

2. tls

ssh-keygen

cat ~/.ssh/authorized_keys

// tls in kubernetes

ca

server:

apiserver: apiserver.crt, apiserver.key (all the components below talk to the apiserver)

etcd: etcd.crt, etcd.key

kubelet: kubelet.crt, kubelet.key

client:

kube-scheduler: scheduler.key scheduler.crt

kube-proxy

kube-controller-manager

admin

// ca

openssl genrsa -out ca.key 2048   // set the password: 123456

openssl req -new -key ca.key -out ca.csr   // enter the password above; Common Name: hub.atguigu.com; at the last prompt (password change) just press Enter

cp ca.key ca.key.org

openssl rsa -in ca.key.org -out ca.key   // strip the password, otherwise later steps fail (they prompt for it)

openssl x509 -req -in ca.csr -signkey ca.key -out ca.crt

// admin

openssl genrsa -out admin.key 2048

openssl req -new -key admin.key -subj "/CN=kube-admin" -out admin.csr

openssl x509 -req -in admin.csr -CA ca.crt -CAkey ca.key -out admin.crt

...

curl https://kube-apiserver:6443/api/v1/pods --key admin.key --cert admin.crt --cacert ca.crt

clusters:
  - cluster:
      certificate-authority: ca.crt
      server: https://kube-apiserver:6443
    name: kubernetes

// view ca details

cat /etc/systemd/system/kube-apiserver.service

cat /etc/kubernetes/manifests/kube-apiserver.yaml

# apiserver

/etc/kubernetes/pki

openssl x509 -in /etc/kubernetes/pki/apiserver.crt -text -noout

Issuer: CN = etcd-ca
Subject:
Validity
  Not Before: Feb 4 15:07:34 2022 GMT
  Not After : Feb 4 15:07:34 2023 GMT
X509v3 Subject Alternative Name:
  DNS:controlplane, DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster.local, IP Address:10.96.0.1, IP Address:10.37.158.9

# inspect service logs

journalctl -u etcd.service -l

# view logs

kubectl logs -f etcd

docker ps -a | grep api

docker logs xxx

3. certificates api(todo)

https://kubernetes.io/zh/docs/reference/access-authn-authz/certificate-signing-requests/

openssl genrsa -out jane.key 2048

openssl req -new -key jane.key -subj "/CN=jane" -out jane.csr

cat jane.csr | base64

apiVersion: certificates.k8s.io/v1beta1
kind: CertificateSigningRequest
metadata:
  name: jane
spec:
  groups:
    - system:nodes
    - system:authenticated
  usages:
    - digital signature
    - key encipherment
    - client auth
  request: <output of: cat jane.csr | base64>   # clean the output up by hand in a text file first, otherwise it is rejected

kubectl get csr

kubectl certificate approve jane

kubectl certificate deny agent-smith

kubectl get csr jane -o yaml

cat /etc/kubernetes/manifests/kube-controller-manager.yaml

--cluster-signing-cert-file=pki/ca.crt

--cluster-signing-key-file=pki/ca.key

4. kube config(todo)

$HOME/.kube/config

curl https://xxx:6443/api/v1/pods --key admin.key --cert admin.crt --cacert ca.crt

kubectl get pods --server --client-key --client-certificate --certificate-authority

=

kubectl get pods --kubeconfig config

apiVersion: v1
kind: Config
current-context: user@cluster
clusters:
  - name: my-cluster
    cluster:
      certificate-authority: /etc/kubernetes/pki/ca.crt   # or certificate-authority-data: <cat ca.crt | base64>; decode with: echo "" | base64 --decode
      server:
contexts:
  - name: user@cluster
    context:
      cluster: my-cluster
      user: my-user
      namespace: xxx
users:
  - name: my-user
    user:
      client-certificate: /etc/kubernetes/pki/user/xxx.crt
      client-key: /etc/kubernetes/pki/user/xxx.key

// add the cluster details to the config file:

kubectl config --kubeconfig=my-kube-config set-cluster test-cluster-1 --server=https://controlplane:6443 --certificate-authority=/etc/kubernetes/pki/ca.crt

// add the user credentials to the config file:

kubectl config --kubeconfig=my-kube-config set-credentials dev-user --client-certificate=fake-cert-file --client-key=fake-key-seefile

// view contexts

kubectl config view --kubeconfig=my-config

// switch context

kubectl config use-context prod-user@cluster

kubectl config -h

Online lab (todo)

1. I would like to use the dev-user to access test-cluster-1. Set the current context to the right one so I can do that. Once the right context is identified, use the kubectl config use-context command.

2. We don't want to have to specify the kubeconfig file option on each command. Make the my-kube-config file the default kubeconfig.

Default kubeconfig file configured

5. api groups(todo)

curl https://localhost:6443/api/v1/version -k

curl https://localhost:6443 -k | grep "name"

core: /api/v1

namespaces, pods, rc

named: /apis

/apps /extensions /networking.k8s.io /storage

v1

(resources)     (verbs)
deployments     list
rs              get
statefulsets    create

kubectl proxy

curl http://localhost:8001 -k

6. auth

node

kube api --> kubelet

read: services, endpoints, nodes, pods

write: node status, pod status, events

abac

rbac

role permission

webhook

user --> kube api --> open policy agent

cat /etc/kubernetes/manifests/kube-apiserver.yaml

--authorization-mode=Node,RBAC[,Webhook]

kubectl describe role kube-proxy -n kube-system

PolicyRule:
  Resources   Non-Resource URLs  Resource Names  Verbs
  ---------   -----------------  --------------  -----
  configmaps  []                 [kube-proxy]    [get]

1. Which account is the kube-proxy role assigned to?

kubectl get rolebindings.rbac.authorization.k8s.io -A

kubeadm

7. rbac

# role
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: dev
rules:
  - apiGroups: [""]   # core api
    resources: ["pods"]
    verbs: ["get", "watch", "list"]
  - apiGroups: [""]   # core api
    resources: ["configmaps"]
    verbs: ["create"]

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: "2022-02-06T07:48:49Z"
  name: developer
  namespace: blue
  resourceVersion: "870"
  uid: 1e34b0a5-6b68-475c-8510-ca17258a3b51
rules:
  - apiGroups:
      - ""
    resourceNames:
      - blue-app
    resources:
      - pods
    verbs:
      - get
      - watch
      - create
      - delete
      - list

# roleBinding
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-binding
  namespace: default
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: dev
  apiGroup: rbac.authorization.k8s.io

kubectl get roles/rolebindings

kubectl describe role dev

kubectl describe rolebinding dev-binding

kubectl auth can-i create deployments --as dev

kubectl auth can-i create/list pods --as dev -n xxx

kubectl auth can-i delete nodes

# clusterRole (cluster-wide, across namespaces)
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: secret-reader
rules:
  - apiGroups: [""]
    resources: ["secrets"]   # e.g. secrets / nodes / ns / user / group
    verbs: ["get", "watch", "list"]

# clusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: read-secret-global
subjects:
  - kind: Group
    name: manager
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: secret-reader
  apiGroup: rbac.authorization.k8s.io
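What `kubectl auth can-i` answers can be approximated with a small rule check: a request is allowed when some rule lists its API group, resource and verb (and its name, if the rule restricts `resourceNames`). The Python sketch below is a simplified model with a hypothetical `allowed` helper; it ignores wildcards and subresources and is not the real authorizer.

```python
def allowed(rules, verb, resource, api_group="", name=None):
    """Simplified RBAC check against a list of Role rules."""
    for rule in rules:
        if api_group not in rule["apiGroups"]:
            continue
        if resource not in rule["resources"]:
            continue
        if verb not in rule["verbs"]:
            continue
        names = rule.get("resourceNames")
        if names and name not in names:
            continue  # the rule is limited to specific object names
        return True
    return False  # RBAC is deny-by-default


# Rules modelled on the "dev" Role in this section.
dev_rules = [
    {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "watch", "list"]},
    {"apiGroups": [""], "resources": ["configmaps"], "verbs": ["create"]},
]
print(allowed(dev_rules, "list", "pods"))                       # True
print(allowed(dev_rules, "create", "deployments", "apps"))      # False
```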

Online lab

1. Grant the dev-user permissions to create deployments in the blue namespace.

Remember to add both groups "apps" and "extensions".

Create Deployments

rules:
  - apiGroups:
      - ""
    resourceNames:
      - dark-blue-app
    resources:
      - pods
    verbs:
      - get
      - watch
      - create
      - delete
      - list
  - apiGroups: ["extensions", "apps"]
    resources:
      - deployments
    verbs: ["create"]

2. What namespace is the cluster-admin clusterrole part of?

Cluster roles are cluster-wide and not part of any namespace.

8. sa

Used to access the Kubernetes API. The token is created by Kubernetes and mounted at /var/run/secrets/kubernetes.io/serviceaccount

kubectl run nginx --image=hub.atguigu.com/library/nginx:v1

kubectl exec -it `kubectl get pods -l run=nginx -o=name | cut -d "/" -f2` -- ls /var/run/secrets/kubernetes.io/serviceaccount

kubectl exec -it `kubectl get pods -l run=nginx -o=name | cut -d "/" -f2` -- cat /var/run/secrets/kubernetes.io/serviceaccount/token

"""

ca.crt

namespace

token

"""

kubectl create sa dashboard-sa

kubectl get sa

kubectl describe sa dashboard-sa

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox:1.28
  serviceAccountName: dashboard-sa
  serviceAccount: xxxx
  automountServiceAccountToken: false

9. image security

docker tag nginx:latest hub.atguigu.com/mylibrary/nginx:v1

docker push hub.atguigu.com/mylibrary/nginx:v1

docker logout hub.atguigu.com

docker rmi

docker pull hub.atguigu.com/mylibrary/nginx:v1

# vim secret-dockerconfig.yaml
apiVersion: v1
kind: Pod
metadata:
  name: foo
spec:
  containers:
    - name: foo
      image: hub.atguigu.com/mylibrary/nginx:v1
  imagePullSecrets:
    - name: myregistrykey

kubectl create -f secret-dockerconfig.yaml

kubectl get pod foo   # the pod does not run yet

kubectl create secret docker-registry myregistrykey --docker-server=hub.atguigu.com --docker-username=admin --docker-password=Harbor12345 --docker-email="123@qq.com"

kubectl get pod foo   # the pod now runs

10. security context(***)

Can be used to set Linux capabilities for a container.

https://kubernetes.io/zh/docs/tasks/configure-pod-container/security-context/

apiVersion: v1
kind: Pod
metadata:
  name: foo
spec:
  containers:
    - name: foo
      image: hub.atguigu.com/mylibrary/nginx:v1
      securityContext:
        runAsUser: 1000
        capabilities:
          add: ["MAC_ADMIN", "NET_ADMIN", "SYS_TIME"]

11. network policy

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-policy
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              name: api-pod
        - namespaceSelector:
            matchLabels:
              name: api-pod
        - ipBlock:
            cidr: 192.168.1.1/20
      ports:
        - protocol: TCP
          port: 3306
  egress:
    - to:
        - ipBlock:
            cidr: 192.168.19.1/32
      ports:
        - protocol: TCP
          port: 80

kubectl get networkpolicies
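Which addresses an `ipBlock` CIDR covers can be checked with Python's standard `ipaddress` module. This sketch uses the two CIDRs from the policy above; the helper name `in_block` is hypothetical, and `strict=False` is needed only because `192.168.1.1/20` has host bits set.

```python
import ipaddress


def in_block(ip, cidr):
    """True if the address falls inside the CIDR block."""
    network = ipaddress.ip_network(cidr, strict=False)  # tolerate host bits
    return ipaddress.ip_address(ip) in network


print(ipaddress.ip_network("192.168.1.1/20", strict=False))  # 192.168.0.0/20
print(in_block("192.168.10.7", "192.168.1.1/20"))    # True
print(in_block("192.168.16.1", "192.168.1.1/20"))    # False
print(in_block("192.168.19.1", "192.168.19.1/32"))   # True
```

A /20 spans 192.168.0.0 through 192.168.15.255, while a /32 matches exactly one address.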

Online lab

11. Create a network policy to allow traffic from the Internal application only to the payroll-service and db-service. Use the spec given below. You might want to enable ingress traffic to the pod to test your rules in the UI.

Policy Name: internal-policy

Policy Type: Egress

Egress Allow: payroll

Payroll Port: 8080

Egress Allow: mysql

MySQL Port: 3306

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: internal-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      name: internal
  policyTypes:
    - Egress
    - Ingress
  ingress:
    - {}
  egress:
    - to:
        - podSelector:
            matchLabels:
              name: mysql
      ports:
        - protocol: TCP
          port: 3306
    - to:
        - podSelector:
            matchLabels:
              name: payroll
      ports:
        - protocol: TCP
          port: 8080
    - ports:
        - port: 53
          protocol: UDP
        - port: 53
          protocol: TCP

7. storage

1. storage in docker

/var/lib/docker
  aufs
  containers
  image
  volumes
    data_volumes

// image & container

Image layering

copy on write

container layer: read write

image layer: read only

// volumes

-v read --- read write

docker run -v data_volumes:/var/lib/mysql mysql

2. drivers

// storage drivers

zfs/overlay

// volume drivers

local/gfs/...

docker run -it --name mysql --volume-driver rexray/ebs --mount src=ebs-vol,target=/var/lib/mysql mysql

3. csi

cri: rkt, cri-o

cni: flannel, cilium, weaveworks

csi: dell emc, glusterfs

4. volumes

apiVersion: v1
kind: Pod
metadata:
  name: foo
spec:
  containers:
    - name: foo
      image: hub.atguigu.com/mylibrary/nginx:v1
      volumeMounts:
        - mountPath: /opt
          name: data-volume
  volumes:
    - name: data-volume
      hostPath:
        path: /data
        type: Directory

// volume types

hostPath

awsElasticBlockStore:
  volumeID:
  fsType: ext4

3. pv

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-voll
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  hostPath:
    path: /tmp/data
  # alternative volume source (use one, not both):
  # awsElasticBlockStore:
  #   volumeID: xxx
  #   fsType: ext4

kubectl get pv

4. pvc

// pv

labels:name: pv// pvc

selector:matchLabels:name: my-pv// pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:name: myclaim

spec:accessModes:- ReadWriteOncevolumeMode: Filesystemresources:requests:storage: 500MistorageClassName: slowselector:matchLabels:release: "stable"matchExpressions:- {key: environment, operator: In, values: [dev]}kubectl get pvc// pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:name: pv-voll

spec:accessMode:- ReadWriteOncecapacity:storage: 1GipersistentVolumeReclaimPolicy: Retain/Delete/RecycleawsElasticBlockStore:volumeId: xxxfsType: ext4// pod.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: static-busybox
  name: static-busybox
spec:
  containers:
  - image: busybox
    name: static-busybox
    command:
    - "/bin/sh"
    - "-c"
    args: ["sleep 1"]
    volumeMounts:
    - mountPath: /opt
      name: data-volume
  volumes:
  - name: data-volume
    persistentVolumeClaim:
      claimName: myclaim
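A simplified Python sketch of the checks behind PVC-to-PV binding: enough capacity, compatible access modes, same storage class, and a matching label selector. The dict layout and the names `to_bytes` and `can_bind` are assumptions for illustration; the real controller has more rules (for example default storage classes and preferring the smallest fitting volume).

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity):
    """Convert a quantity such as '500Mi' or '1Gi' to bytes (binary suffixes only)."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def can_bind(pv, pvc):
    """Simplified check: capacity, access modes, storage class and label selector."""
    pv_labels = pv.get("labels", {})
    return (
        to_bytes(pv["capacity"]) >= to_bytes(pvc["request"])
        and set(pvc["accessModes"]) <= set(pv["accessModes"])
        and pv.get("storageClassName", "") == pvc.get("storageClassName", "")
        and all(pv_labels.get(k) == v for k, v in pvc.get("matchLabels", {}).items())
    )


# Flattened stand-ins for a 1Gi volume and a 500Mi claim.
pv = {"capacity": "1Gi", "accessModes": ["ReadWriteOnce"], "labels": {"name": "my-pv"}}
pvc = {"request": "500Mi", "accessModes": ["ReadWriteOnce"], "matchLabels": {"name": "my-pv"}}
```

Binding is one-to-one, so a 500Mi claim bound to a 1Gi volume takes the whole volume.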

7. storage class (***)

// static provision

// dynamic provision

// sc.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: google-storage
provisioner: kubernetes.io/gce-pd
parameters:
  # glusterfs-style parameters:
  resturl: "http://192.168.10.100:8080"
  restuser: ""
  secretNamespace: ""
  secretName: ""
  # or, gce-pd parameters:
  type: pd-standard  # or pd-ssd
  replication-type: none
reclaimPolicy: Retain
allowVolumeExpansion: true
mountOptions:
- debug
volumeBindingMode: Immediate

// pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-voll
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 1Gi
  gcePersistentDisk:
    pdName: pd-disk
    fsType: ext4

// pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
  storageClassName: google-storage

// pod.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: static-busybox
  name: static-busybox
spec:
  containers:
  - image: busybox
    name: static-busybox
    command:
    - "/bin/sh"
    - "-c"
    args: ["sleep 1"]
    volumeMounts:
    - mountPath: /opt
      name: data-volume
  volumes:
  - name: data-volume
    persistentVolumeClaim:
      claimName: myclaim

8. network

1. basic

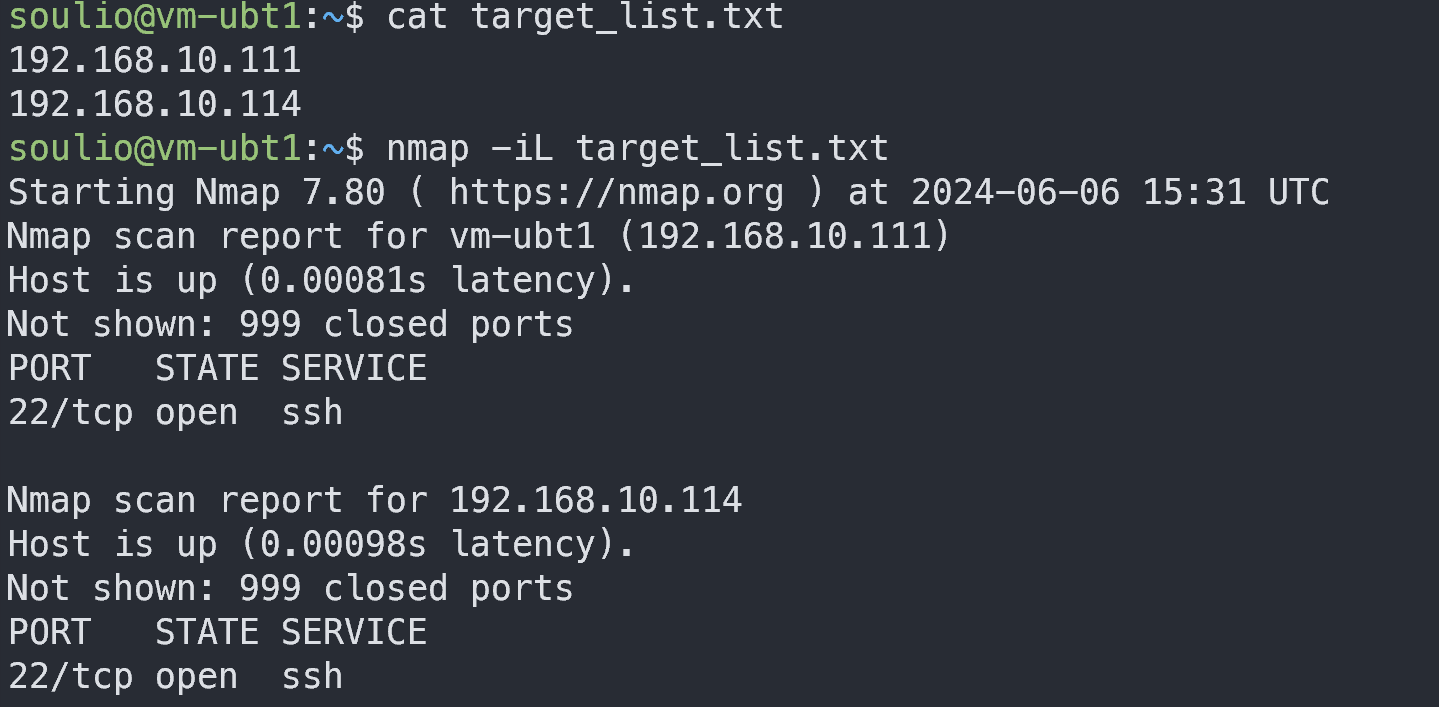

1. switch and routing

// switch: same subnet
ip link
ip addr add 192.168.1.10/24 dev eth0
ip addr add 192.168.1.11/24 dev eth0
ping 192.168.1.1

// routing: across subnets
192.168.1.1 / 192.168.2.1: one port each on the router
route
ip route add 192.168.2.0/24 via 192.168.1.1
likewise:
ip route add 192.168.1.0/24 via 192.168.2.1

// gateway
ip route add default via 192.168.2.1
cat /proc/sys/net/ipv4/ip_forward
0
echo 1 > /proc/sys/net/ipv4/ip_forward

dns
ping 192.168.1.11
ping db

// dns server 192.168.1.100
cat /etc/hosts
192.168.1.11 db
192.168.1.11 www.xxx.com

// sub server
cat /etc/resolv.conf
nameserver 192.168.1.100
nameserver 8.8.8.8

// adjusting lookup priority
cat /etc/nsswitch.conf
hosts: files dns

domain names
www baidu .com
subdomain, top level domain name, root
apps.google.com: org dns -> root dns -> .com dns -> google dns -> ip -> cache

root dns:
192.168.1.10 web.mycompany.com
cat /etc/resolv.conf
nameserver 192.168.1.100
search mycompany.com prod.mycompany.com

cname:
foot.web-server  eat.web-server / hungry.web-server mapping

nslookup www.google.com
name: www.google.com
address: 172.217.0.132
dig www.google.com

network namespace:
ps aux
ip netns add red
ip netns add blue
ip netns
ip link
ip netns exec red ip link
ip -n red link
arp node01
ip netns exec red arp
route
ip netns exec red route
ip link add veth-red type veth peer name veth-blue
ip link set veth-red netns red
ip link set veth-blue netns blue
ip -n red addr add 192.168.15.1 dev veth-red
ip -n blue addr add 192.168.15.2 dev veth-blue
ip -n red link set veth-red up
ip -n blue link set veth-blue up

linux bridge:
ip addr add 192.168.15.5/24 dev v-net-0
namespace 192.168.15.5 --> 192.168.1.3
ip netns exec blue ping 192.168.1.3
ip netns exec blue route
ip netns exec blue ip route add 192.168.1.0/24 via 192.168.15.5
iptables -t nat -A POSTROUTING -s 192.168.15.0/24 -j MASQUERADE
ip netns exec blue ping 8.8.8.8
ip netns exec blue route
ip netns exec blue ip route add default via 192.168.15.5
ip netns exec blue ping 8.8.8.8

docker network
host bridge
docker network ls
ip link
ip addr
ip netns
docker inspect xxx
ip link: shows the vethxxx interface attached to the bridge
ip -n (ip netns) link: shows the container's eth0 interface
ip -n (ip netns) addr: shows the container IP
ip addr: shows the IP on the docker0 bridge
docker run -p 8080:80 nginx
iptables -t nat -A PREROUTING -p tcp --dport 8080 -j DNAT --to-destination <container-ip>:80
iptables -nvL -t nat

cni:
bridge add <cid> <namespace>
bridge add 2exx /var/run/netns/2exx
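To make the switch / routing / gateway distinction concrete, here is a small Python sketch of a routing table lookup (longest-prefix match) using the standard `ipaddress` module. The table is for the host 192.168.1.10; the default route via 192.168.1.1 is an assumption for that host, and `ROUTES` and `next_hop` are hypothetical names.

```python
import ipaddress

# (destination network, gateway); a gateway of None means directly connected.
ROUTES = [
    ("192.168.1.0/24", None),            # same subnet, reached through the switch
    ("192.168.2.0/24", "192.168.1.1"),   # ip route add 192.168.2.0/24 via 192.168.1.1
    ("0.0.0.0/0", "192.168.1.1"),        # default gateway (assumed for this host)
]


def next_hop(dest, routes=ROUTES):
    """Return the gateway for dest using longest-prefix match; None means on-link."""
    addr = ipaddress.ip_address(dest)
    matches = [
        (ipaddress.ip_network(net), gateway)
        for net, gateway in routes
        if addr in ipaddress.ip_network(net)
    ]
    if not matches:
        raise LookupError(f"network unreachable: {dest}")
    # The most specific (longest prefix) route wins over the default route.
    _, gateway = max(matches, key=lambda match: match[0].prefixlen)
    return gateway
```

Without the default route, a lookup for 8.8.8.8 would raise, which corresponds to the "Network is unreachable" error seen before `ip route add default` is run.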

2. pod network

pods on the same node

pods on different nodes

ip addr add 10.244.1.1/24 dev v-net-0
ip addr add 10.244.2.1/24 dev v-net-0
ip addr add 10.244.3.1/24 dev v-net-0
ip addr set
ip -n xxx addr add
ip -n xxx route add
ip -n xxx link set
ip link set

*****************************************************

kubelet --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/etc/cni/bin --network-plugin=cni
ps -ef | grep kubelet
ls /opt/cni/bin // binaries, default
ls /etc/cni/net.d // config files, default
cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep cluster-ip-range

*****************************************************

using weave to get from 10.244.1.2 to 10.244.2.2

kubectl exec busybox -- ip route

3. ipam

ip address management

ip = get_free_ip_from_host_local()

ip -n <namespace> addr add

ip -n <namespace> route add

cat /etc/cni/net.d/net-script.conf
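A minimal Python sketch of what a host-local style allocator could do behind `get_free_ip_from_host_local()`: hand out the first unused address of the node's pod subnet. The signature, the in-memory `allocated` set and the reserved gateway address are assumptions; the real host-local plugin keeps its state on disk.

```python
import ipaddress


def get_free_ip_from_host_local(subnet, allocated, gateway=None):
    """Return the first unused host address in subnet and record it in allocated."""
    reserved = set(allocated)
    if gateway:
        reserved.add(gateway)  # the bridge address is never handed to a pod
    for host in ipaddress.ip_network(subnet).hosts():
        ip = str(host)
        if ip not in reserved:
            allocated.add(ip)
            return ip
    raise RuntimeError(f"no free addresses left in {subnet}")


allocated = set()
first = get_free_ip_from_host_local("10.244.1.0/24", allocated, gateway="10.244.1.1")
second = get_free_ip_from_host_local("10.244.1.0/24", allocated, gateway="10.244.1.1")
```

The returned address is what would then be passed to `ip -n <namespace> addr add`.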

4. service network

clusterip

nodeport

loadbalancer

externalname

kube-proxy --proxy-mode [ userspace | iptables | ipvs ]

ps aux | grep kube-apiserver

iptables -L -t nat | grep db-service

cat /var/log/kube-proxy.log

kubectl -n kube-system logs kube-proxy-log

5. coredns

default dns apps

10.244.1.5 web-service 10.107.37.188 10.107.37.188

test web-service

curl http://web-service.apps.svc.cluster.local

(servicename/hostname.namespace.type.root, where type is svc or pod)

pod dns

cat /etc/hosts
web xxx.xx.xx.xx

cat /etc/resolv.conf
test xxx.xx.xx.xx

coredns --- rs

cat /etc/coredns/Corefile

kubectl get cm -n kube-system

kubectl get service -n kube-system

host web-service

cat /etc/resolv.conf // search default.svc.cluster.local svc.cluster.local cluster.local

online lab

Set the DB_Host environment variable to use mysql.payroll.

kubectl edit deploy webapp // set DB_Host to mysql.payroll
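Why a short name like web-service or mysql.payroll resolves inside a pod: the resolver appends the search domains from /etc/resolv.conf. A Python sketch of the candidate names tried, in order. The `ndots=5` default is an assumption (the usual value in a pod's resolv.conf, not shown in these notes), and `candidate_names` is a hypothetical helper.

```python
def candidate_names(name, search, ndots=5):
    """List the fully qualified names a stub resolver would try, in order."""
    if name.endswith("."):
        return [name]                    # already fully qualified, no search list
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    if name.count(".") >= ndots:
        return [absolute] + expanded     # enough dots: try the name as-is first
    return expanded + [absolute]


# Search list of a pod in the default namespace, as in the resolv.conf line above.
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
```

For mysql.payroll the first candidate (mysql.payroll.default.svc.cluster.local.) does not exist; the second one, mysql.payroll.svc.cluster.local., is the service in the payroll namespace.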

6. ingress

[diagram: google cloud platform, port 38080 -> ingress -> wear-service, video-service -> wear pods, video pods]

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: static-busybox
  name: static-busybox
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx-ingress
  template:
    metadata:
      labels:
        name: nginx-ingress
    spec:
      containers:
      - image: busybox
        name: static-busybox
        args:
        env:
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443

apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    name: http
  - port: 443
    targetPort: 443
    protocol: TCP
    name: https
  selector:
    name: nginx-ingress

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration

kind: ServiceAccount
apiVersion: v1
metadata:
  name: nginx-ingress-sa

kind: Ingress
apiVersion: extensions/v1beta1  # removed in Kubernetes 1.22; newer clusters use networking.k8s.io/v1 with a different backend schema
metadata:
  name: ingress-wear
spec:

option 1:

  backend:
    serviceName: wear-service
    servicePort: 80

option 2:

  rules:
  - http:
      paths:
      - path: /wear
        backend:
          serviceName: wear-service
          servicePort: 80
      - path: /watch
        backend:
          serviceName: watch-service
          servicePort: 80

option 3:

  rules:
  - host: wear.my-online-store.com
    http:
      paths:
      - backend:
          serviceName: wear-service
          servicePort: 80
  - host: watch.my-online-store.com
    http:
      paths:
      - backend:
          serviceName: watch-service
          servicePort: 80
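A rough Python sketch of how the path-based and host-based rules pick a backend service. This is a simplification of what an ingress controller does (no pathType handling, no TLS), and `route`, `PATH_RULES` and `HOST_RULES` are hypothetical names.

```python
# Path-based rules: any host, routed by URL path prefix.
PATH_RULES = [
    {"path": "/wear", "service": "wear-service"},
    {"path": "/watch", "service": "watch-service"},
]

# Host-based rules: routed by the Host header.
HOST_RULES = [
    {"host": "wear.my-online-store.com", "service": "wear-service"},
    {"host": "watch.my-online-store.com", "service": "watch-service"},
]


def route(rules, host, path, default=None):
    """Pick the backend whose host matches and whose path is the longest prefix."""
    best = None
    for rule in rules:
        if rule.get("host") not in (None, host):
            continue  # the rule is bound to a different host
        prefix = rule.get("path", "/")
        # Match the exact path or a sub-path, so /wearables does not match /wear.
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            if best is None or len(prefix) > len(best.get("path", "/")):
                best = rule
    return best["service"] if best else default
```

The `default` argument plays the role of the single default backend: it is used when no rule matches.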

9. others

1. ha

cluster:

nginx/haproxy

master master master

etcd:

etcd cluster

2. trouble shooting

// application failed
# check service status
curl http://web-service-ip:node-port
kubectl describe service web-service
# check endpoint
kubectl get endpoints
# check pod
kubectl get pod
kubectl describe pod xxx
kubectl logs -f podxxx
# check dependent applications

// control plane failed
# check node status
kubectl get nodes
kubectl get pods
# check controlplane pods
kubectl get pods -n kube-system
# check controlplane services
service kube-apiserver status
service kube-controller-manager status
service kube-scheduler status
service kubelet status
service kube-proxy status
# check service log
kubectl logs kube-apiserver-master -n kube-system
sudo journalctl -u kube-apiserver

// worker node failed
# check node status
kubectl get nodes
kubectl describe node work-1
# check node
top
df -h
# check kubelet status
service kubelet status
sudo journalctl -u kubelet

// Check Certificates
openssl x509 -in /var/lib/kubelet/worker-1.crt -text

// etcd failure

// network failure

// storage failure

3. advanced command

kubectl get nodes -o json // useful when there are many nodes

kubectl get pods -o=jsonpath='{ .items[0].spec.containers[0].image }'

kubectl get nodes -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.capacity.cpu}{"\n"}{end}'

kubectl get nodes -o=custom-columns=<column-name>:<json-path>,<column-name>:<json-path>

kubectl get nodes -o=custom-columns=node:.metadata.name,cpu:.status.capacity.cpu

kubectl get nodes --sort-by=.status.capacity.cpu
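To see which part of the JSON the `.metadata.name` and `.status.capacity.cpu` paths select, here is a Python sketch that walks the same structure. The JSON is a made-up, heavily trimmed stand-in for `kubectl get nodes -o json` output, and it assumes whole-number CPU counts.

```python
import json

# Hypothetical, heavily trimmed output of `kubectl get nodes -o json`.
NODES_JSON = """
{"items": [
  {"metadata": {"name": "master"}, "status": {"capacity": {"cpu": "4"}}},
  {"metadata": {"name": "node01"}, "status": {"capacity": {"cpu": "2"}}}
]}
"""


def node_cpu_table(raw):
    """Return (name, cpu) pairs sorted by CPU count, lowest first."""
    items = json.loads(raw)["items"]
    # Same fields as the jsonpath range loop: .metadata.name and .status.capacity.cpu
    rows = [(node["metadata"]["name"], node["status"]["capacity"]["cpu"]) for node in items]
    return sorted(rows, key=lambda row: int(row[1]))


for name, cpu in node_cpu_table(NODES_JSON):
    print(f"{name}\t{cpu}")
```

Each `items[*]` element is one node, so the range loop in jsonpath corresponds to the list comprehension here.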